Two-person Graph Convolutional Network for Skeleton-based Human Interaction Recognition


Aug 12, 2022

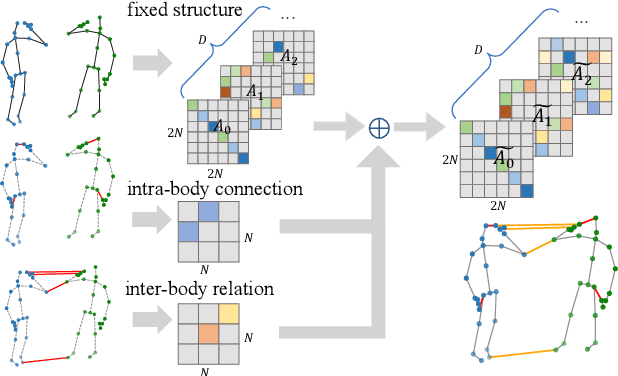

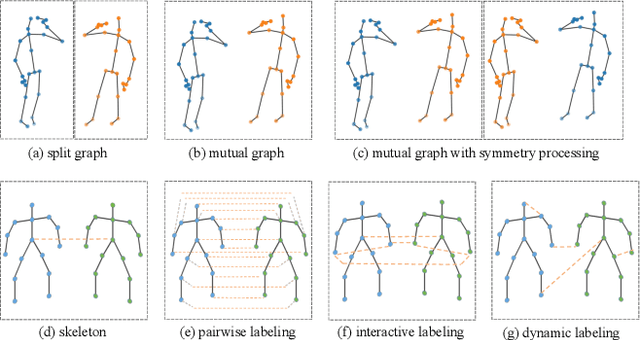

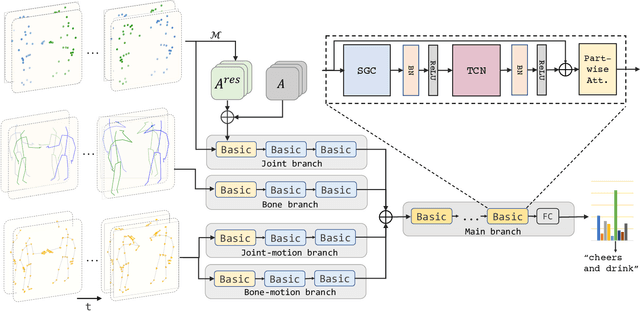

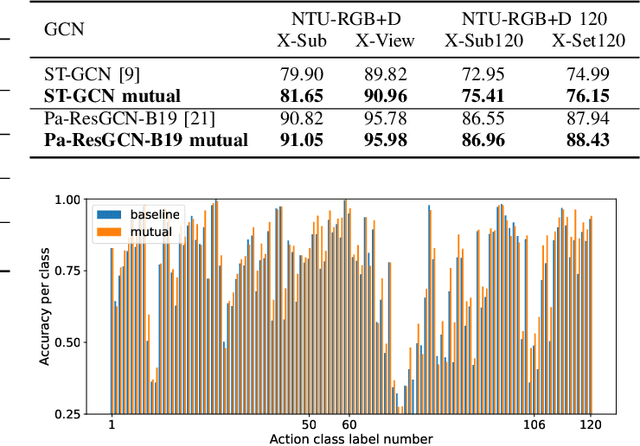

Graph Convolutional Networks (GCNs) outperform previous methods in skeleton-based human action recognition, including the human-human interaction recognition task. However, when dealing with interaction sequences, current GCN-based methods simply split the two-person skeleton into two discrete sequences and perform graph convolution on each separately, in the manner of single-person action classification. Such an operation ignores rich interactive information and hinders effective spatial relationship modeling for semantic pattern learning. To overcome this shortcoming, we introduce a novel unified two-person graph that represents spatial interaction correlations between joints. We also propose a properly designed graph labeling strategy that lets our GCN model learn discriminative spatial-temporal interactive features. Experiments show accuracy improvements for both interactions and individual actions when the proposed two-person graph topology is used. Finally, we propose a Two-person Graph Convolutional Network (2P-GCN). The proposed 2P-GCN achieves state-of-the-art results on four benchmarks of three interaction datasets: SBU, NTU-RGB+D, and NTU-RGB+D 120.
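The contrast between per-person graphs and a unified two-person graph can be illustrated with a small sketch. This is not the authors' implementation: the 5-joint skeleton, the choice of hand-to-hand inter-body edges, and the helper names `two_person_adjacency` and `aggregate` are all assumptions made for illustration, and the paper's graph labeling strategy is not modeled here.

```python
# Toy 5-joint skeleton (hypothetical layout, not a dataset's real joint set).
# 0: torso, 1: head, 2: left hand, 3: right hand, 4: feet
NUM_JOINTS = 5
INTRA_BODY_EDGES = [(0, 1), (0, 2), (0, 3), (0, 4)]
# Assumed inter-body links: (joint of person A, joint of person B).
INTER_BODY_EDGES = [(2, 3), (3, 2)]


def two_person_adjacency(num_joints, intra_edges, inter_edges):
    """Build a symmetric (2N x 2N) adjacency matrix with self-loops.

    Person A occupies indices 0..N-1, person B occupies N..2N-1.
    """
    n = 2 * num_joints
    adj = [[0] * n for _ in range(n)]
    for i in range(n):
        adj[i][i] = 1  # self-loop
    for person in (0, 1):
        offset = person * num_joints
        for a, b in intra_edges:
            adj[offset + a][offset + b] = 1
            adj[offset + b][offset + a] = 1
    for a, b in inter_edges:
        adj[a][num_joints + b] = 1
        adj[num_joints + b][a] = 1
    return adj


def aggregate(adj, features):
    """One mean-aggregation step: each joint averages its neighbours' features."""
    out = []
    for row in adj:
        neighbours = [features[j] for j, linked in enumerate(row) if linked]
        out.append(sum(neighbours) / len(neighbours))
    return out


# Unified graph (with inter-body edges) vs. two disconnected single-person graphs.
unified = two_person_adjacency(NUM_JOINTS, INTRA_BODY_EDGES, INTER_BODY_EDGES)
separate = two_person_adjacency(NUM_JOINTS, INTRA_BODY_EDGES, [])

# Scalar feature per joint: 1.0 for person A, 0.0 for person B.
features = [1.0] * NUM_JOINTS + [0.0] * NUM_JOINTS
mixed = aggregate(unified, features)
isolated = aggregate(separate, features)
```

With the disconnected graphs, `isolated` keeps each person's features unchanged, so no information crosses between the two skeletons. With the unified graph, the hand joints in `mixed` take values between 0 and 1, which shows in miniature how inter-body edges let a graph convolution see the other person's joints.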
