Two is a crowd: tracking relations in videos

Paper and Code

Aug 11, 2021

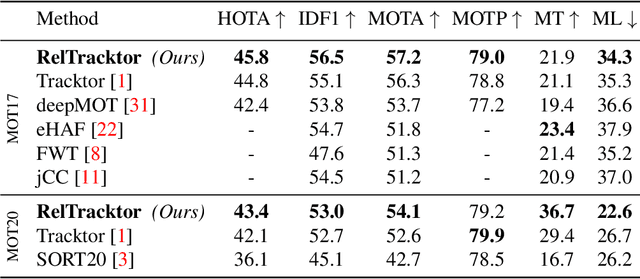

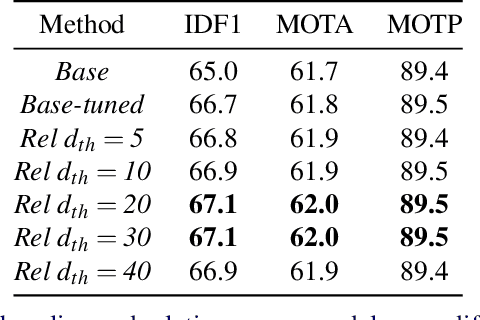

Tracking multiple objects individually differs from tracking groups of related objects. When an object is part of a group, its trajectory depends on the trajectories of the other group members. Most current state-of-the-art trackers track each object independently, with a mechanism to handle overlapping trajectories where necessary. Such an approach does not take inter-object relations into account, which can make tracking of group members unreliable, especially in crowded scenes where occlusions degrade individual cues. To overcome these limitations and to extend such trackers to crowded scenes, we propose a plug-in Relation Encoding Module (REM). REM encodes relations between tracked objects by running message passing over a corresponding spatio-temporal graph, computing a relation embedding for each tracked object. Our experiments on MOT17 and MOT20 demonstrate that the baseline tracker improves its results after a simple extension with REM. The proposed module allows tracking of severely or even fully occluded objects by exploiting relational cues.
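To make the idea of message passing over a spatio-temporal graph concrete, here is a minimal, unlearned sketch in plain Python. It is not the REM architecture. It assumes nodes are (object, frame) pairs, that spatial edges link all objects within a frame, that temporal edges link the same object across consecutive frames, and that messages are combined by simple mean aggregation. The function names `build_spatiotemporal_edges` and `message_passing` are hypothetical and chosen only for illustration.

```python
from itertools import combinations


def build_spatiotemporal_edges(num_objects, num_frames):
    """Return an adjacency dict over (object, frame) nodes.

    Assumption for illustration: fully connected within a frame,
    plus links between the same object in consecutive frames.
    """
    nodes = [(o, t) for t in range(num_frames) for o in range(num_objects)]
    neighbors = {n: set() for n in nodes}
    for t in range(num_frames):
        # Spatial edges: every pair of objects in the same frame.
        for a, b in combinations(range(num_objects), 2):
            neighbors[(a, t)].add((b, t))
            neighbors[(b, t)].add((a, t))
    for o in range(num_objects):
        # Temporal edges: the same object in consecutive frames.
        for t in range(num_frames - 1):
            neighbors[(o, t)].add((o, t + 1))
            neighbors[(o, t + 1)].add((o, t))
    return neighbors


def message_passing(features, neighbors, rounds=2):
    """Mix each node's state with the mean of its neighbours' states.

    A learned module would use trainable update functions; the fixed
    0.5/0.5 mix here is a stand-in chosen for simplicity.
    """
    state = {n: list(v) for n, v in features.items()}
    for _ in range(rounds):
        new_state = {}
        for node, nbrs in neighbors.items():
            if not nbrs:
                new_state[node] = state[node]
                continue
            dim = len(state[node])
            mean_msg = [sum(state[m][d] for m in nbrs) / len(nbrs)
                        for d in range(dim)]
            new_state[node] = [0.5 * s + 0.5 * m
                               for s, m in zip(state[node], mean_msg)]
        state = new_state
    return state


# Toy usage: three objects over two frames, with 2-D positions as features.
graph = build_spatiotemporal_edges(num_objects=3, num_frames=2)
positions = {(o, t): [float(o), float(t)] for (o, t) in graph}
embeddings = message_passing(positions, graph, rounds=2)
```

After a few rounds, each node's vector reflects the states of the objects around it and of its own past and future, which is the intuition behind using relational cues when an object's own appearance is occluded.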
