Two Approaches to Supervised Image Segmentation


Aug 03, 2023

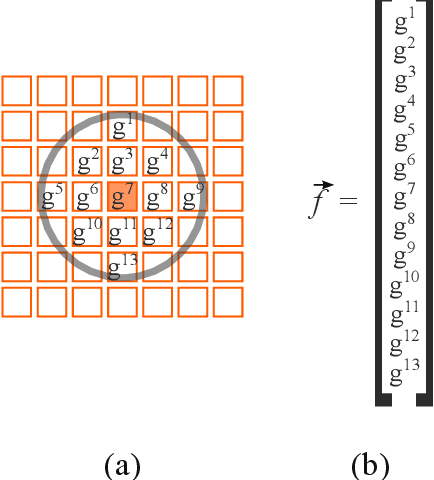

Though performed almost effortlessly by humans, segmenting 2D gray-scale or color images into regions of interest (e.g., background, objects, or portions of objects) constitutes one of the greatest challenges in science and technology as a consequence of the dimensionality reduction involved (3D to 2D), noise, reflections, shadows, and occlusions, among many other possible effects. While a large number of interesting related approaches have been suggested over the last decades, it was mainly thanks to the recent development of deep learning that more effective and general solutions have been obtained, and these currently constitute the basic comparison reference for this type of operation. Also developed recently, a multiset-based methodology has been described that is capable of achieving encouraging image segmentation performance, combining spatial accuracy, stability, and robustness while requiring few computational resources (hardware and/or training and recognition time). The interesting features of the multiset neurons methodology mostly follow from the enhanced selectivity and sensitivity, as well as the good robustness to data perturbations and outliers, allowed by the coincidence similarity index on which the multiset approach to supervised image segmentation is based. After describing the deep learning and multiset neurons approaches, the present work develops two comparison experiments between them, aimed primarily at illustrating their respective main features when applied to the specific type of data and parameter configurations adopted. While the deep learning approach confirmed its potential for performing image segmentation, the alternative multiset methodology allowed for enhanced accuracy while requiring few computational resources.
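The abstract names the coincidence similarity index but does not define it. As a rough illustration only, the sketch below assumes the definition commonly given in the multiset literature for non-negative feature vectors: the product of the multiset Jaccard index (sum of element-wise minima over sum of element-wise maxima) and the interiority, or overlap, index (sum of minima over the smaller of the two totals). The function names and example vectors are hypothetical, and the paper's actual formulation may differ, for instance by including additional parameters.

```python
def jaccard_index(x, y):
    """Multiset Jaccard index for non-negative vectors (assumed definition)."""
    denom = sum(max(a, b) for a, b in zip(x, y))
    return sum(min(a, b) for a, b in zip(x, y)) / denom if denom > 0 else 0.0


def interiority_index(x, y):
    """Interiority (overlap) index for non-negative vectors (assumed definition)."""
    denom = min(sum(x), sum(y))
    return sum(min(a, b) for a, b in zip(x, y)) / denom if denom > 0 else 0.0


def coincidence_index(x, y):
    """Coincidence similarity, taken here as Jaccard times interiority."""
    return jaccard_index(x, y) * interiority_index(x, y)


# Hypothetical feature vectors, e.g. gray levels sampled around a pixel.
prototype = [2, 1]
candidate = [1, 1]
similarity = coincidence_index(prototype, candidate)
```

Under these assumptions, identical vectors score 1 and vectors with no overlap score 0. Because two factors no greater than 1 are multiplied, partial matches are penalized more sharply than with the Jaccard index alone, which is one way to read the "enhanced selectivity" mentioned in the abstract.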
