TripleE: Easy Domain Generalization via Episodic Replay

Oct 04, 2022

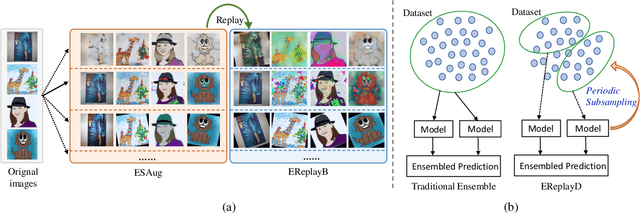

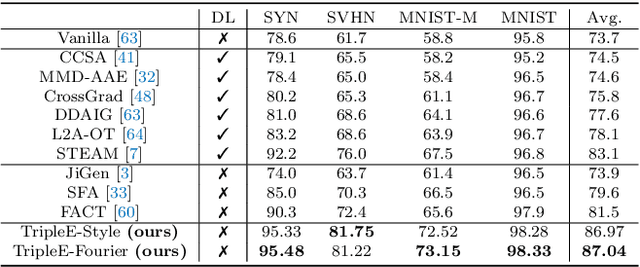

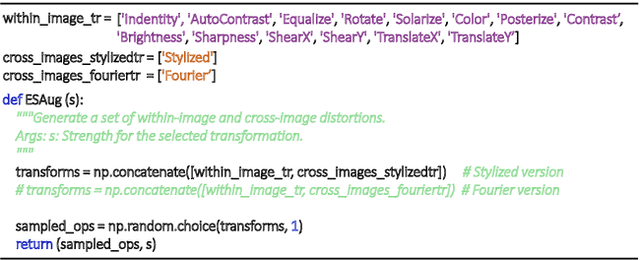

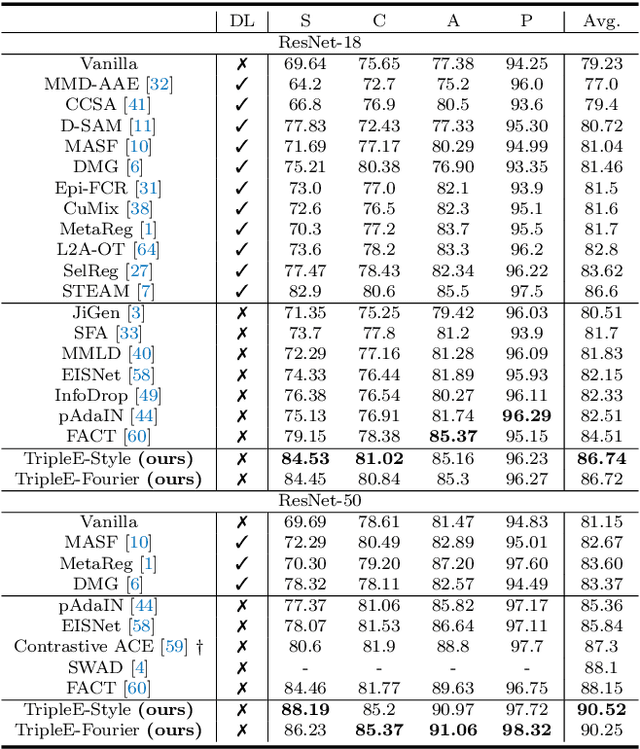

Learning to generalize a model to unseen domains is an important area of research. In this paper, we propose TripleE. Its main idea is to encourage the network to focus on training on subsets (learning with replay) and to enlarge the data space when learning on those subsets. Learning with replay contains two core designs, EReplayB and EReplayD, which apply the replay schema to the batch and the dataset, respectively. As a result, the network focuses on learning from subsets instead of visiting the global set at a glance, which increases model diversity for ensembling. To enlarge the data space when learning on subsets, we verify that an exhaustive and singular augmentation (ESAug) performs surprisingly well at expanding the data space of subsets during replays. Our model, dubbed TripleE, is frustratingly easy and is based on simple augmentation and ensembling. Without bells and whistles, TripleE surpasses prior art on six domain generalization benchmarks, showing that this approach could serve as a stepping stone for future research in domain generalization.
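The abstract does not spell out how the three components fit together, so the following is only a minimal sketch of one possible reading, not the authors' implementation. It assumes that replay on the dataset means training on disjoint subsets, that replay on the batch means revisiting each mini-batch several times, and that ESAug applies every augmentation in a pool (exhaustive), one at a time (singular). All names (`split_into_subsets`, `replay_batch`, `training_schedule`, `ensemble_average`) and the toy augmentations are hypothetical.

```python
import random
from typing import Callable, Iterator, List, Tuple

Sample = List[float]
Augment = Callable[[Sample], Sample]

# Toy stand-ins for image augmentations (assumed, not from the paper).
def identity(x: Sample) -> Sample:
    return list(x)

def flip(x: Sample) -> Sample:
    return list(reversed(x))

def scale(x: Sample) -> Sample:
    return [2.0 * v for v in x]

AUG_POOL: List[Augment] = [identity, flip, scale]

def split_into_subsets(dataset: List[Sample], num_subsets: int, seed: int = 0) -> List[List[Sample]]:
    """Dataset-level replay (assumed reading): shuffle, then split into disjoint subsets."""
    rng = random.Random(seed)
    indices = list(range(len(dataset)))
    rng.shuffle(indices)
    return [[dataset[i] for i in indices[k::num_subsets]] for k in range(num_subsets)]

def replay_batch(batch: List[Sample], aug_pool: List[Augment]) -> Iterator[List[Sample]]:
    """Batch-level replay (assumed reading): revisit the same batch once per
    augmentation, applying exactly one augmentation per visit."""
    for aug in aug_pool:
        yield [aug(sample) for sample in batch]

def training_schedule(dataset: List[Sample], num_subsets: int, batch_size: int,
                      aug_pool: List[Augment]) -> List[Tuple[int, List[Sample]]]:
    """List every (subset_id, augmented_batch) step a trainer would consume."""
    steps = []
    for subset_id, subset in enumerate(split_into_subsets(dataset, num_subsets)):
        for start in range(0, len(subset), batch_size):
            batch = subset[start:start + batch_size]
            for view in replay_batch(batch, aug_pool):
                steps.append((subset_id, view))
    return steps

def ensemble_average(predictions: List[List[float]]) -> List[float]:
    """Average class probabilities from models trained on different subsets."""
    n = len(predictions)
    return [sum(column) / n for column in zip(*predictions)]

if __name__ == "__main__":
    data = [[float(i), float(i + 1)] for i in range(6)]
    steps = training_schedule(data, num_subsets=2, batch_size=2, aug_pool=AUG_POOL)
    print(len(steps), "training steps")
    print(ensemble_average([[0.25, 0.75], [0.75, 0.25]]))
```

In a real setup, one model would be trained per subset on the steps carrying its `subset_id`, and the per-model predictions would be combined at test time. Plain averaging is used here only as the simplest ensembling rule.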
