TreeCaps: Tree-Based Capsule Networks for Source Code Processing

Paper and Code

Sep 05, 2020

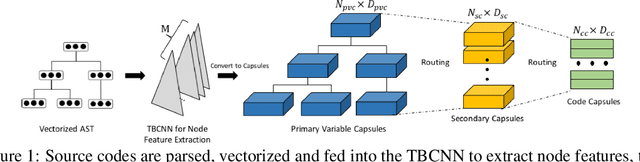

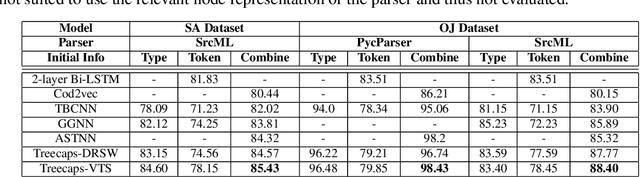

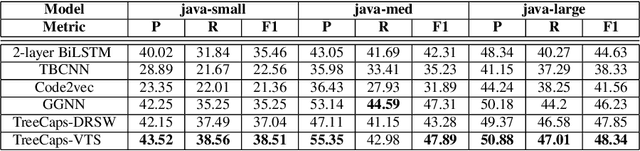

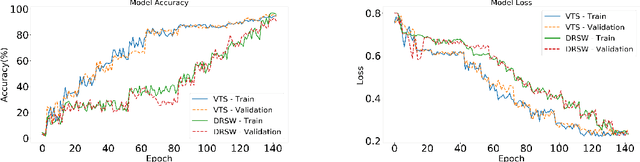

Recently program learning techniques have been proposed to process source code based on syntactical structures (e.g., Abstract Syntax Trees) and/or semantic information (e.g., Dependency Graphs). Although graphs may be better at capturing various viewpoints of code semantics than trees, constructing graph inputs from code needs static code semantic analysis that may not be accurate and introduces noise during learning. Although syntax trees are precisely defined according to the language grammar and easier to construct and process than graphs, previous tree-based learning techniques have not been able to learn semantic information from trees to achieve better accuracy than graph-based techniques. We propose a new learning technique, named TreeCaps, by fusing together capsule networks with tree-based convolutional neural networks, to achieve learning accuracy higher than existing graph-based techniques while it is based only on trees. TreeCaps introduces novel variable-to-static routing algorithms into the capsule networks to compensate for the loss of previous routing algorithms. Aside from accuracy, we also find that TreeCaps is the most robust to withstand those semantic-preserving program transformations that change code syntax without modifying the semantics. Evaluated on a large number of Java and C/C++ programs, TreeCaps models outperform prior deep learning models of program source code, in terms of both accuracy and robustness for program comprehension tasks such as code functionality classification and function name prediction

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge