TransSleep: Transitioning-aware Attention-based Deep Neural Network for Sleep Staging

Mar 22, 2022

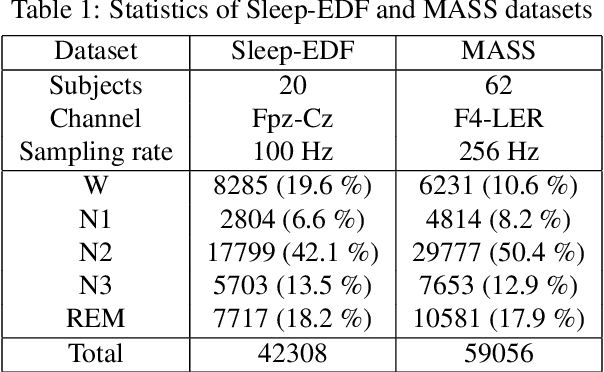

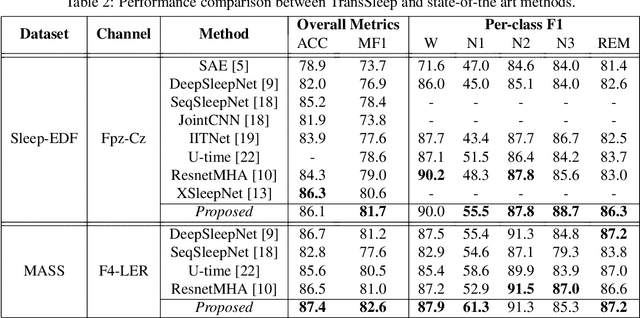

Sleep staging is essential for sleep assessment and plays a vital role as a health indicator. Many recent studies have devised machine learning and deep learning architectures for sleep staging. However, two key challenges hinder the practical use of these architectures: effectively capturing salient waveforms in sleep signals and correctly classifying confusing stages in transitioning epochs. In this study, we propose a novel deep neural network structure, TransSleep, that captures distinctive local temporal patterns and distinguishes confusing stages using two auxiliary tasks. In particular, TransSleep adopts three modules: an attention-based multi-scale feature extractor that captures salient waveforms; a stage-confusion estimator with a novel auxiliary task, epoch-level stage classification, that estimates confidence scores for identifying confusing stages; and a context encoder with the other novel auxiliary task, stage-transition detection, that represents contextual relationships across neighboring epochs. TransSleep achieves state-of-the-art performance in automatic sleep staging on two publicly available datasets, Sleep-EDF and MASS, which supports its validity. Furthermore, we performed ablation studies to analyze our results from different perspectives. Based on our overall results, we believe that TransSleep has immense potential to provide new insights into deep learning-based sleep staging.
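The two auxiliary tasks named in the abstract can be illustrated with a minimal sketch. The abstract does not specify how transition labels or confidence scores are computed, so everything below is an assumption made for illustration: `transition_targets` marks an epoch as transitioning when its stage differs from either neighbor, and `confidence_score` uses the maximum softmax probability of epoch-level logits as a stand-in for the confidence estimate. Both function names are hypothetical and are not taken from the TransSleep code.

```python
import math

def transition_targets(stages):
    """Binary targets for a stage-transition detection task.

    Assumption: an epoch counts as "transitioning" (1) when its stage
    differs from the previous or the next epoch; otherwise it is 0.
    """
    targets = []
    for i, stage in enumerate(stages):
        differs_prev = i > 0 and stages[i - 1] != stage
        differs_next = i < len(stages) - 1 and stages[i + 1] != stage
        targets.append(1 if (differs_prev or differs_next) else 0)
    return targets

def confidence_score(logits):
    """Maximum softmax probability over epoch-level stage logits.

    Assumption: a low value flags a potentially confusing epoch.
    """
    peak = max(logits)  # subtract the peak for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    return max(exps) / sum(exps)

# Hypothetical hypnogram fragment, one label per epoch.
hypnogram = ["W", "W", "N1", "N2", "N2", "N2", "REM"]
targets = transition_targets(hypnogram)
print(targets)  # [0, 1, 1, 1, 0, 1, 1]

# Uniform logits over five stages give the lowest possible confidence.
print(confidence_score([0.0] * 5))  # approximately 0.2
```

Under these assumptions, epochs adjacent to a stage change receive a positive transition target, and epochs whose logits are spread evenly across stages receive a low confidence score. The actual model may define both quantities differently.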
