Transformers to Predict the Applicability of Symbolic Integration Routines

Paper and Code

Oct 31, 2024

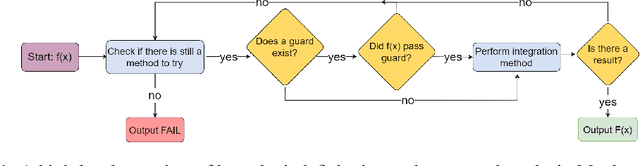

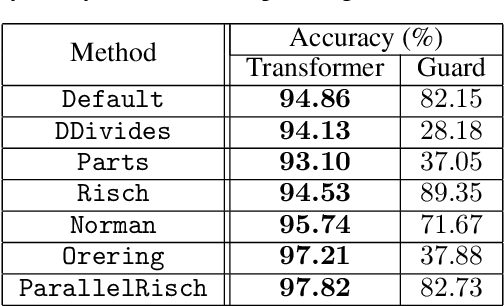

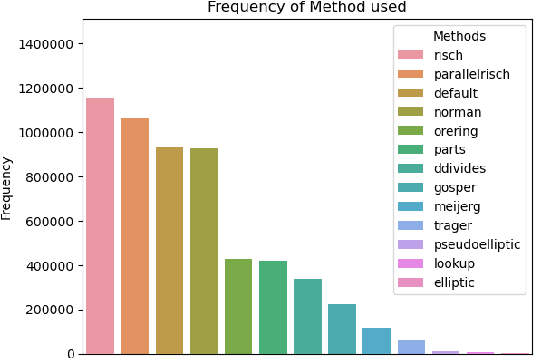

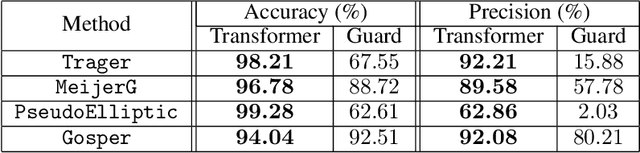

Symbolic integration is a fundamental problem in mathematics: we consider how machine learning may be used to optimise this task in a Computer Algebra System (CAS). We train transformers that predict whether a particular integration method will be successful, and compare against the existing human-made heuristics (called guards) that perform this task in a leading CAS. We find the transformer can outperform these guards, gaining up to 30% accuracy and 70% precision. We further show that the inference time of the transformer is inconsequential which shows that it is well-suited to include as a guard in a CAS. Furthermore, we use Layer Integrated Gradients to interpret the decisions that the transformer is making. If guided by a subject-matter expert, the technique can explain some of the predictions based on the input tokens, which can lead to further optimisations.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge