Transformer-CNN: Fast and Reliable tool for QSAR

Paper and Code

Oct 21, 2019

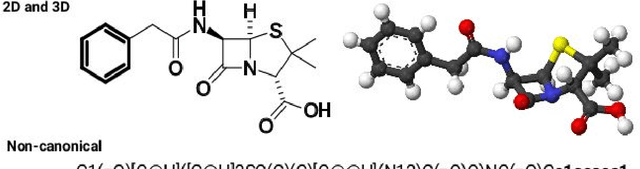

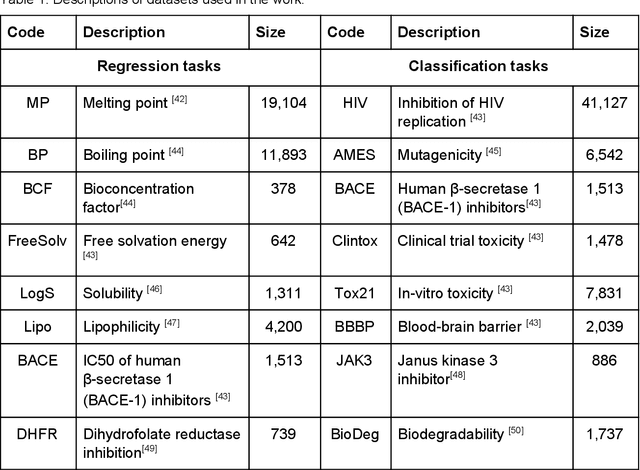

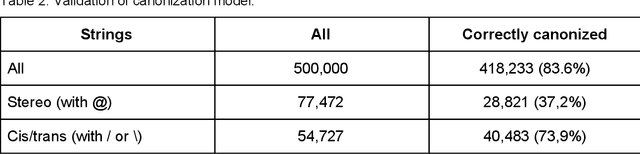

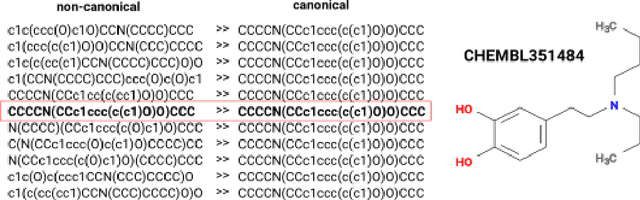

We present SMILES embeddings derived from the internal encoder state of a Transformer[1] model trained to canonicalize SMILES as a Seq2Seq problem. Using a CharNN[2] architecture on top of these embeddings yields higher-quality QSAR/QSPR models on diverse benchmark datasets, including both regression and classification tasks. The proposed Transformer-CNN method uses SMILES augmentation for both training and inference, so each prediction rests on an internal consensus over several SMILES representations of the same molecule. Together, augmentation and transfer learning based on the embeddings allow the method to give good results on small datasets. We discuss the reasons for this effectiveness and outline future directions for developing the method. The source code and the embeddings are available at https://github.com/bigchem/transformer-cnn, and the OCHEM[3] environment (https://ochem.eu) hosts an online implementation.
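The consensus step at inference can be illustrated with a minimal sketch. It assumes you already have several equivalent SMILES strings for one molecule (in practice these could come from a cheminformatics toolkit such as RDKit, which can emit randomized SMILES) and some per-string model. The names `consensus_predict`, `consensus_classify`, and the toy model below are hypothetical illustrations, not the Transformer-CNN API.

```python
from statistics import mean, pstdev
from typing import Callable, Sequence, Tuple


def consensus_predict(
    smiles_variants: Sequence[str],
    predict_one: Callable[[str], float],
) -> Tuple[float, float]:
    """Hypothetical helper: run a per-SMILES model on every equivalent
    SMILES string of one molecule and average the outputs.

    Returns (mean prediction, population std. dev.); the spread across
    variants can serve as a rough confidence signal.
    """
    if not smiles_variants:
        raise ValueError("need at least one SMILES variant")
    preds = [predict_one(s) for s in smiles_variants]
    return mean(preds), pstdev(preds)


def consensus_classify(
    smiles_variants: Sequence[str],
    predict_proba: Callable[[str], float],
    threshold: float = 0.5,
) -> Tuple[int, float]:
    """Hypothetical helper for classification: average the predicted
    probabilities over variants, then apply a decision threshold."""
    p, _ = consensus_predict(smiles_variants, predict_proba)
    return int(p >= threshold), p


if __name__ == "__main__":
    # Three valid SMILES strings for ethanol.
    variants = ["CCO", "OCC", "C(O)C"]
    # Hypothetical stand-in model: NOT a real QSAR model, it only shows
    # that different strings for one molecule can give different outputs.
    toy_model = lambda s: 0.1 * len(s)
    print(consensus_predict(variants, toy_model))
```

Averaging over variants smooths out the sensitivity of a string-based model to the particular atom ordering a SMILES happens to use, which is the intuition behind basing predictions on a consensus rather than a single canonical string.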
