Transformation-Invariant Network for Few-Shot Object Detection in Remote Sensing Images

Mar 13, 2023

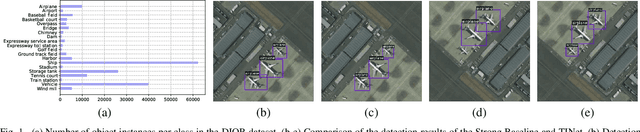

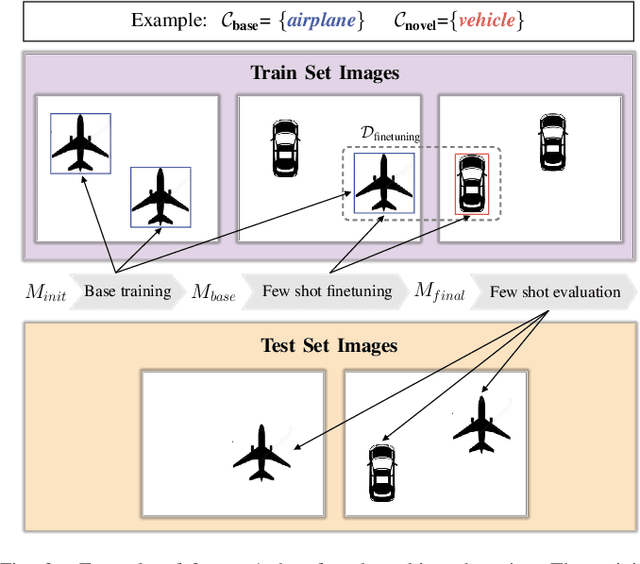

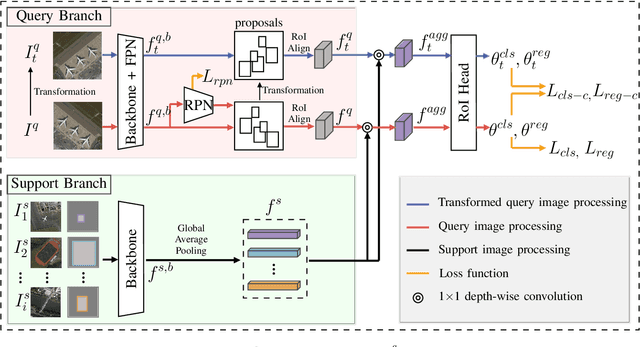

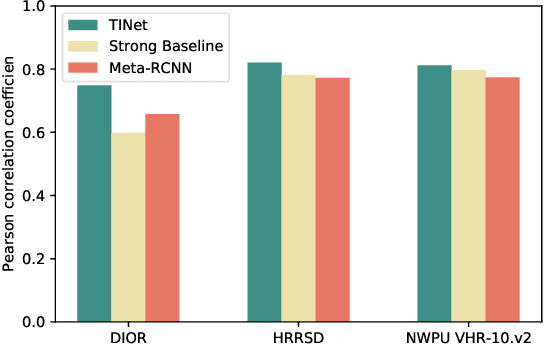

Object detection in remote sensing images relies on a large amount of labeled data for training. The steady growth of new categories and class imbalance make exhaustive annotation unscalable. Few-shot object detection (FSOD) tackles this issue by meta-learning on seen base classes and then fine-tuning on novel classes with few labeled samples. However, object scale and orientation vary particularly widely in remote sensing images, which poses challenges to existing FSOD methods. To tackle these challenges, we first propose to integrate a feature pyramid network and use prototype features to highlight query features, improving upon existing FSOD methods. We refer to the modified FSOD method as a Strong Baseline, which is demonstrated to perform significantly better than the original baselines. To improve robustness to orientation variation, we further propose a transformation-invariant network (TINet) that makes the network invariant to geometric transformations. Extensive experiments on three widely used remote sensing object detection datasets, i.e., NWPU VHR-10.v2, DIOR, and HRRSD, demonstrate the effectiveness of the proposed method. Finally, we reproduce multiple FSOD methods for remote sensing images to create an extensive benchmark for follow-up works.
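
The idea of using prototype features to highlight query features can be illustrated with a minimal sketch. The abstract does not specify the exact operation, so the code below assumes one common choice in meta-learning detectors: a class prototype is the mean of the support feature vectors, and the query feature map is rescaled channel-wise by that prototype. The function names `class_prototype` and `highlight_query` are hypothetical, and the toy numbers are illustrative only.

```python
from typing import List

Vector = List[float]

def class_prototype(support_features: List[Vector]) -> Vector:
    """Average the support feature vectors of one class into a prototype.

    Assumption: mean pooling over support samples; the paper may differ.
    """
    n = len(support_features)
    dim = len(support_features[0])
    return [sum(f[c] for f in support_features) / n for c in range(dim)]

def highlight_query(query_map: List[List[Vector]], prototype: Vector) -> List[List[Vector]]:
    """Scale each location of an H x W x C query map channel-wise by the prototype.

    Assumption: channel-wise multiplication as the highlighting operation.
    """
    return [
        [[v * p for v, p in zip(cell, prototype)] for cell in row]
        for row in query_map
    ]

# Two support samples of one class, each a 3-channel feature vector.
support = [[1.0, 0.0, 2.0], [3.0, 0.0, 0.0]]
proto = class_prototype(support)

# A 1 x 2 query feature map with 3 channels per location.
query = [[[1.0, 5.0, 1.0], [0.5, 2.0, 4.0]]]
highlighted = highlight_query(query, proto)
```

In this toy case, the second channel is zero in every support sample, so it is suppressed in the query map, while channels that are active in the support set are amplified.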
