Training and Validating a Treatment Recommender with Partial Verification Evidence


Jun 10, 2024

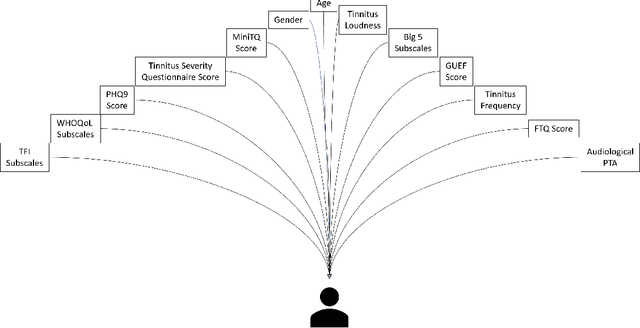

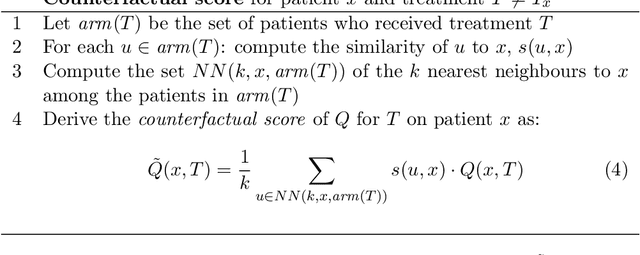

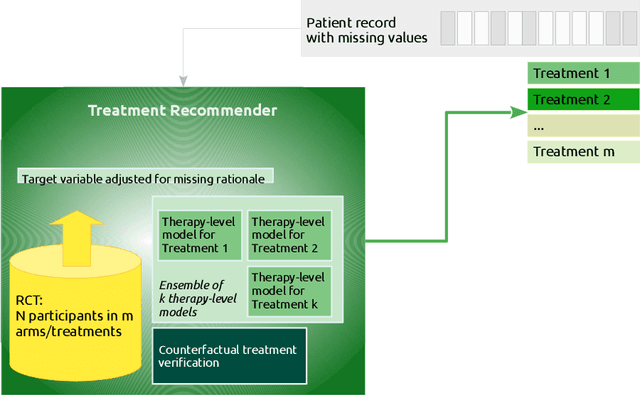

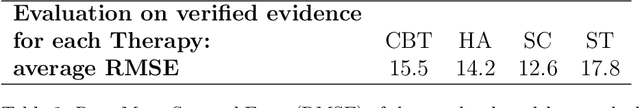

Current clinical decision support systems (DSS) are trained and validated on observational data from the target clinic. This is problematic for treatments that have been validated in a randomized clinical trial (RCT) but not yet introduced in any clinic. In this work, we report on a method for training and validating a DSS using RCT data. The key challenges we address concern missingness: a missing rationale for treatment assignment (the assignment is random), and missing verification evidence, since the effectiveness of a treatment for a patient can only be verified (ground truth) for the treatments that were actually assigned to that patient. We use data from a multi-armed RCT that investigated the effectiveness of single and combination treatments for 240+ tinnitus patients recruited and treated in 5 clinical centers. To deal with the 'missing rationale' challenge, we re-model the target variable (outcome) to suppress the effect of the randomly assigned treatment and to control for the effect of treatment in general. Our methods are also robust to missing values in features and to a small number of patients per RCT arm. We deal with 'missing verification evidence' by using counterfactual treatment verification, which compares the effectiveness of the DSS recommendations with that of the RCT assignments when the two are aligned versus not aligned. We demonstrate that our approach leverages the RCT data for learning and verification by showing that the DSS suggests treatments that improve the outcome. The results are limited by the small number of patients per treatment; while our ensemble is designed to mitigate this effect, the predictive performance of the methods is affected by the small size of the data. We provide a basis for establishing decision-support routines for treatments that have been tested in RCTs but not yet deployed clinically.
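The aligned versus not-aligned comparison can be illustrated with a minimal sketch. This is one plausible reading of the idea, not the authors' implementation: it assumes each patient record holds the randomly assigned treatment, the treatment the DSS would have recommended, and a numeric outcome where higher means more improvement. The function name `aligned_vs_not_aligned`, the treatment labels, and the toy values are all hypothetical.

```python
from statistics import mean

def aligned_vs_not_aligned(records):
    """Split patients by whether the RCT assignment matches the DSS
    recommendation and return the mean outcome of each group.

    records: iterable of (assigned, recommended, outcome) tuples.
    Returns (mean_aligned, mean_not_aligned); a group with no
    patients yields None instead of a mean.
    """
    aligned = [o for a, r, o in records if a == r]
    not_aligned = [o for a, r, o in records if a != r]
    return (
        mean(aligned) if aligned else None,
        mean(not_aligned) if not_aligned else None,
    )

# Hypothetical toy data (assumption): outcome is an improvement score,
# higher is better; "A", "B", "AB" are made-up treatment arms.
toy = [
    ("A", "A", 4.0),   # assignment matches recommendation
    ("B", "A", 1.0),   # assignment differs from recommendation
    ("A", "B", 2.0),
    ("B", "B", 6.0),
    ("AB", "A", 3.0),
]

aligned_mean, not_aligned_mean = aligned_vs_not_aligned(toy)
print(aligned_mean, not_aligned_mean)
```

Under this reading, a higher mean outcome in the aligned group than in the not-aligned group would be consistent with the DSS suggesting treatments that improve the outcome. With few patients per arm, as the abstract notes, such a difference would need to be interpreted cautiously.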
