Towards Standardizing Reinforcement Learning Approaches for Stochastic Production Scheduling

Paper and Code

Apr 16, 2021

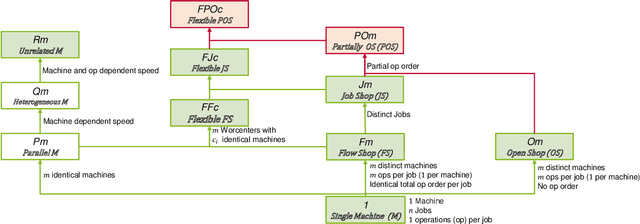

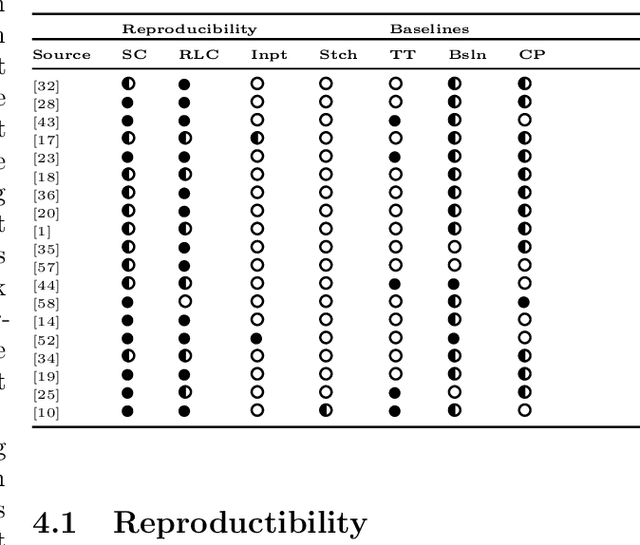

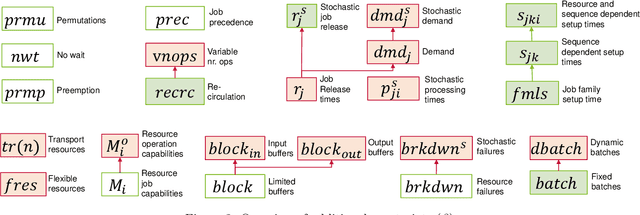

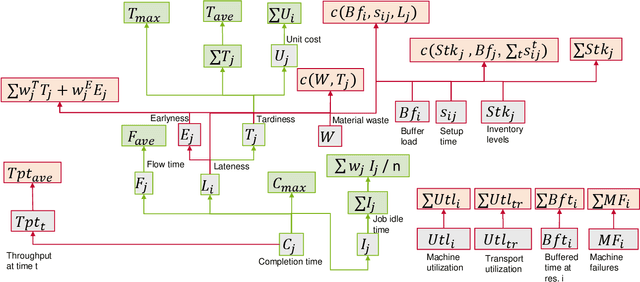

Recent years have seen growing interest in using machine learning, particularly reinforcement learning (RL), for production scheduling problems of varying complexity. The general approach is to formulate the scheduling problem as a Markov Decision Process (MDP) and then train an RL agent on a simulation that implements the MDP. Because existing studies rely on simulations, some of them complex, for which the code is unavailable, the experiments presented are hard to reproduce accurately, and in the case of stochastic environments impossible. Furthermore, there is a vast array of RL designs to choose from. To make RL methods widely applicable in production scheduling and to demonstrate their strengths for industry, standardized model descriptions (of both the production setup and the RL design) and a standardized validation scheme are prerequisites. Our contribution is threefold. First, we standardize the description of production setups used in RL studies based on established nomenclature. Second, we classify RL design choices from existing publications. Third, we propose recommendations for a validation scheme focusing on reproducibility and sufficient benchmarking.
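
To make the reproducibility concern concrete, the following is a minimal sketch of a stochastic scheduling simulation written as an MDP. It is not taken from the paper: the class `SingleMachineEnv`, the function `run_episode`, the uniform noise model, the reward definition, and the shortest-processing-time rule standing in for a trained agent are all assumptions chosen for illustration. The point it shows is that a stochastic run can only be repeated exactly when the simulation code and the random seed are both available.

```python
import random


class SingleMachineEnv:
    """Hypothetical toy MDP: choose which waiting job one machine processes next."""

    def __init__(self, nominal_times, noise=0.2, seed=0):
        self.nominal_times = list(nominal_times)
        self.noise = noise
        # Private, seeded RNG: the only source of randomness in the simulation.
        self.rng = random.Random(seed)
        self.reset()

    def reset(self):
        # State: the tuple of job indices still waiting to be processed.
        self.waiting = list(range(len(self.nominal_times)))
        self.clock = 0.0
        return tuple(self.waiting)

    def step(self, action):
        # Action: position (within the waiting list) of the job to start next.
        job = self.waiting.pop(action)
        # Assumed noise model: actual duration varies uniformly around nominal.
        factor = self.rng.uniform(1 - self.noise, 1 + self.noise)
        self.clock += self.nominal_times[job] * factor
        # Assumed reward: negative completion time of the job just finished.
        reward = -self.clock
        done = not self.waiting
        return tuple(self.waiting), reward, done


def run_episode(seed, noise=0.2):
    """Run one episode with a fixed dispatching rule in place of an RL agent."""
    env = SingleMachineEnv([3.0, 1.0, 2.0], noise=noise, seed=seed)
    state = env.reset()
    total, done = 0.0, False
    while not done:
        # Shortest nominal processing time first.
        action = min(range(len(state)), key=lambda i: env.nominal_times[state[i]])
        state, reward, done = env.step(action)
        total += reward
    return total


if __name__ == "__main__":
    print(run_episode(seed=42) == run_episode(seed=42))  # same seed, same return
    print(run_episode(seed=1), run_episode(seed=2))      # different seeds differ
```

In this sketch, two runs with the same seed give identical returns, while runs with different seeds generally do not, which is why reporting results over several documented seeds matters when benchmarking in stochastic environments.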
