Towards Responsible AI for Financial Transactions

Paper and Code

Jun 06, 2022

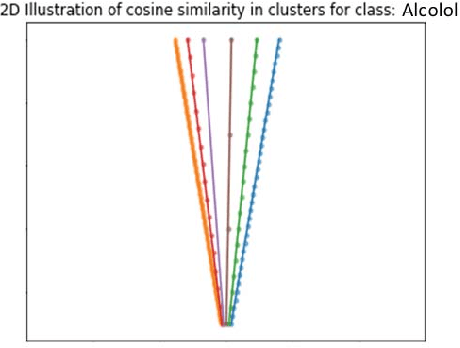

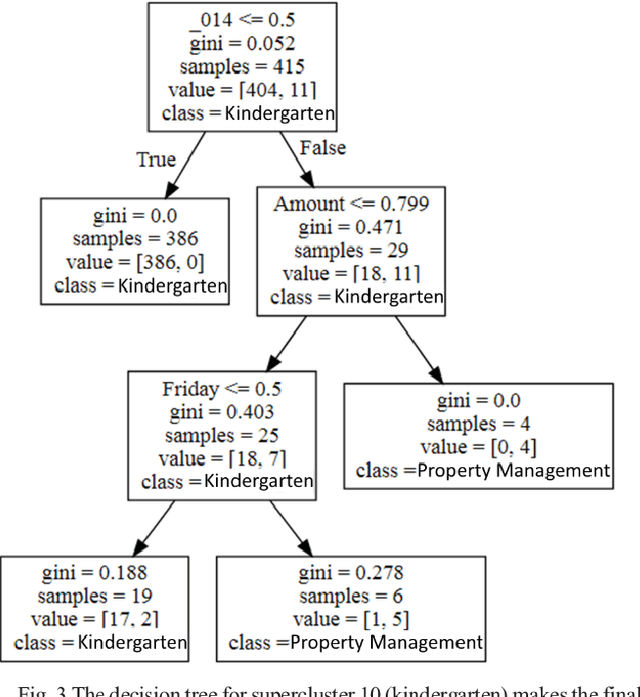

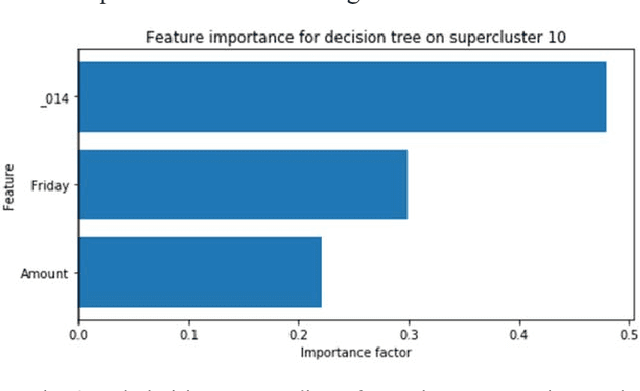

The application of AI in finance is increasingly dependent on the principles of responsible AI. These principles - explainability, fairness, privacy, accountability, transparency and soundness form the basis for trust in future AI systems. In this study, we address the first principle by providing an explanation for a deep neural network that is trained on a mixture of numerical, categorical and textual inputs for financial transaction classification. The explanation is achieved through (1) a feature importance analysis using Shapley additive explanations (SHAP) and (2) a hybrid approach of text clustering and decision tree classifiers. We then test the robustness of the model by exposing it to a targeted evasion attack, leveraging the knowledge we gained about the model through the extracted explanation.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge