Towards interpreting ML-based automated malware detection models: a survey


Jan 15, 2021

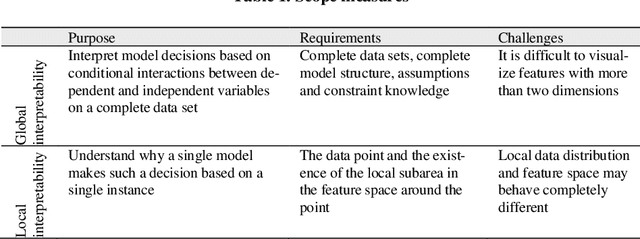

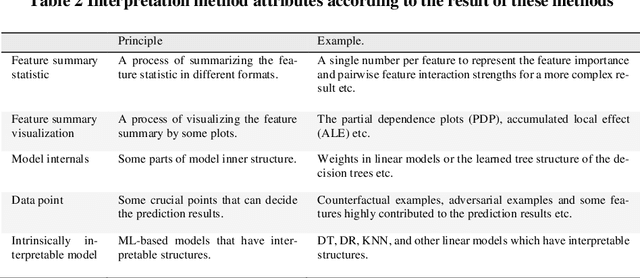

Malware is increasingly threatening, and detectors based on traditional signature-based analysis are no longer adequate for current malware detection. Recently, models based on machine learning (ML) have been developed to predict unknown malware variants and reduce human effort. However, most existing ML models are black boxes, which makes their prediction results hard to trust; they therefore need further interpretation before they can be deployed effectively in the wild. This paper aims to examine and categorize the existing research on the interpretability of ML-based malware detectors. We first introduce the principles, attributes, evaluation indicators, and taxonomy of general ML interpretability, and then give a detailed, grouped comparison of previous work on general ML model interpretability. We then investigate interpretation methods for malware detection, addressing the importance of interpreting malware detectors, the challenges faced by this field, solutions for mitigating these challenges, and a new taxonomy for classifying state-of-the-art work on malware detection interpretability from recent years. The highlight of our survey is a new taxonomy of malware detection interpretation methods, built on the common taxonomy summarized by previous research in the general field. In addition, we are the first to evaluate state-of-the-art approaches by interpretation method attributes to generate a final score, offering insight into quantifying interpretability. By summarizing the results of recent research, we hope our work can provide suggestions for researchers interested in the interpretability of ML-based malware detection models.
