Towards Interpretable Ensemble Learning for Image-based Malware Detection


Jan 13, 2021

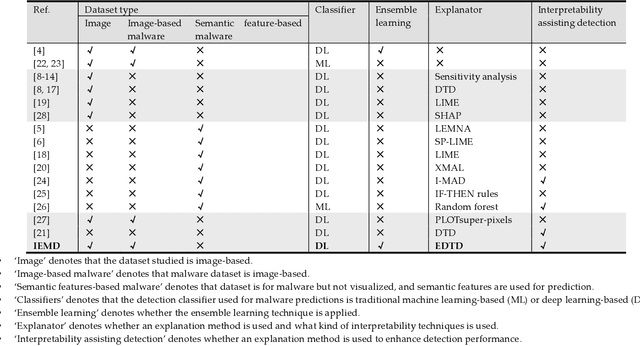

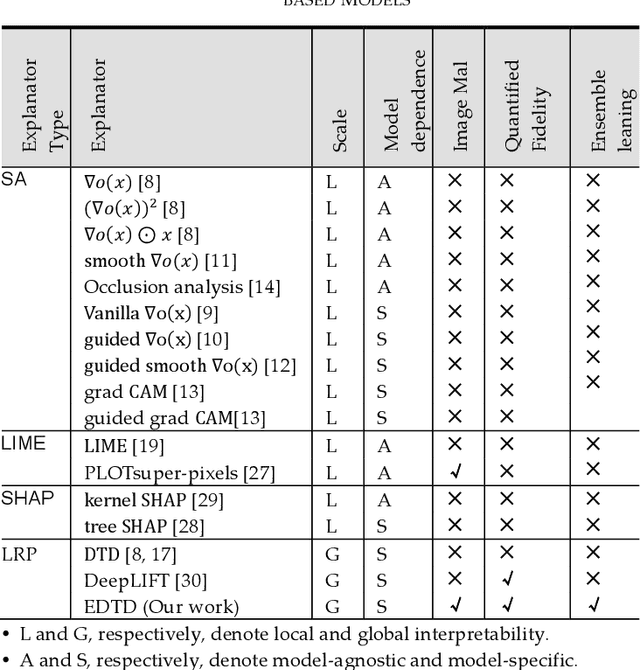

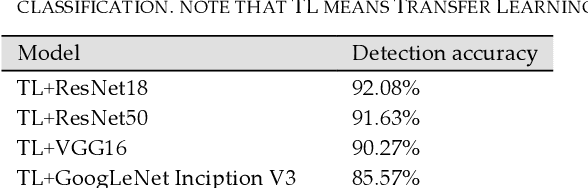

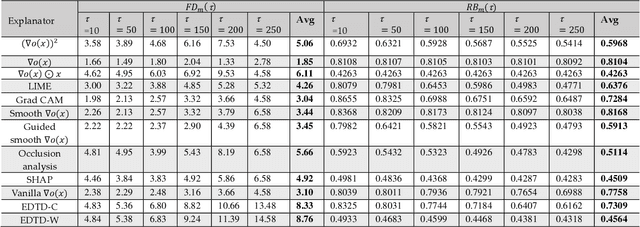

Deep learning (DL) models for image-based malware detection achieve high prediction accuracy, but their limited interpretability hinders widespread adoption in security and safety-critical domains. This paper aims to design an Interpretable Ensemble learning approach for image-based Malware Detection (IEMD). We first propose a Selective Deep Ensemble Learning-based (SDEL) detector and then design an Ensemble Deep Taylor Decomposition (EDTD) approach, which provides pixel-level explanations of the SDEL detector's outputs. Furthermore, we develop formulas for calculating the fidelity, robustness, and expressiveness of pixel-level heatmaps in order to assess the quality of EDTD explanations. Building on EDTD explanations, we develop a novel Interpretable Dropout approach (IDrop), which establishes IEMD by training the SDEL detector. Experimental results show that EDTD produces better explanations than previous explanation methods for image-based malware detection. In addition, IEMD achieves a detection accuracy of up to 99.87% while providing high-quality interpretations of its prediction results. Moreover, the interpretability of IEMD increases as detection accuracy increases during the construction of IEMD. This consistency suggests that IDrop can mitigate the tradeoff between model interpretability and detection accuracy.
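The abstract does not give the paper's fidelity formula, so the following is only an illustrative sketch of one common way to probe the fidelity of a pixel-level heatmap: a deletion test that blanks the pixels the heatmap ranks as most relevant and measures how much the detector's score drops. It is a generic technique, not the EDTD definition. The function name `deletion_fidelity`, the toy scorer, and all parameters are hypothetical.

```python
from typing import Callable, List

Image = List[List[float]]


def deletion_fidelity(
    image: Image,
    heatmap: Image,
    score_fn: Callable[[Image], float],
    k: int,
    fill: float = 0.0,
) -> float:
    """Return the score drop after blanking the k most relevant pixels.

    A larger drop suggests the heatmap points at pixels the model relies on.
    This is a generic deletion test, assumed here for illustration only.
    """
    # Rank pixel coordinates by heatmap relevance, highest first.
    coords = [(r, c) for r in range(len(image)) for c in range(len(image[0]))]
    coords.sort(key=lambda rc: heatmap[rc[0]][rc[1]], reverse=True)

    # Copy the image and overwrite the top-k pixels with the fill value.
    perturbed = [row[:] for row in image]
    for r, c in coords[:k]:
        perturbed[r][c] = fill

    return score_fn(image) - score_fn(perturbed)


# Toy stand-in for a detector: its score depends only on the two diagonal pixels.
def toy_score(img: Image) -> float:
    return 0.5 * img[0][0] + 0.5 * img[1][1]


image = [[1.0, 0.2], [0.3, 1.0]]
faithful_heatmap = [[0.9, 0.0], [0.0, 0.8]]    # highlights the pixels the scorer uses
unfaithful_heatmap = [[0.0, 0.9], [0.8, 0.0]]  # highlights pixels the scorer ignores

drop_faithful = deletion_fidelity(image, faithful_heatmap, toy_score, k=2)
drop_unfaithful = deletion_fidelity(image, unfaithful_heatmap, toy_score, k=2)
```

In this toy setup the faithful heatmap removes exactly the pixels the scorer depends on, so its score drop is larger than that of the unfaithful heatmap. A real evaluation would replace `toy_score` with the trained detector's malware-class score.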
