Towards Improving the Trustworthiness of Hardware based Malware Detector using Online Uncertainty Estimation


Mar 21, 2021

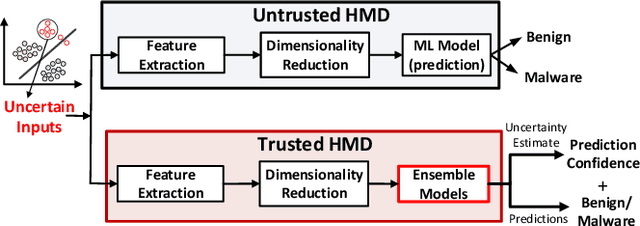

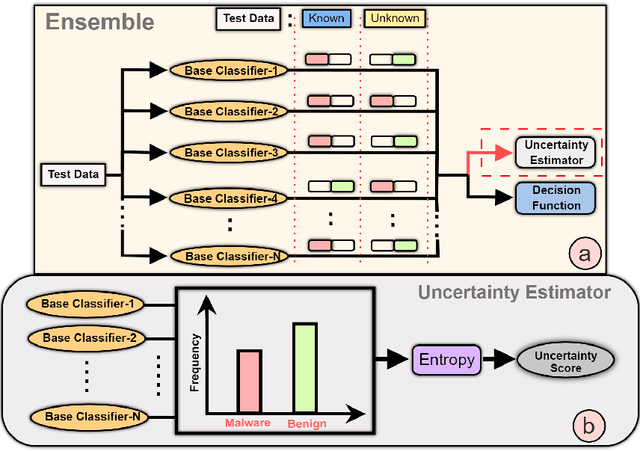

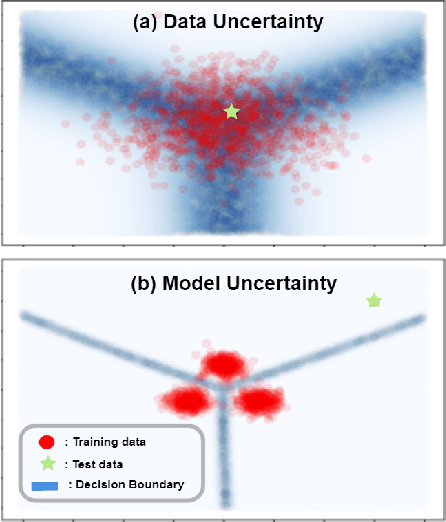

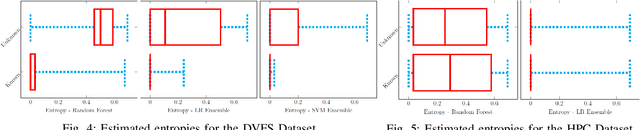

Hardware-based Malware Detectors (HMDs) using Machine Learning (ML) models have shown promise in detecting malicious workloads. However, the conventional black-box ML approach used in these HMDs fails to address uncertain predictions, including those made on zero-day malware. The ML models used in HMDs are agnostic to the uncertainty that determines whether the model "knows what it knows," which severely undermines their trustworthiness. We propose an ensemble-based approach that quantifies the uncertainty in predictions made by an HMD's ML models when it encounters a workload different from the ones it was trained on. We test our approach on two different HMDs that have been proposed in the literature. We show that the proposed uncertainty estimator can detect >90% of unknown workloads for the Power-management based HMD, and conclude that the overlapping benign and malware classes undermine the trustworthiness of the Performance Counter-based HMD.
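
To make the idea of ensemble-based uncertainty concrete, here is a minimal sketch in Python. It is not the paper's implementation: the abstract does not say which uncertainty measure is used, so this example assumes the predictive entropy of the averaged ensemble output. The function names, the 0.5-bit threshold, and the sample probabilities are all hypothetical.

```python
import math


def ensemble_uncertainty(member_probs):
    """Return (mean malware probability, predictive entropy in bits).

    member_probs: one malware probability per ensemble member for a
    single workload sample. Entropy of the averaged prediction is an
    assumed uncertainty measure, not one confirmed by the abstract.
    """
    p = sum(member_probs) / len(member_probs)
    entropy = 0.0
    for q in (p, 1.0 - p):
        if q > 0.0:  # skip zero terms, since 0 * log(0) is taken as 0
            entropy -= q * math.log2(q)
    return p, entropy


def classify(member_probs, entropy_threshold=0.5):
    """Label a sample, or flag it as uncertain when entropy is high.

    entropy_threshold is a hypothetical value chosen for illustration.
    """
    p, h = ensemble_uncertainty(member_probs)
    if h > entropy_threshold:
        return "uncertain"
    return "malware" if p >= 0.5 else "benign"


if __name__ == "__main__":
    # Members agree: low entropy, so the prediction is accepted.
    print(classify([0.98, 0.99, 0.97]))
    # Members disagree: high entropy, so the sample is flagged.
    print(classify([0.9, 0.1, 0.6, 0.4]))
```

When the members agree, the averaged probability sits near 0 or 1 and the entropy is low; when they disagree, as one might expect on a workload unlike the training data, the average drifts toward 0.5 and the entropy approaches 1 bit, so the sample is flagged rather than silently classified.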
