Towards Generalized and Explainable Long-Range Context Representation for Dialogue Systems


Oct 12, 2022

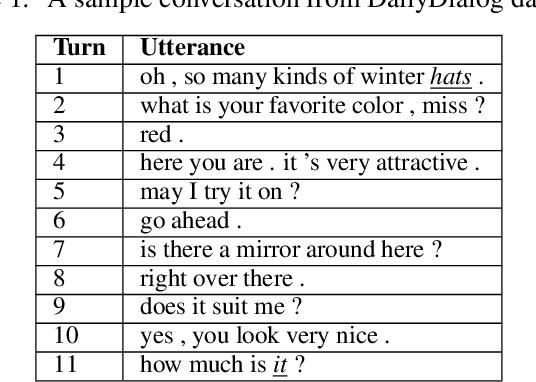

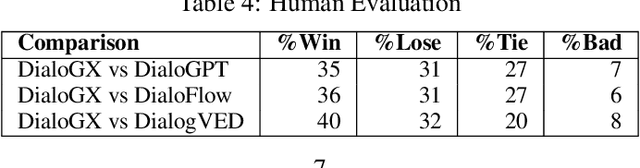

Context representation is crucial to both dialogue understanding and generation. Currently, the most popular method for dialogue context representation is to concatenate the last $k$ utterances as context and use a large transformer-based model to generate the next response. However, this method may not be ideal for conversations containing long-range dependencies. In this work, we propose DialoGX, a novel encoder-decoder-based framework for conversational response generation with a generalized and explainable context representation that can look beyond the last $k$ utterances, which makes the method adaptive to conversations with long-range dependencies. Our proposed solution is based on two key ideas: a) computing a dynamic representation of the entire context, and b) finding the previous utterances that are relevant for generating the next response. Instead of the last $k$ utterances, DialoGX uses as input the concatenation of the dynamic context vector and the encodings of the most relevant utterances, which enables it to represent conversations of any length in a compact and generalized fashion. We conduct our experiments on DailyDialog, a popular open-domain chit-chat dataset. DialoGX achieves performance comparable to that of state-of-the-art models on automated metrics. We also justify our context representation through the lens of psycholinguistics and show that the relevance scores of previous utterances agree well with human cognition, which makes DialoGX explainable as well.
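The two key ideas can be illustrated with a small sketch. It is not the DialoGX architecture, which the abstract does not specify. It is a toy illustration under stated assumptions: utterances are already encoded as fixed-size vectors, the dynamic context is an exponential moving average, and relevance is a softmax over dot products with that context. All function names and the `decay` and `top_m` parameters are hypothetical.

```python
import math
from typing import List, Tuple

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def dynamic_context(utterances: List[Vector], decay: float = 0.5) -> Vector:
    """Fold the whole conversation into one vector.

    Assumption: an exponential moving average stands in for whatever
    learned update the real model uses.
    """
    context = [0.0] * len(utterances[0])
    for u in utterances:
        context = [decay * c + (1.0 - decay) * x for c, x in zip(context, u)]
    return context


def relevance_scores(query: Vector, history: List[Vector]) -> List[float]:
    """Softmax over dot products: one score per previous utterance."""
    logits = [dot(query, h) for h in history]
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(l - peak) for l in logits]
    total = sum(exps)
    return [e / total for e in exps]


def build_decoder_input(
    utterances: List[Vector], top_m: int = 2
) -> Tuple[Vector, List[int], List[float]]:
    """Concatenate the context vector with the top_m most relevant utterances."""
    context = dynamic_context(utterances)
    scores = relevance_scores(context, utterances)
    ranked = sorted(range(len(utterances)), key=lambda i: scores[i], reverse=True)
    selected = sorted(ranked[:top_m])  # restore conversational order
    features = list(context)
    for i in selected:
        features.extend(utterances[i])
    return features, selected, scores


# Toy conversation: four utterances encoded as 2-dimensional vectors.
toy_history = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0], [0.9, 0.1]]
features, selected, scores = build_decoder_input(toy_history, top_m=2)
```

In this sketch the input size depends only on the vector dimension and `top_m`, not on the number of turns, which mirrors the claim that conversations of any length can be represented compactly. The per-utterance `scores` are what one would inspect for explainability.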
