Towards Cross-Cultural Analysis using Music Information Dynamics


Nov 24, 2021

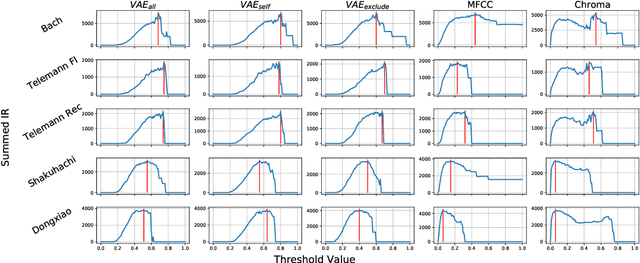

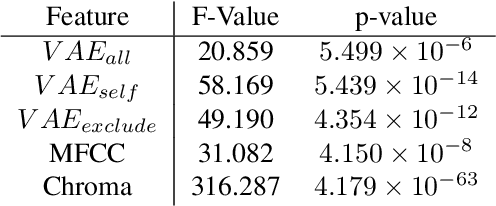

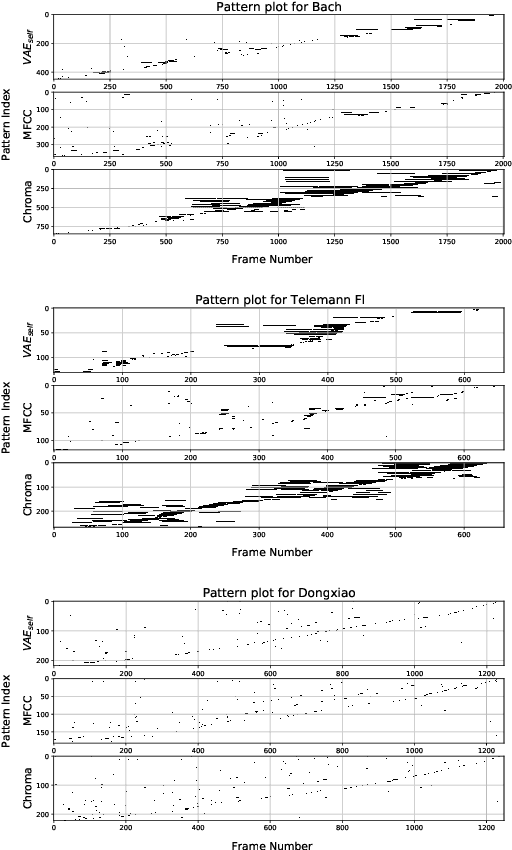

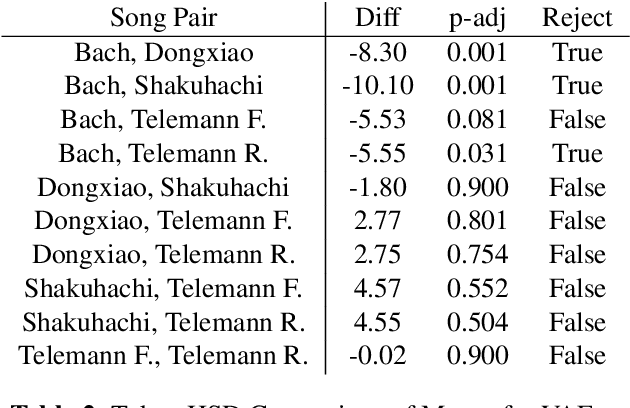

A music piece is comprehended both hierarchically, from sonic events to melodies, and sequentially, in the form of repetition and variation. Music from different cultures establishes different aesthetics through different stylistic conventions on these two aspects. We propose a framework for quantitatively comparing music from different cultures along these two aspects. The framework is based on a Music Information Dynamics model, the Variable Markov Oracle (VMO), extended with variational representation learning of audio. A variational autoencoder (VAE) is trained to map audio fragments into a latent representation, which is then fed into a VMO. The VMO learns a clustering of the latent representation via a threshold that maximizes the information rate of the quantized latent representation sequence. This threshold effectively controls the sensitivity of the predictive step to acoustic changes, which determines the framework's ability to track repetitions on longer time scales. The approach thus characterizes the overall information content of a musical signal at each level of acoustic sensitivity. Our findings under this framework show that sensitivity to subtle acoustic changes is higher for East-Asian musical traditions, while the Western works exhibit longer motivic structures at higher thresholds of difference in the latent space. This suggests that a profile of information content, analyzed as a function of the level of acoustic detail, can serve as a possible cultural characteristic.
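The threshold selection described in the abstract can be illustrated with a rough sketch. This is not the authors' VMO implementation: it assumes a greedy distance-threshold quantizer and uses an LZ78-style compression gain (bits saved per frame relative to a memoryless code) as a stand-in for the information rate. All function names are hypothetical, and the synthetic `demo_latents` sequence stands in for the output of a trained VAE encoder.

```python
import numpy as np
from math import log2


def quantize(latents, threshold):
    """Greedy quantizer (an assumption, not the VMO clustering):
    reuse the first centroid within `threshold`, else open a new symbol."""
    centroids, symbols = [], []
    for z in latents:
        for k, c in enumerate(centroids):
            if np.linalg.norm(z - c) <= threshold:
                symbols.append(k)
                break
        else:
            centroids.append(z)
            symbols.append(len(centroids) - 1)
    return symbols


def lz78_phrase_count(symbols):
    """Number of phrases in an LZ78 incremental parse of the sequence."""
    phrases, current, count = set(), (), 0
    for s in symbols:
        current += (s,)
        if current not in phrases:
            phrases.add(current)
            count += 1
            current = ()
    return count + (1 if current else 0)  # count a trailing partial phrase


def compression_gain(symbols):
    """Information-rate proxy in bits per frame: memoryless code length
    minus an approximate LZ78 code length, divided by sequence length."""
    n = len(symbols)
    if n == 0:
        return 0.0
    k = len(set(symbols))
    c = lz78_phrase_count(symbols)
    memoryless_bits = n * log2(k)
    lz_bits = c * (log2(c) + log2(k))
    return (memoryless_bits - lz_bits) / n


def best_threshold(latents, thresholds):
    """Sweep candidate thresholds and keep the one with the highest proxy."""
    scores = [compression_gain(quantize(latents, t)) for t in thresholds]
    return thresholds[int(np.argmax(scores))], scores


def demo_latents(n_frames=300, seed=0):
    """Synthetic 2-D 'latents': three clusters visited in a repeating order."""
    rng = np.random.default_rng(seed)
    centres = np.array([[0.0, 0.0], [5.0, 0.0], [0.0, 5.0]])
    pattern = centres[np.arange(n_frames) % 3]
    return pattern + rng.uniform(-0.05, 0.05, size=pattern.shape)


best, scores = best_threshold(demo_latents(), [1e-6, 0.5, 100.0])
```

On this toy input, a very small threshold treats almost every frame as a new symbol, so nothing repeats, while a very large one merges everything into a single symbol, so there is nothing left to predict. Only the intermediate threshold exposes the repeating pattern, which mirrors the trade-off between acoustic detail and trackable repetition that the abstract describes.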
