Towards a Decentralized, Autonomous Multiagent Framework for Mitigating Crop Loss


Jan 07, 2019

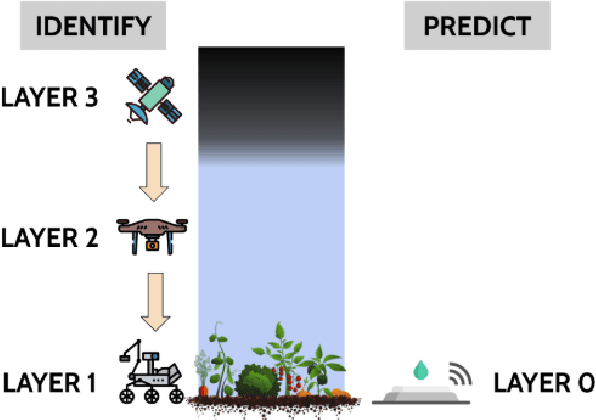

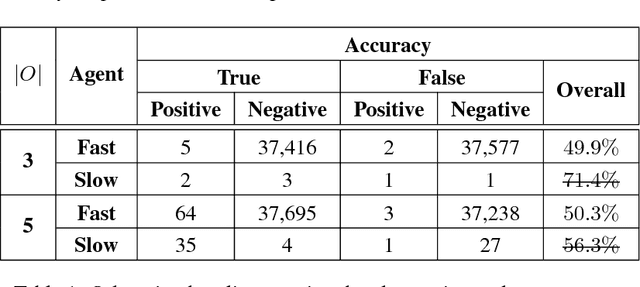

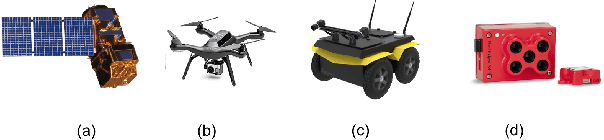

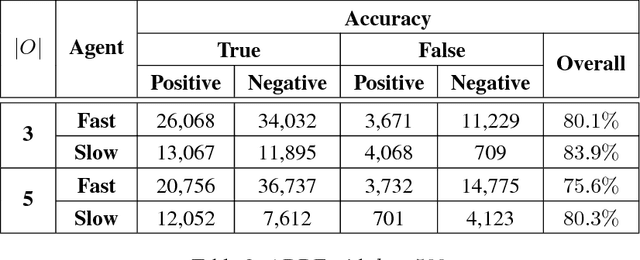

We propose a generalized decision-theoretic system for a heterogeneous team of autonomous agents tasked with online identification of phenotypically expressed stress in crop fields. The system employs four distinct types of agents, one for each of four available sensor modalities: satellites (Layer 3), uninhabited aerial vehicles (Layer 2), uninhabited ground vehicles (Layer 1), and static ground-level sensors (Layer 0). Layers 3, 2, and 1 are tasked with performing image processing at the resolution available to their sensor modality and, together with data generated by Layer 0 sensors, identifying erroneous differences that arise over time. Our goal is to limit the use of the more computationally and temporally expensive subsequent layers. Therefore, from Layer 3 to Layer 1, each layer investigates only areas that previous layers have identified as potentially afflicted by stress. We introduce a reinforcement learning technique based on Perkins' Monte Carlo Exploring Starts for a generalized Markovian model for each layer's decision problem, and label the system the Agricultural Distributed Decision Framework (ADDF). As our domain is real-world and online, we illustrate implementations of the two major components of our system: a clustering-based image processing methodology and a two-layer POMDP implementation.
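The coarse-to-fine escalation described in the abstract, where each layer inspects only the areas flagged by the layer before it, can be illustrated with a minimal sketch. Everything here is an assumption made for illustration and is not taken from the paper: the grid-cell representation of the field, the `cascade` function, and the toy detectors stand in for the image processing and decision models that ADDF actually uses.

```python
from typing import Callable, Dict, Iterable, List, Set, Tuple

Cell = Tuple[int, int]             # hypothetical (row, col) index of a field patch
Detector = Callable[[Cell], bool]  # True if this layer suspects stress in the cell


def cascade(
    cells: Iterable[Cell],
    detectors: List[Tuple[str, Detector]],
) -> Tuple[Set[Cell], Dict[str, int]]:
    """Run detectors from coarsest to finest layer.

    Each detector only sees the cells flagged by the previous one, so the
    more expensive layers inspect progressively fewer cells.
    Returns the final flagged cells and how many cells each layer inspected.
    """
    candidates = set(cells)
    inspected: Dict[str, int] = {}
    for name, detect in detectors:
        inspected[name] = len(candidates)
        candidates = {c for c in candidates if detect(c)}
    return candidates, inspected


# Toy 4x4 field with made-up detectors (assumptions, not real sensor models).
field = [(r, c) for r in range(4) for c in range(4)]
layers = [
    ("satellite", lambda cell: cell[0] < 2),        # Layer 3: coarse, cheap
    ("uav", lambda cell: cell[1] < 2),              # Layer 2: finer
    ("ugv", lambda cell: cell[0] == cell[1]),       # Layer 1: finest, costly
]

flagged, inspected = cascade(field, layers)
print(flagged)
print(inspected)
```

In this toy run the ground vehicle inspects only the cells that survived both the satellite and the aerial pass, which is the cost-saving behavior the abstract describes; the learned per-layer policies are outside the scope of this sketch.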
