Toward Automated Classroom Observation: Multimodal Machine Learning to Estimate CLASS Positive Climate and Negative Climate


May 19, 2020

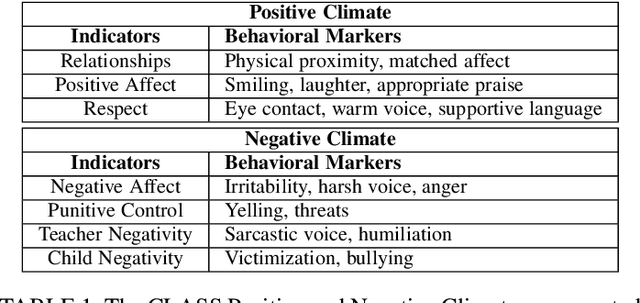

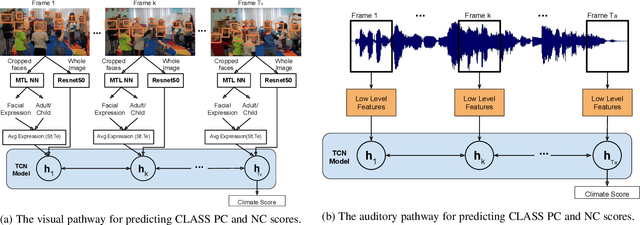

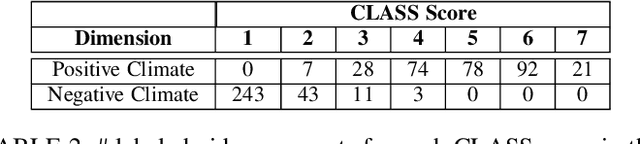

In this work we present a multimodal, machine-learning-based system, which we call ACORN, to analyze videos of school classrooms for the Positive Climate (PC) and Negative Climate (NC) dimensions of the CLASS observation protocol, which is widely used in educational research. ACORN uses convolutional neural networks to analyze spectral audio features, the faces of teachers and students, and the pixels of each image frame, and then integrates this information over time using Temporal Convolutional Networks. The audiovisual version of ACORN produces PC and NC predictions that have Pearson correlations of $0.55$ and $0.63$ with ground-truth scores provided by expert CLASS coders on the UVA Toddler dataset (cross-validation on $n=300$ 15-min video segments), and a purely auditory version of ACORN predicts PC and NC with correlations of $0.36$ and $0.41$ on the MET dataset (test set of $n=2000$ video segments). These numbers are similar to the inter-coder reliability of human coders. Finally, using Graph Convolutional Networks we make early strides (AUC=$0.70$) toward predicting the specific moments (45-90 sec clips) when the PC is particularly weak or strong. Our findings inform the design of automatic classroom observation and also more general video activity recognition and summary recognition systems.
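The two evaluation metrics quoted above, Pearson correlation against expert scores and AUC for weak/strong clip detection, can be illustrated with a minimal sketch. This is not the authors' evaluation code: the function names `pearson_r` and `roc_auc` and all of the toy values are assumptions made up for illustration, and none of the numbers come from the UVA Toddler or MET datasets.

```python
import numpy as np


def pearson_r(predicted, reference):
    """Pearson correlation between model predictions and reference scores."""
    p = np.asarray(predicted, dtype=float)
    r = np.asarray(reference, dtype=float)
    p_centered = p - p.mean()
    r_centered = r - r.mean()
    denom = np.sqrt((p_centered ** 2).sum() * (r_centered ** 2).sum())
    return float((p_centered * r_centered).sum() / denom)


def roc_auc(scores, labels):
    """AUC as the probability that a random positive example is scored
    higher than a random negative one (ties count as one half)."""
    s = np.asarray(scores, dtype=float)
    y = np.asarray(labels, dtype=bool)
    pos, neg = s[y], s[~y]
    diff = pos[:, None] - neg[None, :]  # all positive/negative score pairs
    wins = (diff > 0).sum() + 0.5 * (diff == 0).sum()
    return float(wins / (len(pos) * len(neg)))


if __name__ == "__main__":
    # Hypothetical toy data: per-segment scores from a human coder and a model.
    coder_scores = [4.0, 5.5, 3.0, 6.0, 4.5]
    model_scores = [4.2, 5.0, 3.5, 5.8, 4.0]
    print("Pearson r:", pearson_r(model_scores, coder_scores))

    # Hypothetical toy data: per-clip model scores and binary labels
    # (1 = clip marked as particularly strong, 0 = particularly weak).
    clip_scores = [0.9, 0.4, 0.7, 0.2, 0.6]
    clip_labels = [1, 0, 1, 0, 0]
    print("AUC:", roc_auc(clip_scores, clip_labels))
```

The pairwise AUC computation is quadratic in the number of clips, which is fine for a small illustration; a rank-based formulation would be the usual choice for larger test sets.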
