Toward a Framework for Adaptive Impedance Control of an Upper-limb Prosthesis

Paper and Code

Sep 11, 2022

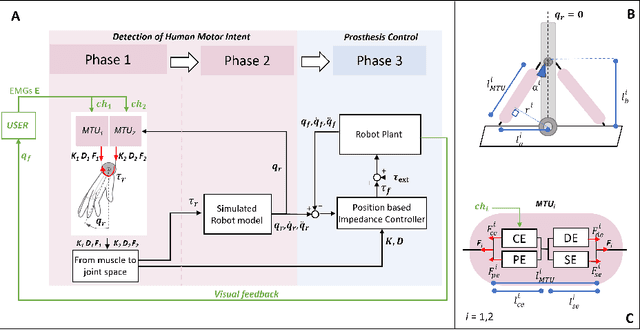

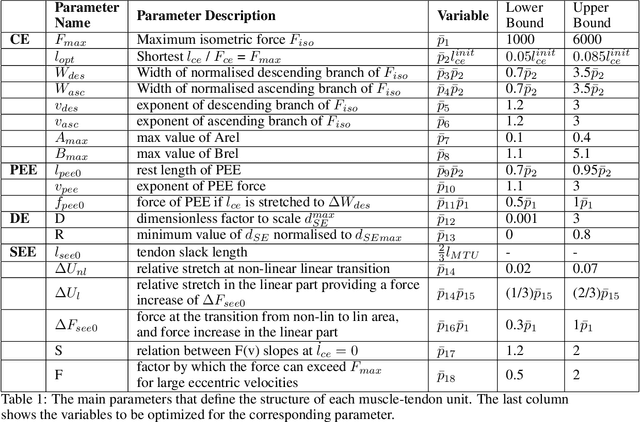

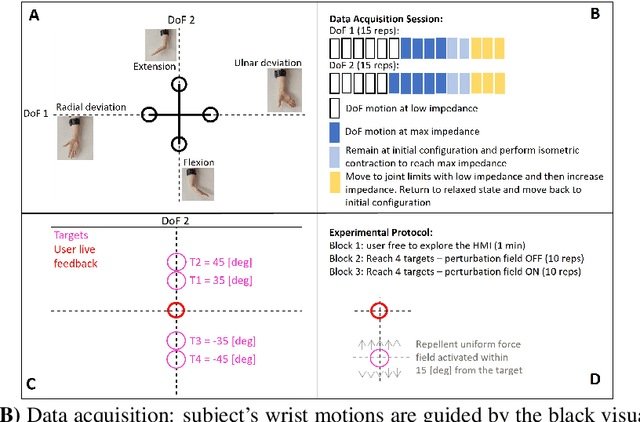

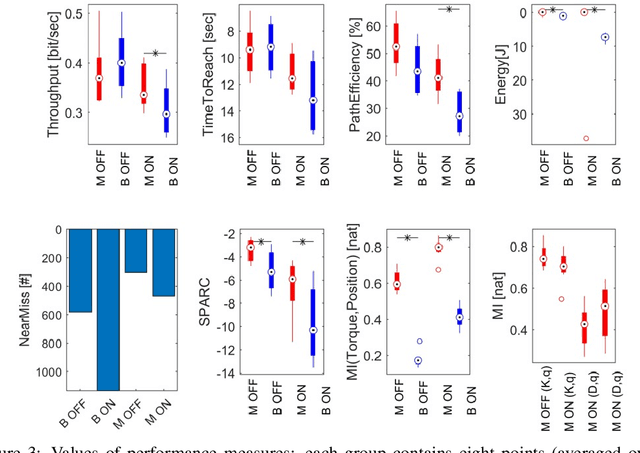

This paper describes a novel framework for a human-machine interface that can be used to control an upper-limb prosthesis. The objective is to estimate the human's motor intent from noisy surface electromyography signals and to execute the motor intent on the prosthesis (i.e., the robot) even in the presence of previously unseen perturbations. The framework includes muscle-tendon models for each degree of freedom, a method for learning the parameter values of models used to estimate the user's motor intent, and a variable impedance controller that uses the stiffness and damping values obtained from the muscle models to adapt the prosthesis' motion trajectory and dynamics. We experimentally evaluate our framework in the context of able-bodied humans using a simulated version of the human-machine interface to perform reaching tasks that primarily actuate one degree of freedom in the wrist, and consider external perturbations in the form of a uniform force field that pushes the wrist away from the target. We demonstrate that our framework provides the desired adaptive performance, and substantially improves performance in comparison with a data-driven baseline.
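To make the role of the variable impedance controller concrete, the following is a minimal sketch rather than the authors' implementation. It assumes a single wrist degree of freedom modeled as a pure inertia, a standard spring-damper impedance law, and a constant perturbing torque standing in for the uniform force field. The function names, the inertia, and the stiffness and damping values are all hypothetical; in the framework described above, stiffness and damping would come from the muscle-tendon models driven by surface electromyography rather than being fixed by hand.

```python
def impedance_torque(q, qd, q_des, qd_des, stiffness, damping):
    """Spring-damper impedance law for one joint (assumed form, not from the paper)."""
    return stiffness * (q_des - q) + damping * (qd_des - qd)


def simulate_reach(stiffness, damping, q_target=0.5, perturbation=-0.2,
                   inertia=0.01, dt=0.001, steps=5000):
    """Simulate a 1-DOF reach under a constant perturbing torque.

    All parameter values are illustrative assumptions:
    q_target in rad, perturbation in N*m, inertia in kg*m^2, dt in s.
    Returns the joint angle after steps * dt seconds.
    """
    q, qd = 0.0, 0.0
    for _ in range(steps):
        tau = impedance_torque(q, qd, q_target, 0.0, stiffness, damping)
        qdd = (tau + perturbation) / inertia  # joint acceleration
        qd += qdd * dt                        # semi-implicit Euler step
        q += qd * dt
    return q


# Hypothetical low-impedance and high-impedance settings.
soft_final = simulate_reach(stiffness=1.0, damping=0.2)
stiff_final = simulate_reach(stiffness=10.0, damping=0.7)

print(f"final angle, low stiffness:  {soft_final:.3f} rad")
print(f"final angle, high stiffness: {stiff_final:.3f} rad")
```

In this simplified model the steady-state offset from the target equals the perturbing torque divided by the stiffness, so raising the stiffness shrinks the error caused by the force field. This is one way to see why adapting stiffness and damping online can help reject perturbations that were not seen beforehand.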
