Top-N: Equivariant set and graph generation without exchangeability


Oct 05, 2021

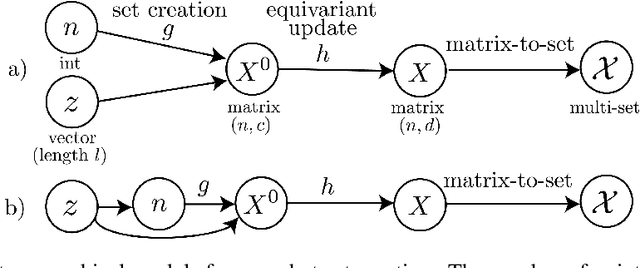

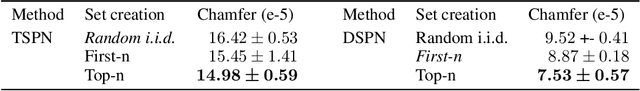

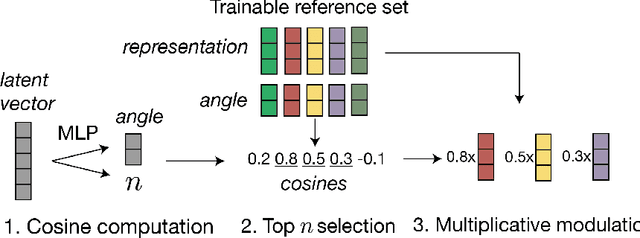

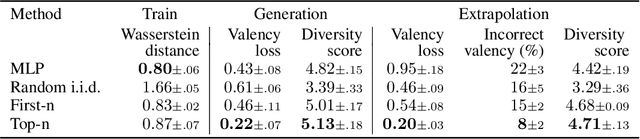

We consider one-shot probabilistic decoders that map a vector-shaped prior to a distribution over sets or graphs. These functions can be integrated into variational autoencoders (VAEs), generative adversarial networks (GANs) or normalizing flows, and have important applications in drug discovery. Set and graph generation is most commonly performed by generating points (and sometimes edge weights) i.i.d. from a normal distribution and processing them along with the prior vector using Transformer layers or graph neural networks. This architecture is designed to generate exchangeable distributions (all permutations of a set are equally likely), but it is hard to train due to the stochasticity of i.i.d. generation. We propose a new definition of equivariance and show that exchangeability is in fact unnecessary in VAEs and GANs. We then introduce Top-n, a deterministic, non-exchangeable set creation mechanism that learns to select the most relevant points from a trainable reference set. Top-n can replace i.i.d. generation in any VAE or GAN; it is easier to train and better captures complex dependencies in the data. Top-n outperforms i.i.d. generation by 15% at SetMNIST reconstruction, generates sets that are 64% closer to the true distribution on a synthetic molecule-like dataset, and is able to generate more diverse molecules when trained on the classical QM9 dataset. With improved foundations in one-shot generation, our algorithm contributes to the design of more effective molecule generation methods.
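To make the contrast between the two set creation mechanisms concrete, the following is a minimal sketch and not the authors' implementation. It assumes each reference point carries a key vector and that relevance is scored by a dot product with the latent vector; the abstract does not specify the scoring rule, and the names `top_n_select`, `iid_generate`, `reference_points` and `reference_keys` are hypothetical. Training of the reference set is omitted entirely.

```python
import random


def iid_generate(n, dim, rng):
    """Baseline: draw n points i.i.d. from a standard normal distribution.

    The output is random, so two calls with the same latent vector
    generally produce different initial sets.
    """
    return [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(n)]


def top_n_select(latent, reference_points, reference_keys, n):
    """Deterministically pick n points from a reference set.

    Assumption (not from the source): each reference point has a key
    vector, and its relevance is the dot product of that key with the
    latent vector. The n highest-scoring points are returned in order
    of decreasing score, with ties broken by index.
    """
    scores = [
        sum(k * z for k, z in zip(key, latent))
        for key in reference_keys
    ]
    order = sorted(range(len(scores)), key=lambda i: (-scores[i], i))
    return [reference_points[i] for i in order[:n]]


# Toy reference set; in a real model both lists would be trainable parameters.
reference_points = [[0.0, 0.0], [1.0, 1.0], [2.0, 2.0], [3.0, 3.0]]
reference_keys = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.5, 0.5]]

latent = [1.0, 0.2]
selected = top_n_select(latent, reference_points, reference_keys, n=2)
sampled = iid_generate(n=2, dim=2, rng=random.Random(0))
```

In this sketch the selected set depends only on the latent vector and the reference set, so the same latent vector always yields the same initial points, whereas the i.i.d. baseline injects fresh noise on every call. In either case the resulting points would then be processed together with the latent vector by Transformer layers or a graph neural network, as the abstract describes.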
