Top-down and Bottom-up Feature Combination for Multi-sensor Attentive Robots

Jul 22, 2013

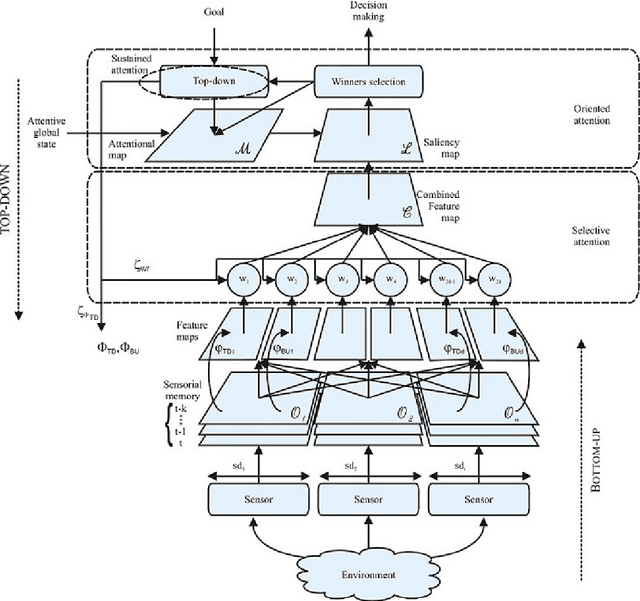

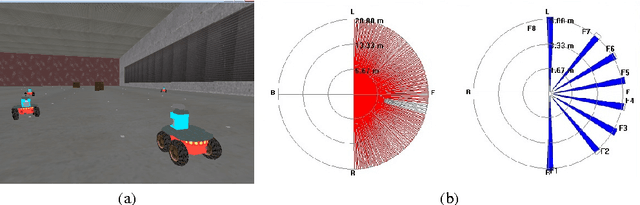

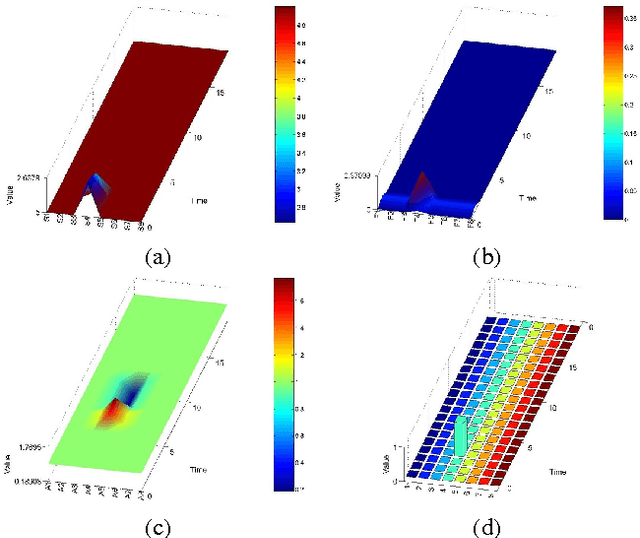

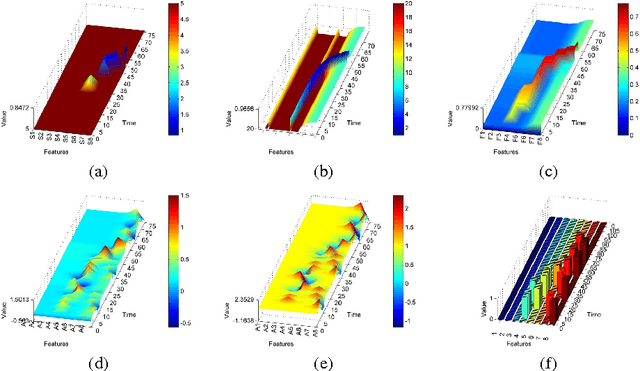

The information available to robots in real tasks is widely distributed in both time and space, requiring the agent to search for relevant data. Humans, who face the same problem when sounds, images, and smells reach their senses in an everyday scene, rely on a natural mechanism: attention. Because vision plays an important role in our daily lives, most attention research has focused on this sensory system, and the same emphasis has carried over to robotics. However, most robotics tasks today do not rely only on visual data, which remain costly. To allow attentive concepts to be used with other robotic sensors commonly employed in tasks such as navigation, self-localization, searching, and mapping, a generic attentional model was previously proposed. In this work, feature mapping functions were designed to build feature maps for this attentive model from range scanner and sonar data. Experiments were performed in a high-fidelity simulated robotics environment, and the results demonstrate the model's ability to handle both salient stimuli and goal-driven attention over multiple features extracted from multiple sensors.
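The abstract does not give the feature mapping functions or the combination rule, so the following is only an illustrative sketch of the general idea, not the authors' model. It assumes that range scanner and sonar readings have already been resampled onto the same angular bins, and it uses two hypothetical feature maps (`local_contrast` and `proximity`) combined by a weighted sum. Equal weights stand in for bottom-up, stimulus-driven attention; task-specific weights stand in for top-down, goal-driven attention.

```python
from typing import Dict, List


def normalize(values: List[float]) -> List[float]:
    """Rescale a feature map to [0, 1]; a flat map becomes all zeros."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0] * len(values)
    return [(v - lo) / (hi - lo) for v in values]


def local_contrast(readings: List[float], radius: int = 2) -> List[float]:
    """Hypothetical feature: difference between a reading and its neighbours' mean."""
    n = len(readings)
    out = []
    for i in range(n):
        window = range(max(0, i - radius), min(n, i + radius + 1))
        neighbours = [readings[j] for j in window if j != i]
        mean = sum(neighbours) / len(neighbours) if neighbours else readings[i]
        out.append(abs(readings[i] - mean))
    return normalize(out)


def proximity(readings: List[float], max_range: float) -> List[float]:
    """Hypothetical feature: close to 1 for near obstacles, 0 at max_range."""
    return [1.0 - min(r, max_range) / max_range for r in readings]


def combine(maps: Dict[str, List[float]], weights: Dict[str, float]) -> List[float]:
    """Weighted average of feature maps (assumed combination rule)."""
    total = sum(weights[name] for name in maps)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    n = len(next(iter(maps.values())))
    return [sum(weights[name] * fmap[i] for name, fmap in maps.items()) / total
            for i in range(n)]


def focus(saliency: List[float]) -> int:
    """Index of the most salient angular bin."""
    return max(range(len(saliency)), key=saliency.__getitem__)


# Made-up readings in metres, one value per shared angular bin.
laser = [4.0, 4.0, 4.0, 1.0, 4.0, 4.0, 4.0, 4.0]
sonar = [3.0, 3.0, 3.0, 3.0, 3.0, 3.0, 0.5, 3.0]

feature_maps = {
    "laser_contrast": local_contrast(laser),
    "sonar_proximity": proximity(sonar, max_range=5.0),
}

# Bottom-up: every feature counts equally.
bottom_up = combine(feature_maps, {"laser_contrast": 1.0, "sonar_proximity": 1.0})
# Top-down: a goal such as "avoid near obstacles" boosts sonar proximity.
top_down = combine(feature_maps, {"laser_contrast": 0.2, "sonar_proximity": 1.0})

print(focus(bottom_up), focus(top_down))
```

With these made-up readings, equal weights pick the bin where the laser scan shows a sharp discontinuity, while the goal-driven weights shift the focus to the bin where the sonar reports the nearest obstacle.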
