The Variational Garrote


Nov 12, 2012

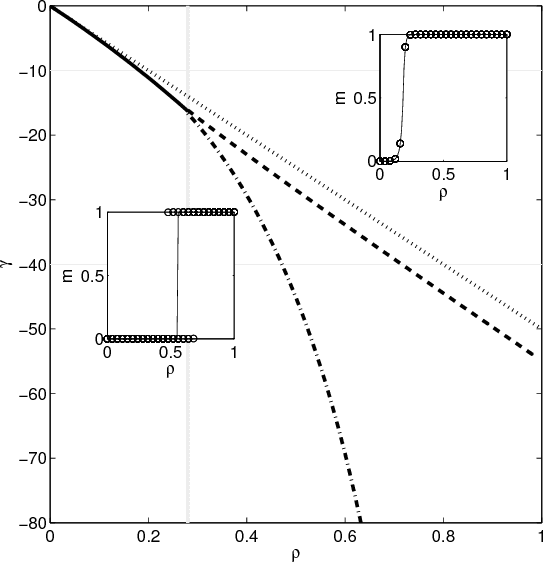

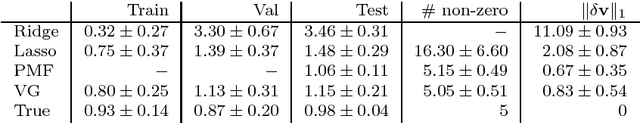

In this paper, we present a new variational method for sparse regression using $L_0$ regularization. The variational parameters appear in the approximate model in a way that is similar to Breiman's Garrote model. We refer to this method as the variational Garrote (VG). We show that the combination of the variational approximation and $L_0$ regularization makes the problem effectively of maximal rank even when the number of samples is small compared to the number of variables. The VG is compared numerically with the Lasso method, ridge regression, and the recently introduced paired mean field method (PMF) (M. Titsias & M. Lázaro-Gredilla, NIPS 2012). Numerical results show that the VG and PMF yield more accurate predictions and reconstruct the true model more accurately than the other methods. The VG is also shown to find correct solutions when the Lasso solution is inconsistent due to large input correlations. Overall, the VG is significantly faster than PMF and tends to perform better as the problems become denser and in problems with strongly correlated inputs. The naive implementation of the VG scales cubically with the number of features. By introducing Lagrange multipliers, we obtain a dual formulation of the problem that scales cubically in the number of samples, but close to linearly in the number of features.
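The scaling claim in the last two sentences has a familiar analogue in ridge regression, one of the baselines named above. The sketch below is not the VG's Lagrange-multiplier dual, which the abstract does not spell out. It only illustrates the general primal/dual trade-off: the same ridge solution can be obtained from a linear system whose size is the number of features or from one whose size is the number of samples. The function names, the regularization strength `lam`, and the synthetic data are all assumptions made for illustration.

```python
import numpy as np

def ridge_primal(X, y, lam):
    """Solve (X^T X + lam*I) w = X^T y.

    The linear system is n_features x n_features, so the solve costs
    roughly cubic time in the number of features.
    """
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(n_features), X.T @ y)

def ridge_dual(X, y, lam):
    """Solve (X X^T + lam*I) a = y, then recover w = X^T a.

    The linear system is n_samples x n_samples, so the solve costs
    roughly cubic time in the number of samples; forming X X^T and
    X^T a is linear in the number of features.
    """
    n_samples = X.shape[0]
    a = np.linalg.solve(X @ X.T + lam * np.eye(n_samples), y)
    return X.T @ a

# Synthetic data with far fewer samples than features (illustrative only).
rng = np.random.default_rng(0)
n_samples, n_features = 20, 500
X = rng.standard_normal((n_samples, n_features))
y = rng.standard_normal(n_samples)

w_primal = ridge_primal(X, y, lam=1.0)
w_dual = ridge_dual(X, y, lam=1.0)

# Both routes give the same weights up to numerical error.
print(np.allclose(w_primal, w_dual))
```

When the number of samples is much smaller than the number of features, the dual route solves a far smaller system, which is the same regime in which the abstract reports the VG's dual formulation to be advantageous.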
