The Twin-System Approach as One Generic Solution for XAI: An Overview of ANN-CBR Twins for Explaining Deep Learning


May 20, 2019

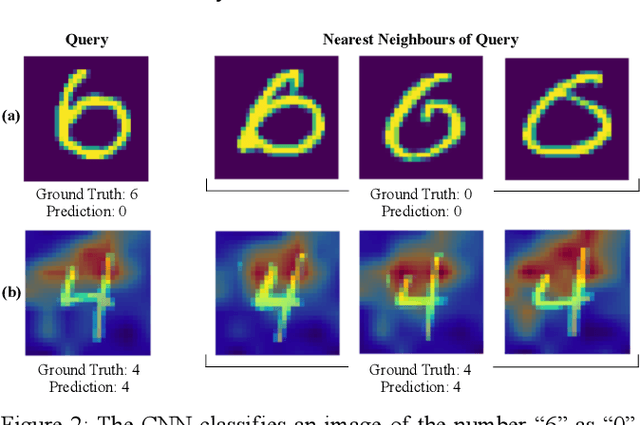

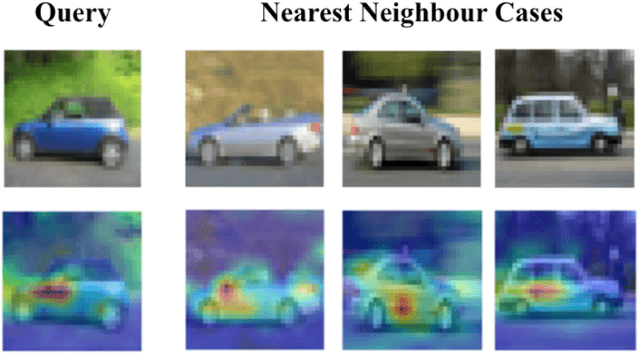

The notion of twin systems is proposed to address the eXplainable AI (XAI) problem, where an uninterpretable black-box system is mapped to a white-box 'twin' that is more interpretable. In this short paper, we overview very recent work that advances a generic solution to the XAI problem, the so-called twin-system approach. The most popular twinning in the literature is that between an Artificial Neural Network (ANN) as a black box and a Case-Based Reasoning (CBR) system as a white box, where the latter acts as an interpretable proxy for the former. We outline how recent work reviving this idea has applied it to deep learning methods. Furthermore, we detail the many fruitful directions in which this work may be taken, such as determining (i) the most accurate feature-weighting methods to use, (ii) the most appropriate deployments for explanatory cases, and (iii) the most useful cases of explanatory value to users.
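To make the twinning idea concrete, here is a minimal Python sketch of how a case-based twin might retrieve an explanatory case for a black-box prediction. It is an illustration under stated assumptions, not the method of any specific paper: the "black box" is a single hand-set logistic unit standing in for a trained network, the feature weighting is a simple weight-times-value contribution score, and all names (`predict`, `contributions`, `nearest_case`, the toy data) are hypothetical.

```python
import math

# Hypothetical stand-in for a trained black box: one logistic unit.
# The second feature has zero weight, so the model ignores it.
WEIGHTS = [2.0, 0.0]
BIAS = -1.0

def predict(x):
    """Class predicted by the stand-in black box (1 if score >= 0, else 0)."""
    score = sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS
    return 1 if score >= 0 else 0

def contributions(x):
    """Per-feature contribution to the score (assumed weighting scheme)."""
    return [w * xi for w, xi in zip(WEIGHTS, x)]

def euclidean(a, b):
    return math.sqrt(sum((ai - bi) ** 2 for ai, bi in zip(a, b)))

def nearest_case(query, case_base, weighted=True):
    """Retrieve the stored case closest to the query.

    With weighted=True, distance is measured between contribution profiles,
    so features the black box ignores do not influence retrieval.
    """
    if weighted:
        q = contributions(query)
        return min(case_base, key=lambda c: euclidean(q, contributions(c)))
    return min(case_base, key=lambda c: euclidean(query, c))

# Toy case base (training cases) and a query to explain.
case_base = [(0.9, 5.0), (0.1, 0.2)]
query = (1.0, 0.0)

twin_case = nearest_case(query, case_base, weighted=True)
plain_case = nearest_case(query, case_base, weighted=False)

print("query prediction:", predict(query))
print("twin explanation:", twin_case, "predicted as", predict(twin_case))
print("unweighted neighbour:", plain_case, "predicted as", predict(plain_case))
```

In this toy setup the weighted retrieval returns a case that the black box classifies the same way as the query, whereas plain Euclidean retrieval is misled by the ignored feature and returns a case from the other class. That difference is why the choice of feature-weighting method matters for how faithfully the twin mirrors the black box.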
