The Sixth Sense with Artificial Intelligence: An Innovative Solution for Real-Time Retrieval of the Human Figure Behind Visual Obstruction


Mar 15, 2019

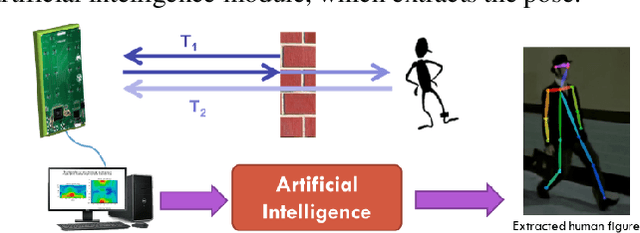

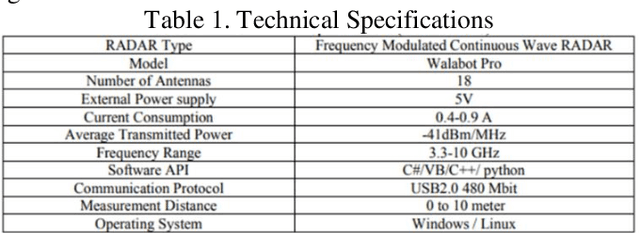

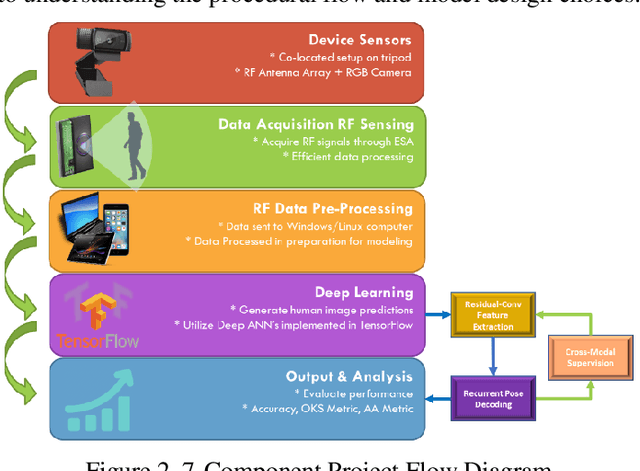

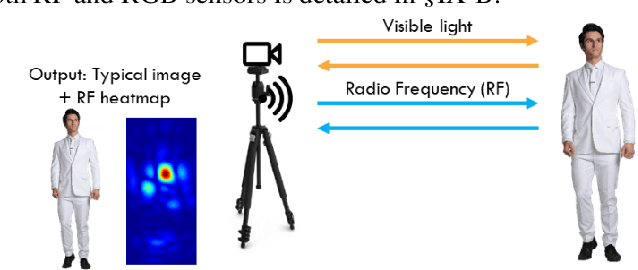

Overcoming the visual barrier and developing "see-through vision" has been one of mankind's long-standing dreams. Visible light cannot travel through opaque obstructions such as walls. Radio Frequency (RF) signals, by contrast, penetrate many common building materials and reflect strongly off the human body. This project presents a novel artificial intelligence methodology that reconstructs the skeletal structure of a human from RF signals, even through visual occlusion. First, video and RF data are collected simultaneously using a co-located setup containing an RGB camera and an RF antenna array transceiver. Next, the RGB video is processed with a Part Affinity Field computer-vision model to generate ground-truth label locations for each keypoint in the human skeleton. Then, a combined deep-learning model consisting of a Residual Convolutional Neural Network, a Region Proposal Network, and a Recurrent Neural Network 1) extracts spatial features from RF images, 2) detects and crops out all people present in the scene, and 3) aggregates information over dozens of time-steps to piece together the various limbs, which reflect signals back to the receiver at different times. A simulator is created to demonstrate the system. The project has applications in medicine, the military, search and rescue, and robotics. During a fire emergency in particular, neither visible light nor infrared thermal imaging can penetrate smoke or fire, but RF can. With over 1 million fires reported in the US per year, this technology could save thousands of lives and prevent tens of thousands of injuries.
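The third step above, aggregating over time because different limbs reflect signal at different times, can be illustrated with a much simpler stand-in than the Recurrent Neural Network the abstract describes. The sketch below is not the project's model: it assumes each frame yields a partial set of keypoints with a confidence score, and it fuses a window of frames with a confidence-weighted average. The function name `aggregate_keypoints`, the `Frame` layout, and the `min_conf` threshold are all hypothetical choices made for this illustration.

```python
from typing import Dict, List, Tuple

# Assumed layout: one frame maps a keypoint name to (x, y, confidence).
# A limb that did not reflect signal back in that frame is simply absent.
Frame = Dict[str, Tuple[float, float, float]]


def aggregate_keypoints(
    frames: List[Frame], min_conf: float = 0.1
) -> Dict[str, Tuple[float, float]]:
    """Fuse partial per-frame detections into one skeleton estimate.

    Each keypoint position is the confidence-weighted mean of every
    detection of that keypoint in the window. This is a hand-written
    stand-in for learned temporal aggregation, not an RNN.
    """
    sums: Dict[str, List[float]] = {}
    for frame in frames:
        for name, (x, y, conf) in frame.items():
            # Skip detections that are too weak to trust (or have zero weight).
            if conf < min_conf or conf <= 0.0:
                continue
            acc = sums.setdefault(name, [0.0, 0.0, 0.0])
            acc[0] += conf * x
            acc[1] += conf * y
            acc[2] += conf
    return {name: (sx / w, sy / w) for name, (sx, sy, w) in sums.items()}


# Three frames, each seeing only part of the body.
window: List[Frame] = [
    {"head": (1.0, 2.0, 0.9)},
    {"left_wrist": (3.0, 4.0, 0.8)},
    {"head": (1.2, 2.0, 0.9), "left_wrist": (3.0, 4.4, 0.2)},
]
skeleton = aggregate_keypoints(window)
```

No single frame in `window` contains both keypoints with high confidence, yet the fused `skeleton` has an estimate for each. A learned recurrent model would replace the fixed averaging rule with weights trained against the camera-derived labels.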
