The Role of Pre-Training in High-Resolution Remote Sensing Scene Classification


Nov 05, 2021

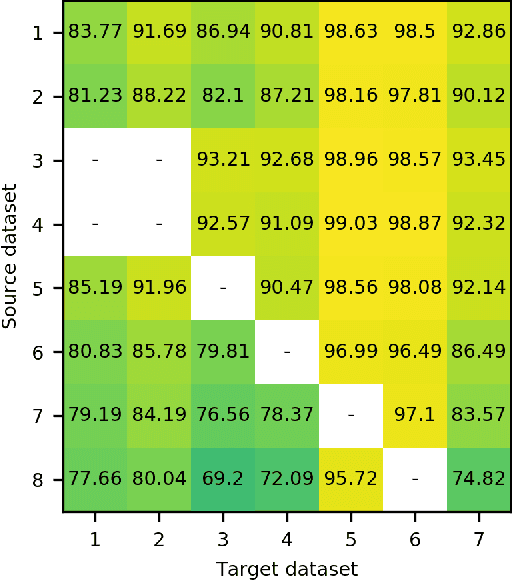

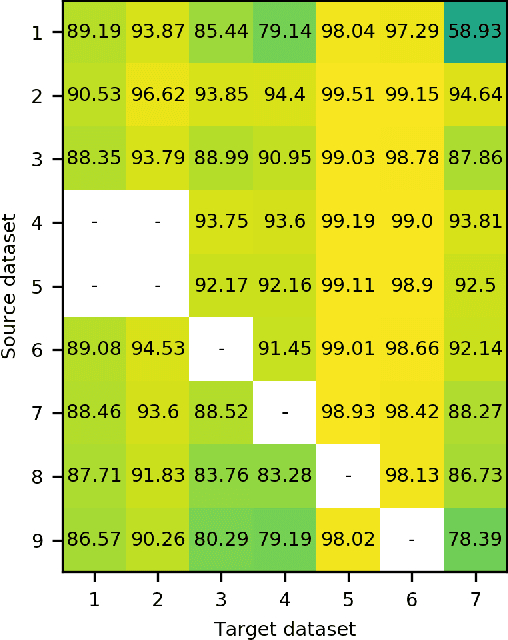

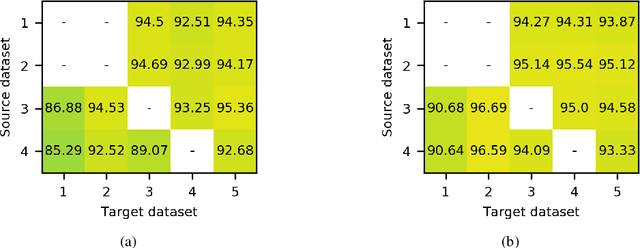

Due to the scarcity of labeled data, using models pre-trained on ImageNet is a de facto standard in remote sensing scene classification. Although several larger high-resolution remote sensing (HRRS) datasets have recently appeared with the goal of establishing new benchmarks, attempts to train models from scratch on these datasets are sporadic. In this paper, we show that training models from scratch on several of the newer datasets yields results comparable to fine-tuning models pre-trained on ImageNet. Furthermore, the representations learned on HRRS datasets transfer to other HRRS scene classification tasks as well as or better than those learned on ImageNet. Finally, we show that in many cases the best representations are obtained through a second round of pre-training on in-domain data, i.e., domain-adaptive pre-training. The source code and pre-trained models are available at https://github.com/risojevicv/RSSC-transfer.
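The three training regimes compared in the abstract differ only in which weights the backbone starts from before the final fine-tuning on the target task. The schematic below is a minimal sketch under stated assumptions, not the authors' code (see the linked repository for that). The `Classifier` stand-in, the `train` callback, the dataset names `"hrrs_source"` / `"hrrs_target"`, and the class counts are all hypothetical. It only tracks the order of training stages and shows the key mechanic: the backbone is carried over while the classification head is replaced to match each new label set.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Classifier:
    # Hypothetical stand-in for real feature-extractor weights.
    backbone: dict[str, float]
    # Size of the classification head (number of scene classes).
    num_classes: int
    # Datasets the weights have been trained on, in order.
    stages: list[str] = field(default_factory=list)


# Hypothetical training routine: updates the model in place on a dataset.
TrainFn = Callable[[Classifier, str], None]


def init_backbone(pretrained_on: Optional[str]) -> Classifier:
    """Random init if pretrained_on is None, else pretend to load weights."""
    stages = [pretrained_on] if pretrained_on else []
    return Classifier(backbone={"w": 0.0}, num_classes=0, stages=stages)


def fine_tune(model: Classifier, dataset: str, num_classes: int,
              train: TrainFn) -> Classifier:
    # Keep (a copy of) the backbone, attach a fresh head sized for the
    # new label set, then train on the given dataset.
    new = Classifier(backbone=dict(model.backbone),
                     num_classes=num_classes,
                     stages=model.stages + [dataset])
    train(new, dataset)
    return new


def from_scratch(target: str, n: int, train: TrainFn) -> Classifier:
    return fine_tune(init_backbone(None), target, n, train)


def imagenet_fine_tune(target: str, n: int, train: TrainFn) -> Classifier:
    return fine_tune(init_backbone("imagenet"), target, n, train)


def domain_adaptive(source: str, n_source: int, target: str, n_target: int,
                    train: TrainFn) -> Classifier:
    # Second round of pre-training on in-domain (HRRS) data, starting from
    # ImageNet weights, followed by fine-tuning on the target task.
    adapted = fine_tune(init_backbone("imagenet"), source, n_source, train)
    return fine_tune(adapted, target, n_target, train)
```

For example, with a no-op `train` callback, `domain_adaptive("hrrs_source", 30, "hrrs_target", 10, lambda m, d: None).stages` is `["imagenet", "hrrs_source", "hrrs_target"]`, while `from_scratch` yields only `["hrrs_target"]`. In a real pipeline, `train` would run an optimizer over the dataset and the backbone would hold actual network weights.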
