The Effect of Communication on Noncooperative Multiplayer Multi-Armed Bandit Problems

Nov 05, 2017

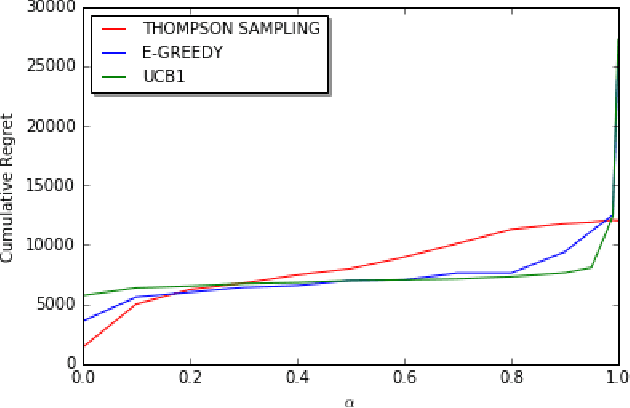

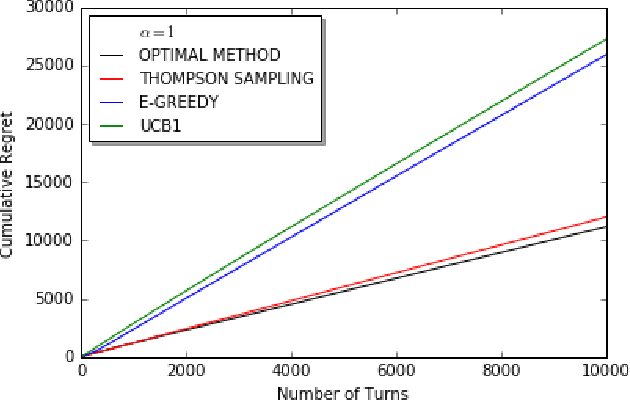

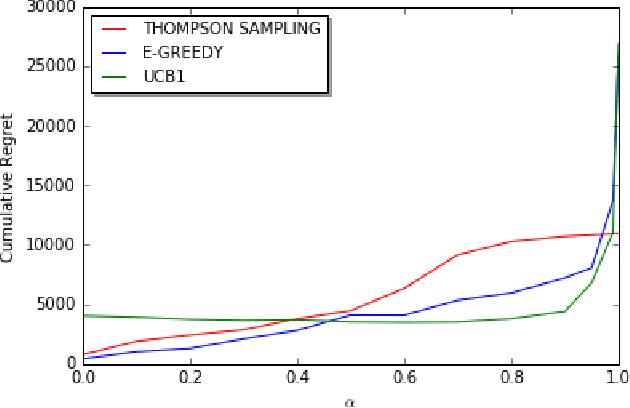

We consider the decentralized stochastic multi-armed bandit problem with multiple players under different probabilities of communication between players. Each player decides which arm to pull without cooperating with the others, aiming to maximize his or her own reward, but at the end of every turn informs his or her neighbors of the arm he or she pulled and the reward he or she received. The neighbors of each player are determined by an Erdős-Rényi graph that is regenerated at the beginning of every turn. We consider i.i.d. rewards drawn from Bernoulli distributions and assume that players know neither the arms' probability distributions nor their mean values. In case of a collision, we assume that only one of the colliding players, chosen at random, receives the reward, while the others receive zero reward. We study the effect of connectivity, i.e., the degree of communication between players, on the cumulative regret of the well-known algorithms UCB1, ε-greedy, and Thompson Sampling.
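The setting described in the abstract can be illustrated with a small simulation. This is a minimal sketch, not the authors' code, and it rests on assumptions the abstract does not state: each player pools its own (arm, reward) observation with the pairs reported by its current neighbors and feeds the pooled counts to its policy; a player reports the reward it actually received (so a collision loser reports 0); the ε-greedy rate of 0.1 and the Beta(1, 1) prior for Thompson Sampling are illustrative choices; and regret is measured against the sum of the `n_players` largest arm means per turn. The names `simulate`, `POLICIES`, and the `*_choice` helpers are hypothetical.

```python
import math
import random


def ucb1_choice(counts, sums, rng):
    """UCB1: try every arm once, then maximize mean + sqrt(2 ln n / n_a)."""
    for arm, n in enumerate(counts):
        if n == 0:
            return arm
    total = sum(counts)  # n = all observations this player has pooled so far
    return max(range(len(counts)),
               key=lambda a: sums[a] / counts[a] + math.sqrt(2.0 * math.log(total) / counts[a]))


def eps_greedy_choice(counts, sums, rng, eps=0.1):
    """epsilon-greedy: explore uniformly with probability eps, else exploit."""
    if rng.random() < eps:
        return rng.randrange(len(counts))
    # Untried arms get an infinite estimate so they are exploited first.
    return max(range(len(counts)),
               key=lambda a: math.inf if counts[a] == 0 else sums[a] / counts[a])


def thompson_choice(counts, sums, rng):
    """Thompson Sampling with an assumed Beta(1, 1) prior on each Bernoulli mean."""
    samples = [rng.betavariate(1.0 + sums[a], 1.0 + counts[a] - sums[a])
               for a in range(len(counts))]
    return max(range(len(counts)), key=lambda a: samples[a])


POLICIES = {"ucb1": ucb1_choice, "eps_greedy": eps_greedy_choice, "thompson": thompson_choice}


def simulate(means, n_players, p_edge, horizon, policy="ucb1", seed=0):
    """Return the cumulative regret of noncooperative players on Bernoulli arms.

    Assumed model: each turn every player picks an arm with its own policy,
    a fresh Erdos-Renyi graph G(n_players, p_edge) decides who talks to whom,
    and each player updates its statistics with its own (arm, reward) pair
    plus those reported by its current neighbors.
    """
    choose = POLICIES[policy]
    rng = random.Random(seed)
    k = len(means)
    counts = [[0] * k for _ in range(n_players)]
    sums = [[0.0] * k for _ in range(n_players)]
    # Benchmark (assumption): the n_players best arms pulled without collisions.
    best_per_turn = sum(sorted(means, reverse=True)[:n_players])
    total_reward = 0.0

    for _ in range(horizon):
        # Each player chooses independently; there is no coordination.
        choices = [choose(counts[i], sums[i], rng) for i in range(n_players)]

        # One Bernoulli draw per pulled arm; on a collision a uniformly random
        # puller receives it and the other pullers receive 0.
        rewards = [0.0] * n_players
        for arm in set(choices):
            pullers = [i for i, c in enumerate(choices) if c == arm]
            draw = 1.0 if rng.random() < means[arm] else 0.0
            rewards[rng.choice(pullers)] = draw
        total_reward += sum(rewards)

        # Fresh Erdos-Renyi graph each turn: every pair is linked independently
        # with probability p_edge (drawing it before or after the pulls is
        # equivalent here, since it only affects the information exchange).
        neighbors = [[] for _ in range(n_players)]
        for i in range(n_players):
            for j in range(i + 1, n_players):
                if rng.random() < p_edge:
                    neighbors[i].append(j)
                    neighbors[j].append(i)

        # Pool own observation with neighbors' reported (arm, reward) pairs.
        for i in range(n_players):
            for src in [i] + neighbors[i]:
                counts[i][choices[src]] += 1
                sums[i][choices[src]] += rewards[src]

    return horizon * best_per_turn - total_reward
```

To probe the effect of connectivity under these assumptions, one could compare, for example, `simulate([0.2, 0.5, 0.8], 3, p, 5000, policy="thompson")` for `p` in `0.0`, `0.5`, and `1.0`, averaging over several seeds. Setting `p_edge=0.0` reduces the model to fully isolated players, and `p_edge=1.0` gives every player the full history of all pulls.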