The Consequences of the Framing of Machine Learning Risk Prediction Models: Evaluation of Sepsis in General Wards

Paper and Code

Jan 26, 2021

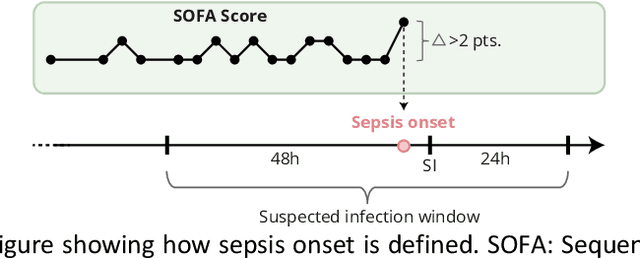

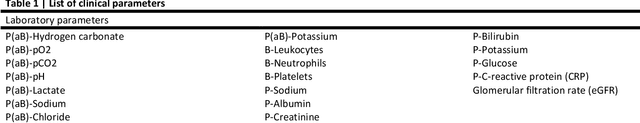

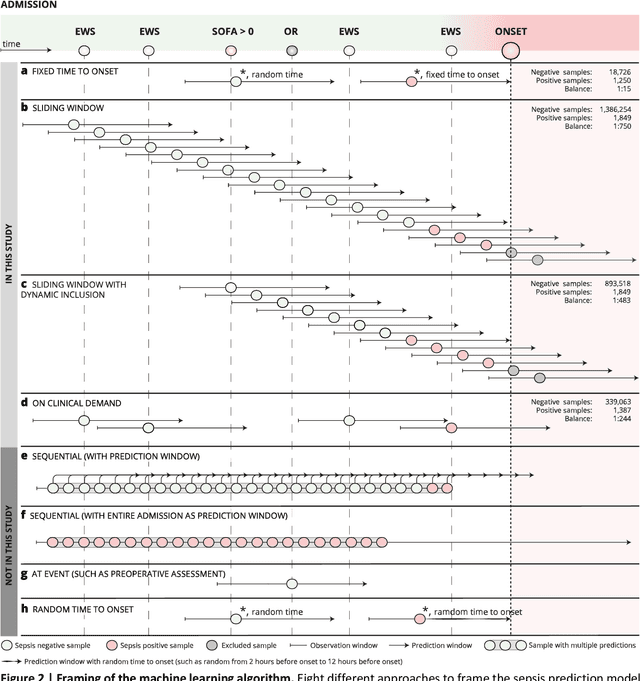

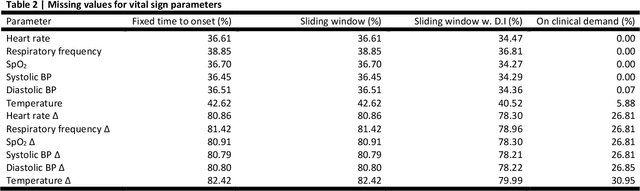

Objectives: To evaluate the consequences of the framing of machine learning risk prediction models. We evaluate how framing affects model performance and model learning in four different approaches previously applied in published artificial-intelligence (AI) models.

Setting and participants: We analysed structured secondary healthcare data from 221,283 citizens from four Danish municipalities who were 18 years of age or older.

Results: The four models had similar population level performance (a mean area under the receiver operating characteristic curve of 0.73 to 0.82), in contrast to the mean average precision, which varied greatly from 0.007 to 0.385. Correspondingly, the percentage of missing values also varied between framing approaches. The on-clinical-demand framing, which involved samples for each time the clinicians made an early warning score assessment, showed the lowest percentage of missing values among the vital sign parameters, and this model was also able to learn more temporal dependencies than the others. The Shapley additive explanations demonstrated opposing interpretations of SpO2 in the prediction of sepsis as a consequence of differentially framed models.

Conclusions: The profound consequences of framing mandate attention from clinicians and AI developers, as the understanding and reporting of framing are pivotal to the successful development and clinical implementation of future AI technology. Model framing must reflect the expected clinical environment. The importance of proper problem framing is by no means exclusive to sepsis prediction and applies to most clinical risk prediction models.
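The Results contrast a narrow AUROC range with a wide average precision range. The sketch below illustrates one general reason the two metrics can diverge: AUROC is insensitive to class balance, whereas average precision falls as negatives become more numerous. It uses synthetic Gaussian scores only. The sample counts, the score distributions, and the idea that a framing choice changes the number of negative samples are assumptions made for illustration; none of this is the authors' data, code, or stated explanation.

```python
import random


def auroc(labels, scores):
    """AUROC from the Mann-Whitney U statistic; tied scores share an average rank."""
    order = sorted(range(len(scores)), key=lambda i: scores[i])
    ranks = [0.0] * len(scores)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and scores[order[j + 1]] == scores[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1  # 1-based average rank of the tied group
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    pos_rank_sum = sum(r for r, y in zip(ranks, labels) if y == 1)
    return (pos_rank_sum - n_pos * (n_pos + 1) / 2) / (n_pos * n_neg)


def average_precision(labels, scores):
    """Mean of the precision measured at the rank of each positive sample."""
    order = sorted(range(len(scores)), key=lambda i: -scores[i])
    hits, total = 0, 0.0
    for rank, idx in enumerate(order, start=1):
        if labels[idx] == 1:
            hits += 1
            total += hits / rank
    return total / hits


def simulate(n_pos, n_neg, seed=0):
    """Hypothetical risk scores: positives ~ N(1, 1), negatives ~ N(0, 1)."""
    rng = random.Random(seed)
    scores = [rng.gauss(1.0, 1.0) for _ in range(n_pos)]
    scores += [rng.gauss(0.0, 1.0) for _ in range(n_neg)]
    labels = [1] * n_pos + [0] * n_neg
    return labels, scores


# Two hypothetical framings of the same positives: the second one generates
# ten times as many negative samples (for example, more windows per patient).
labels_dense, scores_dense = simulate(n_pos=500, n_neg=5_000)
labels_sparse, scores_sparse = simulate(n_pos=500, n_neg=50_000)

auc_dense = auroc(labels_dense, scores_dense)
auc_sparse = auroc(labels_sparse, scores_sparse)
ap_dense = average_precision(labels_dense, scores_dense)
ap_sparse = average_precision(labels_sparse, scores_sparse)

print(f"dense framing:  AUROC={auc_dense:.3f}  AP={ap_dense:.3f}")
print(f"sparse framing: AUROC={auc_sparse:.3f}  AP={ap_sparse:.3f}")
```

Because both simulated framings share the same score distributions, the AUROC values stay close while average precision drops in the framing with more negatives. Comparing average precision across differently framed models therefore requires reporting the outcome prevalence each framing produces.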
