The connections between Lyapunov functions for some optimization algorithms and differential equations


Sep 01, 2020

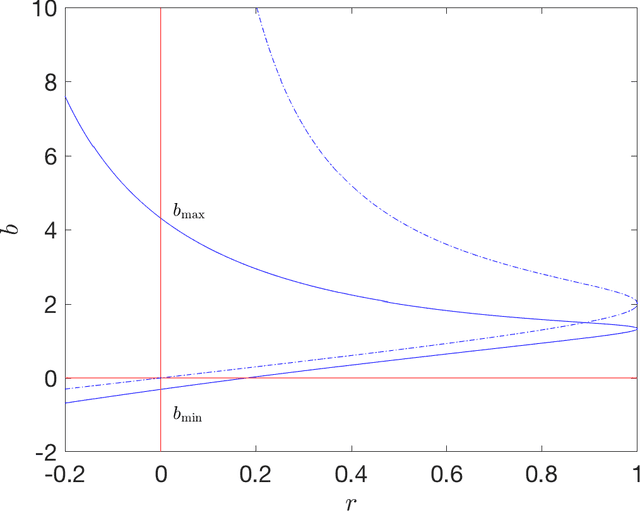

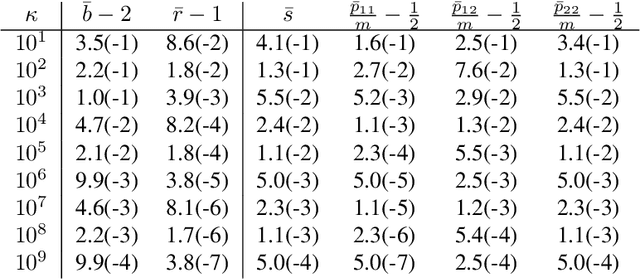

In this manuscript we study the properties of a family of second-order differential equations with damping, their discretizations, and their connections with accelerated optimization algorithms for $m$-strongly convex and $L$-smooth functions. In particular, using the Linear Matrix Inequality framework developed in \emph{Fazlyab et al. $(2018)$}, we analytically derive a (discrete) Lyapunov function for a two-parameter family of Nesterov optimization methods, which allows for a complete characterization of their convergence rate. We then show that, in the appropriate limit, this family of methods may be seen as a discretization of a family of second-order ordinary differential equations whose properties can also be understood through a (continuous) Lyapunov function, which in turn can be obtained by studying the limiting behaviour of the discrete Lyapunov function. Finally, we show that the majority of typical discretizations of this ODE, such as the Heavy ball method, do not possess suitable discrete Lyapunov functions and hence fail to reproduce the desired limiting behaviour of the ODE, which in turn implies that their convergence rates, when seen as optimization methods, cannot behave in an "accelerated" manner.
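To make the notion of "accelerated" convergence concrete, the following is a minimal sketch, not the two-parameter family analysed in the manuscript. It assumes the textbook constant-momentum Nesterov scheme with step size $1/L$ and momentum $(\sqrt{\kappa}-1)/(\sqrt{\kappa}+1)$, where $\kappa = L/m$, and compares it with plain gradient descent on a hypothetical diagonal quadratic $f(x) = \tfrac{1}{2}\sum_i d_i x_i^2$, for which $m = \min_i d_i$ and $L = \max_i d_i$. The function names and the test problem are illustrative assumptions.

```python
import math


def grad(x, diag):
    """Gradient of f(x) = 0.5 * sum(d_i * x_i**2)."""
    return [d * xi for d, xi in zip(diag, x)]


def gradient_descent(x0, diag, steps):
    """Plain gradient descent with step size 1/L."""
    L = max(diag)
    x = list(x0)
    for _ in range(steps):
        g = grad(x, diag)
        x = [xi - gi / L for xi, gi in zip(x, g)]
    return x


def nesterov(x0, diag, steps):
    """Textbook Nesterov method for strongly convex functions
    (assumed parameter choice, not the paper's general family)."""
    m, L = min(diag), max(diag)
    kappa = L / m
    alpha = 1.0 / L  # step size
    beta = (math.sqrt(kappa) - 1.0) / (math.sqrt(kappa) + 1.0)  # momentum
    x_prev = list(x0)
    x = list(x0)
    for _ in range(steps):
        # Extrapolate, then take a gradient step from the extrapolated point.
        y = [xi + beta * (xi - xp) for xi, xp in zip(x, x_prev)]
        g = grad(y, diag)
        x_prev, x = x, [yi - alpha * gi for yi, gi in zip(y, g)]
    return x


def norm(x):
    return math.sqrt(sum(xi * xi for xi in x))


# Hypothetical test problem with m = 1 and L = 100; the minimizer is x = 0.
diag = [1.0, 10.0, 100.0]
x0 = [1.0, 1.0, 1.0]
err_gd = norm(gradient_descent(x0, diag, 100))
err_nag = norm(nesterov(x0, diag, 100))
print("gradient descent error:", err_gd)
print("Nesterov error:        ", err_nag)
```

On this example the distance to the minimizer after the same number of iterations is markedly smaller for the Nesterov iteration, consistent with a contraction factor governed by $\sqrt{\kappa}$ rather than $\kappa$.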
