The benefits of synthetic data for action categorization

Jan 20, 2020

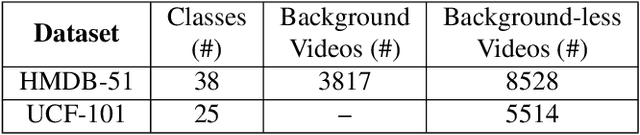

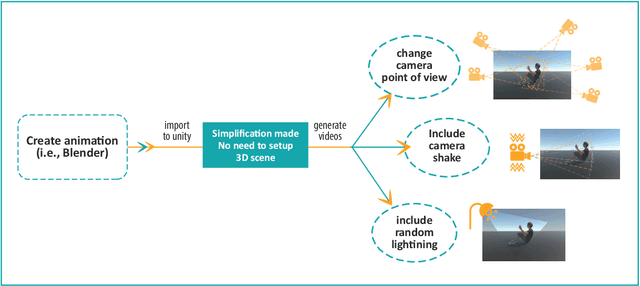

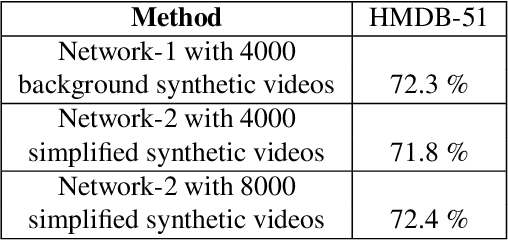

In this paper, we study the value of synthetically produced videos as training data for neural networks that perform action categorization. Motivated by the fact that the texture and background of a video play little to no role in optical flow, we generated simplified videos with no texture and no background and used this synthetic data to train a Temporal Segment Network (TSN). Augmenting the TSN training set with the simplified synthetic data improved accuracy on HMDB-51 over the original network (68.5%), reaching 71.8% with 4,000 added videos and 72.4% with 8,000 added videos. Training on simplified synthetic videos alone, on 25 classes of UCF-101, achieved 30.71% accuracy with 2,500 videos and 52.7% with 5,000 videos. Finally, when the real videos of UCF-25 were reduced to 10% of their original number and combined with synthetic videos, accuracy dropped only to 85.41%, compared with a drop to 77.4% when no synthetic data was added.
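The reduced-data experiment can be pictured as building a mixed training list: keep a fraction of the real videos and add the synthetic ones. The following is a minimal sketch of that idea, not the authors' pipeline. The function name `build_mixed_training_set`, the `(path, label)` representation, and the choice to subsample per class are all assumptions made for illustration; the abstract does not say how the 10% subset was drawn.

```python
import random


def build_mixed_training_set(real_videos, synthetic_videos,
                             real_fraction=0.10, seed=0):
    """Keep a fraction of real videos per class and add all synthetic ones.

    Both inputs are lists of (path, label) pairs. The paths and labels are
    hypothetical placeholders, and per-class subsampling is an assumption.
    Returns a shuffled list of (path, label, source) triples.
    """
    rng = random.Random(seed)

    # Group real video paths by class label.
    by_label = {}
    for path, label in real_videos:
        by_label.setdefault(label, []).append(path)

    mixed = []
    for label, paths in sorted(by_label.items()):
        # Keep at least one real video so no class loses all real data.
        keep = max(1, round(len(paths) * real_fraction))
        for path in rng.sample(paths, keep):
            mixed.append((path, label, "real"))

    # Append every synthetic video, tagged with its source.
    mixed.extend((path, label, "synthetic")
                 for path, label in synthetic_videos)
    rng.shuffle(mixed)
    return mixed
```

Setting `real_fraction=1.0` gives the plain augmentation setting, and passing an empty `real_videos` list gives the synthetic-only setting, so the three experiments in the abstract differ only in how this list is assembled.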
