The Backbone Method for Ultra-High Dimensional Sparse Machine Learning


Jun 11, 2020

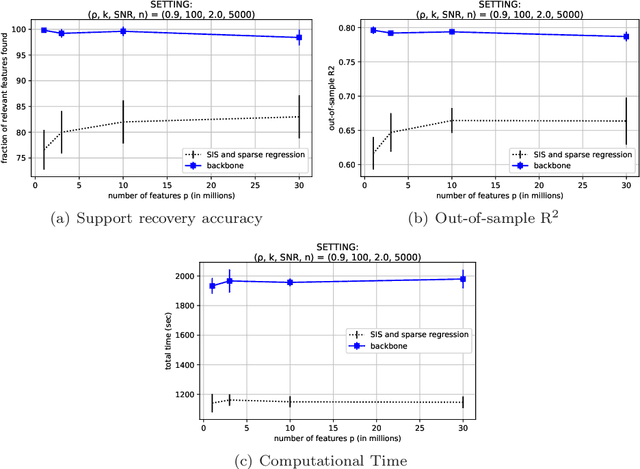

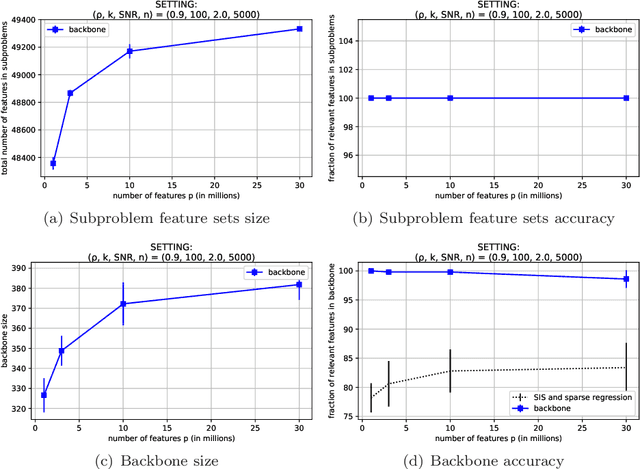

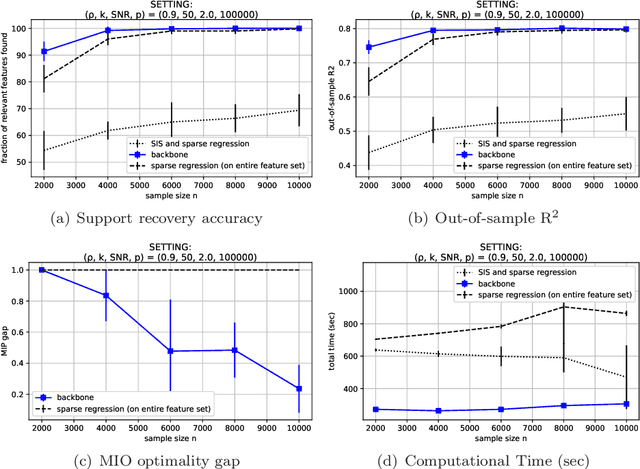

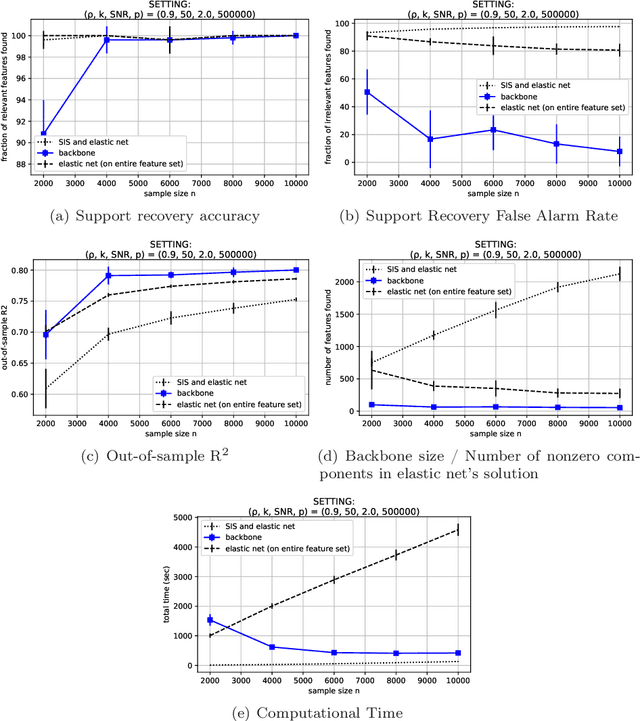

We present the backbone method, a generic framework that enables sparse and interpretable supervised machine learning methods to scale to ultra-high dimensional problems. We solve, in minutes, sparse regression problems with $p \sim 10^7$ features and decision tree induction problems with $p \sim 10^5$ features. The proposed method operates in two phases: we first determine the backbone set, which consists of potentially relevant features, by solving a number of tractable subproblems; we then solve a reduced problem that considers only the backbone features. Numerical experiments demonstrate that our method competes with optimal solutions when exact methods apply and substantially outperforms baseline heuristics when exact methods do not scale, both in recovering the true relevant features and in out-of-sample predictive performance.
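The two-phase structure described above can be illustrated with a minimal sketch in pure Python. It is not the authors' implementation, and everything in it is an assumption made for illustration: the subproblems are built by shuffling the features and splitting them into blocks, each subproblem is "solved" with a simple correlation-based top-k heuristic, and the reduced problem is solved with a basic matching-pursuit loop rather than an exact sparse regression solver. The function names (`build_backbone`, `solve_reduced`, `demo`) and all parameter values are hypothetical.

```python
import math
import random


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def score(col, target):
    """Absolute inner product of a feature column with a target, scaled by the column norm."""
    return abs(dot(col, target)) / math.sqrt(dot(col, col))


def top_k(cols, candidates, target, k):
    """Heuristic stand-in for a sparse subproblem solver: keep the k best-scoring features."""
    return sorted(candidates, key=lambda j: score(cols[j], target), reverse=True)[:k]


def build_backbone(cols, y, k, block_size, rounds, rng):
    """Phase 1 (assumed construction): union of the features kept by each subproblem."""
    p = len(cols)
    backbone = set()
    for _ in range(rounds):
        order = list(range(p))
        rng.shuffle(order)
        # Each block of features is one tractable subproblem.
        for start in range(0, p, block_size):
            block = order[start:start + block_size]
            backbone.update(top_k(cols, block, y, k))
    return backbone


def solve_reduced(cols, y, backbone, k):
    """Phase 2 (assumed solver): matching pursuit restricted to the backbone features."""
    residual = list(y)
    remaining = set(backbone)
    selected = []
    for _ in range(k):
        j = max(sorted(remaining), key=lambda c: score(cols[c], residual))
        coef = dot(cols[j], residual) / dot(cols[j], cols[j])
        residual = [r - coef * x for r, x in zip(residual, cols[j])]
        selected.append(j)
        remaining.remove(j)
    return sorted(selected)


def demo(seed=0, n=300, p=200):
    """Synthetic example: three relevant features among p Gaussian features."""
    rng = random.Random(seed)
    cols = [[rng.gauss(0.0, 1.0) for _ in range(n)] for _ in range(p)]
    true_coefs = {3: 3.0, 50: -2.0, 120: 2.0}  # hypothetical ground truth
    y = [
        sum(c * cols[j][i] for j, c in true_coefs.items()) + rng.gauss(0.0, 0.1)
        for i in range(n)
    ]
    backbone = build_backbone(cols, y, k=3, block_size=40, rounds=2, rng=rng)
    selected = solve_reduced(cols, y, backbone, k=3)
    return sorted(true_coefs), backbone, selected


if __name__ == "__main__":
    support, backbone, selected = demo()
    print("true support:", support)
    print("backbone size:", len(backbone))
    print("selected:", selected)
```

The point of the sketch is the division of labor: the second phase only ever sees the backbone, which here has at most 30 of the 200 features, so a more expensive solver could be substituted for `solve_reduced` without touching the first phase.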
