The Arm-Swing Is Discriminative in Video Gait Recognition for Athlete Re-Identification

Paper and Code

Jun 21, 2021

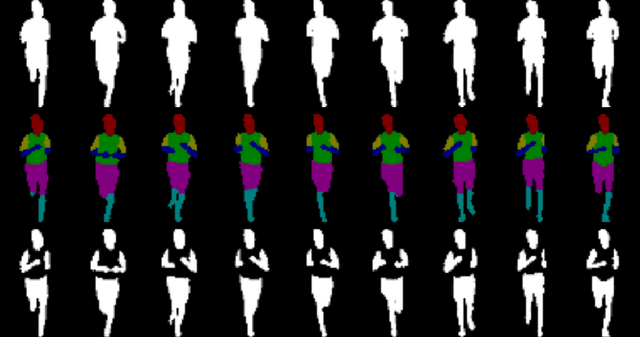

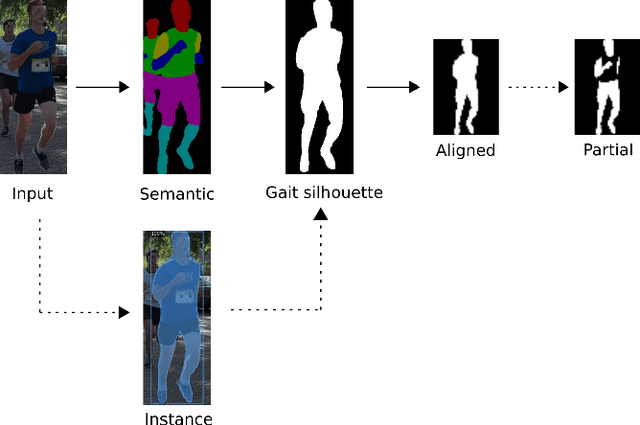

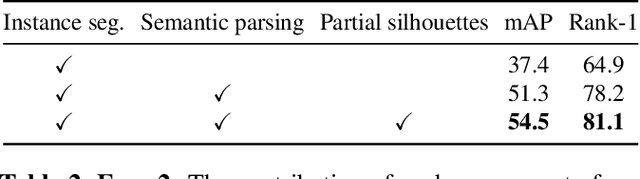

In this paper, we evaluate running gait as an attribute for video person re-identification in a long-distance running event. We show that running gait recognition achieves competitive performance compared to appearance-based approaches in the cross-camera retrieval task, and that gait and appearance features are complementary. With binary gait silhouettes, the arm swing during running is hard to distinguish because of ambiguity in the torso region. We propose using human semantic parsing to create partial gait silhouettes that leave out the torso. Leaving out the torso improves recognition results by making the arm swing more visible at frontal and oblique viewing angles, which hints that arm swings are somewhat personal. Compared to using full-body silhouettes, experiments show an increase of 3.2% mAP on CampusRun and a 4.8% increase in accuracy in the frontal and rear views on CASIA-B.
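The partial-silhouette idea can be illustrated with a minimal sketch. It assumes a human parsing model has already produced a per-pixel label map for one frame. The label ids (`BACKGROUND`, `TORSO`), the function names, and the toy frame are hypothetical and chosen for illustration only; they are not the authors' implementation or the label scheme of any particular parser. Nested lists are used to keep the sketch dependency-free.

```python
# Hypothetical label ids; a real human-parsing model defines its own label map.
BACKGROUND = 0
TORSO = 2


def full_silhouette(parsing):
    """Binary silhouette: 1 for every non-background pixel, 0 otherwise."""
    return [[1 if label != BACKGROUND else 0 for label in row] for row in parsing]


def partial_silhouette(parsing, drop_labels=(TORSO,)):
    """Binary silhouette with the given body parts (by default the torso) left out."""
    dropped = set(drop_labels)
    return [
        [1 if label != BACKGROUND and label not in dropped else 0 for label in row]
        for row in parsing
    ]


# Toy 4x5 parsing map (assumed labels): 1 = head, 2 = torso, 3 = arm, 4 = leg.
toy_parsing = [
    [0, 0, 1, 0, 0],
    [0, 3, 2, 3, 0],
    [0, 3, 2, 3, 0],
    [0, 4, 0, 4, 0],
]

full = full_silhouette(toy_parsing)
partial = partial_silhouette(toy_parsing)

for row in partial:
    print("".join("#" if pixel else "." for pixel in row))
```

In the full silhouette, the arm and torso pixels merge into one blob. In the partial silhouette, the torso pixels become background, so the arm pixels stay separable from the rest of the body.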
