Thanks for Nothing: Predicting Zero-Valued Activations with Lightweight Convolutional Neural Networks


Sep 17, 2019

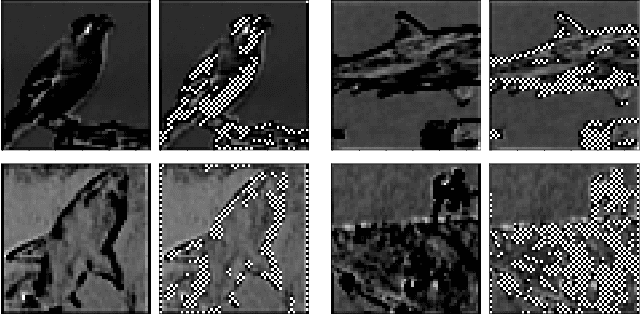

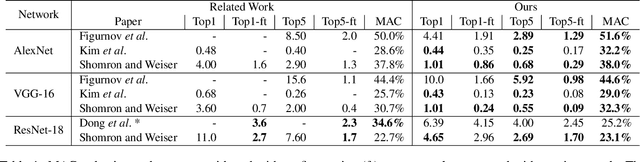

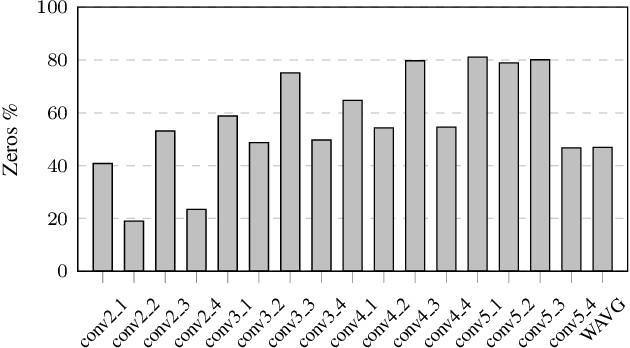

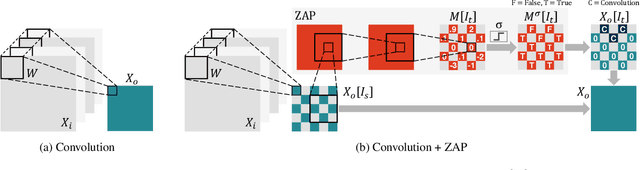

Convolutional neural networks (CNNs) achieve state-of-the-art results on various tasks at the cost of high computational demands. Inspired by the observation that spatial correlation exists in CNN output feature maps (ofms), we propose a method to dynamically predict whether ofm activations are zero-valued according to their neighboring activation values, thereby skipping the computation of activations predicted to be zero and reducing the number of convolution operations. We implement the zero activation predictor (ZAP) with a lightweight CNN, which imposes negligible overhead and is easy to deploy and train. Furthermore, the same ZAP can be tuned to many different operating points along the accuracy-savings trade-off curve. For example, using VGG-16 and the ILSVRC-2012 dataset, two operating points reduce multiply-accumulate (MAC) operations by 23.5% and 32.3% with top-1/top-5 accuracy degradation of 0.3%/0.1% and 1%/0.5%, respectively, without fine-tuning. With one epoch of fine-tuning, MAC operations can be reduced by 41.7% with 1.1%/0.52% top-1/top-5 accuracy degradation.
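To make the idea of predicting zeros from neighboring activations concrete, here is a minimal NumPy sketch. It is not the paper's ZAP, which is a lightweight CNN: the hand-written neighbor vote, the checkerboard choice of which activations are computed first, and the names `checkerboard_mask`, `predict_zero_mask`, and `threshold` are all assumptions made for illustration. The `threshold` parameter stands in for the knob that moves along the accuracy-savings trade-off curve.

```python
import numpy as np


def checkerboard_mask(h, w):
    """Hypothetical choice of which activations are computed up front."""
    rows, cols = np.indices((h, w))
    return (rows + cols) % 2 == 0


def predict_zero_mask(ofm_partial, known, threshold=0.5):
    """Predict which not-yet-computed activations are zero.

    ofm_partial: 2D post-ReLU feature map, valid only where `known` is True.
    known:       boolean mask of activations that were actually computed.
    threshold:   fraction of known 3x3 neighbors that must be zero for an
                 unknown activation to be predicted zero (illustrative knob).
    Returns a boolean mask that is True where computation could be skipped.
    """
    h, w = ofm_partial.shape
    zero_known = np.pad((known & (ofm_partial == 0)).astype(float), 1)
    known_f = np.pad(known.astype(float), 1)

    zero_count = np.zeros((h, w))
    known_count = np.zeros((h, w))
    # Sum over the 3x3 neighborhood using shifted views of the padded maps.
    for dy in range(3):
        for dx in range(3):
            zero_count += zero_known[dy:dy + h, dx:dx + w]
            known_count += known_f[dy:dy + h, dx:dx + w]

    # Fraction of known neighbors that are zero; 0 where nothing is known.
    frac = np.divide(zero_count, known_count,
                     out=np.zeros((h, w)), where=known_count > 0)
    return (~known) & (frac >= threshold)


if __name__ == "__main__":
    ofm = np.zeros((4, 4))
    ofm[:, 2:] = 1.0                      # left half zero, right half active
    known = checkerboard_mask(4, 4)
    skip = predict_zero_mask(ofm * known, known, threshold=0.5)
    print("fraction of activations skipped:", skip.mean())
```

Only the activations marked in the returned mask would be skipped; the remaining unknown positions would still be computed by the ordinary convolution. Raising `threshold` makes the predictor more conservative (fewer skipped MACs, fewer wrongly zeroed activations), while lowering it trades accuracy for savings.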
