Text Normalization using Memory Augmented Neural Networks

Jul 06, 2018

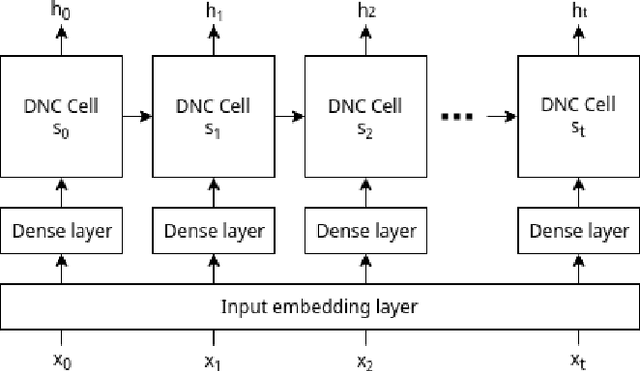

We perform text normalization, i.e. the transformation of words from their written form to their spoken form, using a memory-augmented neural network. By adding a dynamic memory access and storage mechanism, we present a neural architecture that can serve as a language-agnostic text normalization system while avoiding the kind of unacceptable errors made by LSTM-based recurrent neural networks. By reducing the number of unacceptable mistakes, we show that such an architecture is indeed a better alternative. Our proposed system requires significantly less data, training time, and compute resources. Although a few occurrences of these errors remain in certain semiotic classes, we demonstrate that memory-augmented networks with meta-learning capabilities can open many doors to a superior text normalization system.
