TensorPlan and the Few Actions Lower Bound for Planning in MDPs under Linear Realizability of Optimal Value Functions

Oct 05, 2021

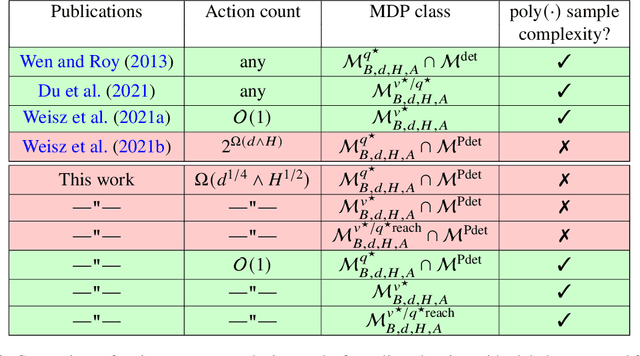

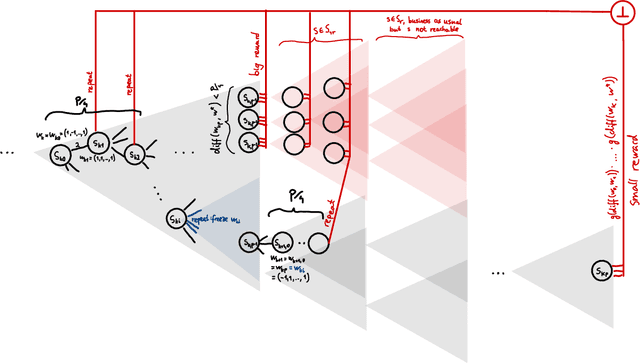

We consider the minimax query complexity of online planning with a generative model in fixed-horizon Markov decision processes (MDPs) with linear function approximation. Following recent works, we consider broad classes of problems where either (i) the optimal value function $v^\star$ or (ii) the optimal action-value function $q^\star$ lies in the linear span of some features; or (iii) both $v^\star$ and $q^\star$ lie in the linear span when restricted to the states reachable from the starting state. Recently, Weisz et al. (2021b) showed that under (ii) the minimax query complexity of any planning algorithm is at least exponential in the horizon $H$ or in the feature dimension $d$ when the size $A$ of the action set can be chosen to be exponential in $\min(d,H)$. On the other hand, for setting (i), Weisz et al. (2021a) introduced TensorPlan, a planner whose query cost is polynomial in all relevant quantities when the number of actions is fixed. Among other things, these two works left open the question of whether polynomial query complexity is possible when $A$ is subexponential in $\min(d,H)$. In this paper we answer this question in the negative: we show that an exponentially large lower bound holds when $A=\Omega(\min(d^{1/4},H^{1/2}))$, under any of (i), (ii), or (iii). In particular, this implies a perhaps surprising exponential separation of query complexity compared to the work of Du et al. (2021), who prove a polynomial upper bound when (iii) holds for all states. Furthermore, we show that the upper bound of TensorPlan can be extended to hold under (iii) and, for MDPs with deterministic transitions and stochastic rewards, also under (ii).
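
For readers who want the three realizability conditions spelled out, one common way to formalize them is sketched below. The notation is an assumption made here for illustration and may differ from the paper's: $\varphi_h$ denotes a known $d$-dimensional feature map at stage $h$ (defined on states for $v^\star$ and on state-action pairs for $q^\star$), $\theta_h$ and $\theta'_h$ are unknown parameter vectors, $\mathcal{S}$ and $\mathcal{A}$ are the state and action sets, and $\mathcal{S}_{\mathrm{reach}}$ is the set of states reachable from the starting state.

$$
\begin{aligned}
\text{(i)}\quad & \exists\, \theta_h \in \mathbb{R}^d:\quad v^\star_h(s) = \langle \varphi_h(s), \theta_h \rangle && \text{for all } s \in \mathcal{S},\ h \in [H], \\
\text{(ii)}\quad & \exists\, \theta_h \in \mathbb{R}^d:\quad q^\star_h(s,a) = \langle \varphi_h(s,a), \theta_h \rangle && \text{for all } (s,a) \in \mathcal{S} \times \mathcal{A},\ h \in [H], \\
\text{(iii)}\quad & \exists\, \theta_h, \theta'_h \in \mathbb{R}^d:\quad v^\star_h(s) = \langle \varphi_h(s), \theta_h \rangle \ \text{ and } \ q^\star_h(s,a) = \langle \varphi_h(s,a), \theta'_h \rangle && \text{for all } s \in \mathcal{S}_{\mathrm{reach}},\ a \in \mathcal{A},\ h \in [H].
\end{aligned}
$$

Read this way, (iii) asks for both value functions to be linear but only on reachable states, which is why it is neither simply stronger nor simply weaker than (i) or (ii).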
