Template-Based Financial Report Generation in Agentic and Decomposed Information Retrieval

Apr 19, 2025

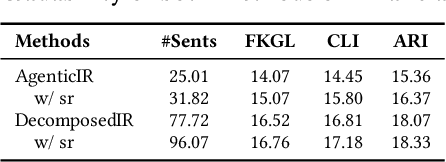

Generating structured financial reports from companies' earnings releases is crucial for understanding financial performance and is widely practiced in real-world analytics. However, existing summarization methods often produce broad, high-level summaries that lack the precision and detail required for financial reports, which typically focus on specific, structured sections. While Large Language Models (LLMs) hold promise, generating reports that adhere to predefined multi-section templates remains challenging. This paper investigates two LLM-based approaches popular in industry for generating templated financial reports: an agentic information retrieval (IR) framework, AgenticIR, and a decomposed IR approach, DecomposedIR. AgenticIR uses collaborative agents prompted with the full template. In contrast, DecomposedIR applies a prompt-chaining workflow that breaks the template down and reframes each section as a query, which the LLM answers using the earnings release. To assess the generated reports quantitatively, we evaluated both methods in two scenarios: one using a financial dataset without direct human references, and another using a weather-domain dataset with expert-written reports. Experimental results show that while AgenticIR may excel at orchestrating tasks and generating concise reports through agent collaboration, DecomposedIR outperforms AgenticIR by a statistically significant margin in providing broader and more detailed coverage in both scenarios, a result that invites reflection on how agentic frameworks are used in real-world applications.
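The decomposed workflow described above can be illustrated with a minimal Python sketch. This is not the paper's implementation: the function names (`section_to_query`, `generate_decomposed_report`), the prompt wording, and the `llm` callable are all hypothetical, and the sketch simplifies the workflow to one independent LLM call per template section.

```python
from typing import Callable, Dict, List


def section_to_query(section: str) -> str:
    """Reframe a template section heading as a query (hypothetical wording)."""
    return (
        f"Using only the earnings release below, write the "
        f"'{section}' section of the financial report."
    )


def generate_decomposed_report(
    template_sections: List[str],
    earnings_release: str,
    llm: Callable[[str], str],
) -> Dict[str, str]:
    """Answer one query per template section and collect the results.

    `llm` is an assumed stand-in for any text-completion function that
    maps a prompt string to a response string.
    """
    report: Dict[str, str] = {}
    for section in template_sections:
        # Each section gets its own prompt that carries the source document.
        prompt = (
            f"{section_to_query(section)}\n\n"
            f"Earnings release:\n{earnings_release}"
        )
        report[section] = llm(prompt)
    return report


if __name__ == "__main__":
    # Placeholder model so the sketch runs without any external service.
    def placeholder_llm(prompt: str) -> str:
        return f"[model output for a prompt of {len(prompt)} characters]"

    sections = ["Revenue", "Operating Expenses", "Outlook"]
    release = "Example earnings release text."
    for name, text in generate_decomposed_report(
        sections, release, placeholder_llm
    ).items():
        print(f"{name}: {text}")
```

By contrast, an approach prompted with the full template would pass all section headings at once, so the per-section loop is the part that distinguishes the decomposed design in this sketch.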
