Teaching Key Machine Learning Principles Using Anti-learning Datasets


Nov 16, 2020

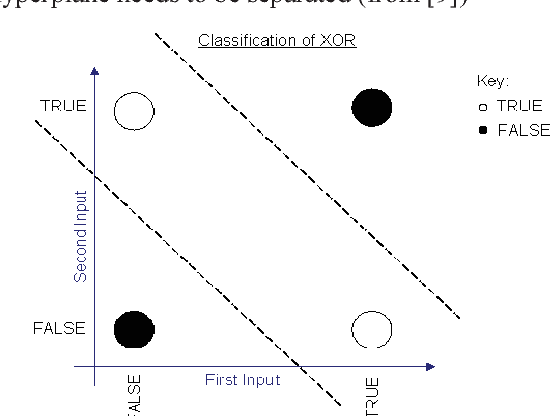

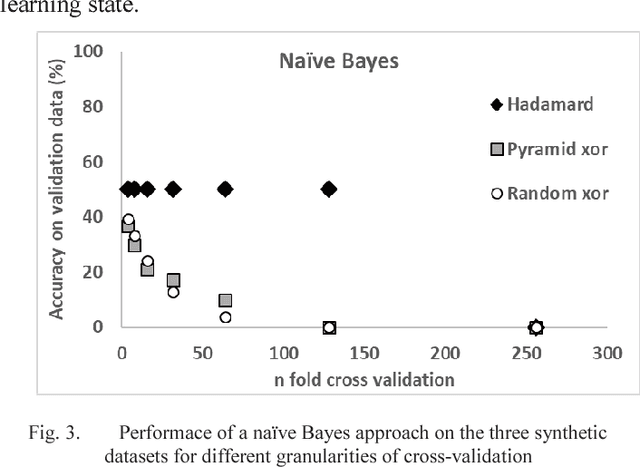

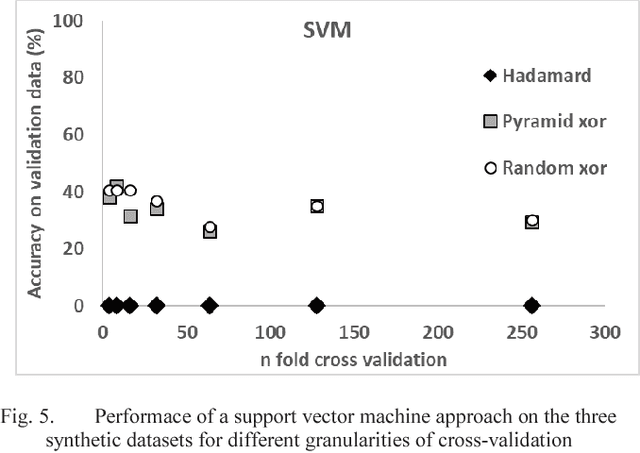

Much of the teaching of machine learning focuses on iterative hill-climbing approaches and on using local knowledge to gain information that leads to local or global maxima. In this paper we advocate teaching alternative methods of generalising to the best possible solution, including a method called anti-learning. Through simple teaching methods, students can gain a deeper understanding of why a model must be validated on data excluded from the training process, and of the fact that each problem requires its own methods to solve. We also illustrate the need to train a model on sufficient data by showing that different granularities of cross-validation can yield very different results.
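The points about validating on held-out data and about cross-validation granularity can be made concrete with a small toy experiment. This is a minimal sketch, not the paper's code or datasets: the alternating 1-D dataset, the majority-vote and 1-nearest-neighbour predictors, and the function names (`alternating_dataset`, `majority_predict`, `one_nn_predict`, `training_accuracy`, `k_fold_accuracy`) are hypothetical, chosen only because they produce systematically below-chance held-out accuracy, the kind of behaviour associated with anti-learning.

```python
from typing import Callable, List, Sequence, Tuple

# Hypothetical toy example (not from the paper).
# Predictor signature: (training inputs, training labels, test input) -> label.
Predictor = Callable[[Sequence[float], Sequence[int], float], int]


def alternating_dataset(n: int) -> Tuple[List[float], List[int]]:
    """Toy 1-D data: x = 0, 1, ..., n-1 with labels alternating 0, 1, 0, 1, ..."""
    xs = [float(i) for i in range(n)]
    ys = [i % 2 for i in range(n)]
    return xs, ys


def majority_predict(train_x: Sequence[float], train_y: Sequence[int], x: float) -> int:
    """Ignore x and predict the more common training label (ties go to 0)."""
    ones = sum(train_y)
    return 1 if ones > len(train_y) - ones else 0


def one_nn_predict(train_x: Sequence[float], train_y: Sequence[int], x: float) -> int:
    """1-nearest-neighbour: copy the label of the closest training point
    (distance ties go to the earliest training point)."""
    best = min(range(len(train_x)), key=lambda i: abs(train_x[i] - x))
    return train_y[best]


def training_accuracy(xs: List[float], ys: List[int], predict: Predictor) -> float:
    """Resubstitution accuracy: evaluate on the very data used for training."""
    return sum(predict(xs, ys, x) == y for x, y in zip(xs, ys)) / len(xs)


def k_fold_accuracy(xs: List[float], ys: List[int], k: int, predict: Predictor) -> float:
    """Accuracy over k contiguous folds; k == len(xs) is leave-one-out."""
    n = len(xs)
    correct = 0
    for f in range(k):
        # Near-equal contiguous folds that together cover every index exactly once.
        lo, hi = f * n // k, (f + 1) * n // k
        train_x, train_y = xs[:lo] + xs[hi:], ys[:lo] + ys[hi:]
        for i in range(lo, hi):
            if predict(train_x, train_y, xs[i]) == ys[i]:
                correct += 1
    return correct / n


if __name__ == "__main__":
    xs, ys = alternating_dataset(10)
    for name, predict in [("majority", majority_predict), ("1-NN", one_nn_predict)]:
        print(f"{name}: train={training_accuracy(xs, ys, predict):.2f}", end="")
        for k in (2, 5, 10):
            print(f"  {k}-fold={k_fold_accuracy(xs, ys, k, predict):.2f}", end="")
        print()
```

On this toy data, 1-NN scores 1.00 on its own training set but 0.00 under leave-one-out (10-fold), because every point's nearest remaining neighbour carries the opposite label; its 2-fold and 5-fold scores are 0.40 and 0.20. The majority-vote baseline likewise falls from 0.50 on the training data to 0.00 under leave-one-out, since removing one point always makes the opposite class the majority. Inverting the 1-NN prediction would score 1.00 under leave-one-out here, which is one way to see how systematically below-chance behaviour can be exploited rather than discarded.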

* 2018 IEEE International Conference on Teaching, Assessment, and Learning for Engineering (TALE), pages 960-964
