TaylorImNet for Fast 3D Shape Reconstruction Based on Implicit Surface Function


Jan 18, 2022

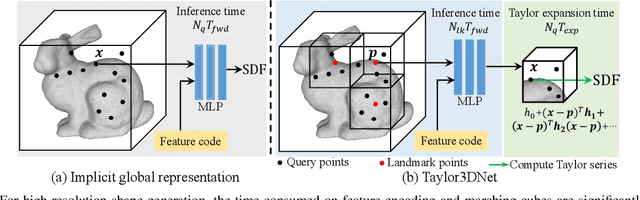

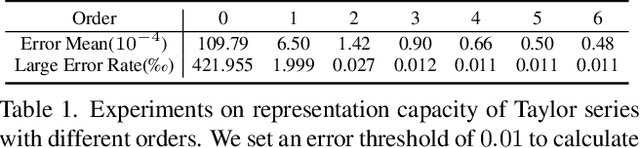

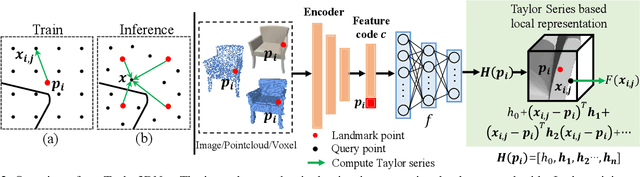

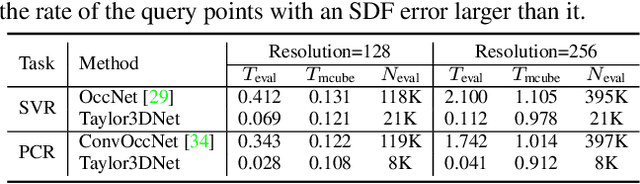

Because they represent shapes continuously, deep implicit functions can extract the iso-surface of a shape at arbitrary resolution. However, using a neural network with a large number of parameters as the implicit function slows the generation of high-resolution shapes, because a large number of query points must be forwarded through the network. In this work, we propose TaylorImNet, an implicit 3D shape representation inspired by the Taylor series. TaylorImNet uses a set of discrete expansion points and their corresponding Taylor series to model a continuous implicit shape field. Once the expansion points and their coefficients are obtained, our model only needs to evaluate the Taylor series at each query point, and the number of expansion points is independent of the generation resolution. Based on this representation, TaylorImNet achieves a significantly faster generation speed than other baselines. We evaluate our approach on reconstruction tasks from various types of input, and the experimental results demonstrate that it achieves slightly better performance than existing state-of-the-art baselines while improving inference speed by a large margin.
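The evaluation step described in the abstract can be illustrated with a minimal NumPy sketch. It is not the authors' implementation. The abstract does not say how a query point is matched to an expansion point or which expansion order is used, so the sketch assumes nearest-point assignment and a second-order series. The names `taylor_field` and `sphere_coefficients` are hypothetical, and the coefficients come from a known analytic field rather than a trained network.

```python
import numpy as np

def taylor_field(queries, centers, f0, grad, hess):
    """Evaluate a piecewise second-order Taylor approximation of an implicit field.

    queries: (Q, 3) points to evaluate
    centers: (K, 3) expansion points
    f0:      (K,)   field value at each expansion point
    grad:    (K, 3) gradient at each expansion point
    hess:    (K, 3, 3) Hessian at each expansion point
    """
    # Assumption: each query uses its nearest expansion point.
    d2 = ((queries[:, None, :] - centers[None, :, :]) ** 2).sum(axis=-1)  # (Q, K)
    idx = d2.argmin(axis=1)                                               # (Q,)
    delta = queries - centers[idx]                                        # (Q, 3)
    linear = np.einsum("qi,qi->q", grad[idx], delta)
    quadratic = 0.5 * np.einsum("qi,qij,qj->q", delta, hess[idx], delta)
    return f0[idx] + linear + quadratic

def sphere_coefficients(centers):
    """Hypothetical stand-in for predicted coefficients: exact Taylor terms
    of the field f(x) = |x|^2 - 1, whose zero level set is the unit sphere."""
    f0 = (centers ** 2).sum(axis=-1) - 1.0
    grad = 2.0 * centers
    hess = np.broadcast_to(2.0 * np.eye(3), (centers.shape[0], 3, 3))
    return f0, grad, hess

rng = np.random.default_rng(0)
centers = rng.uniform(-1.0, 1.0, size=(8, 3))    # K = 8 expansion points
queries = rng.uniform(-1.0, 1.0, size=(1000, 3))  # resolution-dependent query set
values = taylor_field(queries, centers, *sphere_coefficients(centers))
```

The cost per query is a nearest-point lookup plus a few small tensor contractions, and `centers` keeps the same size however many query points are evaluated, which matches the abstract's point that the number of expansion points is independent of the generation resolution.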
