Task-aware Privacy Preservation for Multi-dimensional Data

Oct 05, 2021

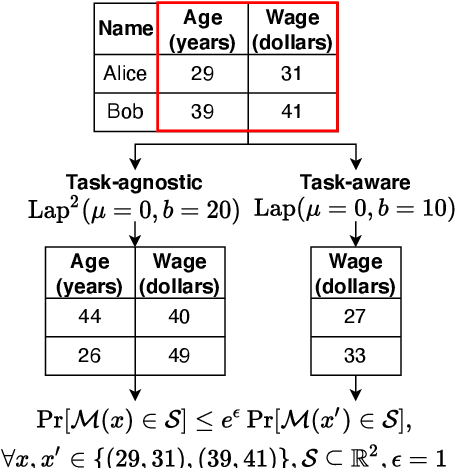

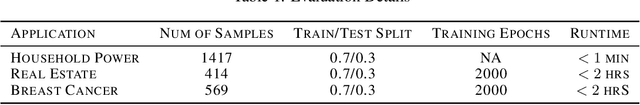

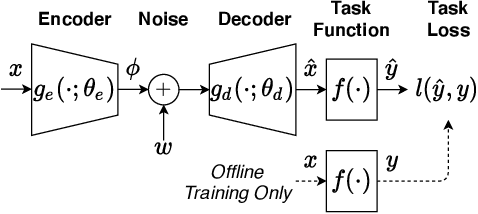

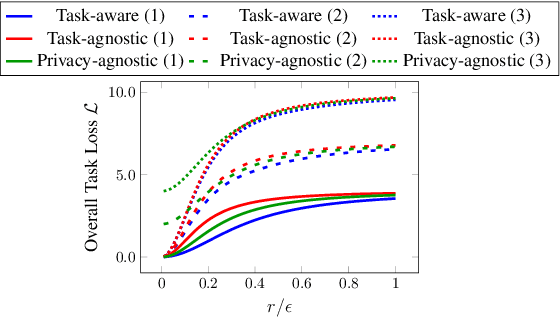

Local differential privacy (LDP), a state-of-the-art technique for privacy preservation, has been successfully deployed in a few real-world applications. In the future, LDP can be adopted to anonymize richer user data attributes that will be input to more sophisticated machine learning (ML) tasks. However, today's LDP approaches are largely task-agnostic and often lead to sub-optimal performance: they simply inject noise into all data attributes according to a given privacy budget, regardless of which features are most relevant to the ultimate task. In this paper, we address how to significantly improve the ultimate task performance for multi-dimensional user data by considering a task-aware privacy preservation problem. The key idea is to use an encoder-decoder framework to learn (and anonymize) a task-relevant latent representation of user data. For a linear setting with mean-squared error (MSE) task loss, we obtain an analytical near-optimal solution; for general nonlinear cases, we provide an approximate solution through a learning algorithm. Extensive experiments demonstrate that our task-aware approach significantly improves ultimate task accuracy compared to a standard benchmark LDP approach while guaranteeing the same level of privacy.
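
As a rough illustration of the gap between task-agnostic and task-aware noise injection, the toy sketch below compares the two on a linear task with MSE loss. It is not the paper's algorithm: the encoder is hand-picked as the task's own weight vector rather than learned, the Laplace mechanism is assumed as the LDP primitive, and every name (`laplace_mechanism`, `task_agnostic_estimate`, `task_aware_estimate`, `compare`) as well as the data range `[0, 1]` is an assumption made for this example.

```python
import numpy as np


def laplace_mechanism(values, l1_sensitivity, epsilon, rng):
    """Add Laplace noise with scale = sensitivity / epsilon to every entry."""
    scale = l1_sensitivity / epsilon
    return values + rng.laplace(loc=0.0, scale=scale, size=values.shape)


def task_agnostic_estimate(x, w, epsilon, rng):
    """Perturb all n attributes first, then evaluate the task y = x @ w.

    Each user vector lies in [0, 1]^n (assumption), so releasing the raw
    vector has L1 sensitivity n.
    """
    n = x.shape[1]
    x_private = laplace_mechanism(x, l1_sensitivity=n, epsilon=epsilon, rng=rng)
    return x_private @ w


def task_aware_estimate(x, w, epsilon, rng):
    """Encode each user to a 1-D task-relevant latent, then perturb that.

    The hand-picked linear encoder is z = x @ w. For x in [0, 1]^n its
    L1 sensitivity is sum(|w|). The decoder is the identity.
    """
    z = x @ w
    sensitivity = np.abs(w).sum()
    return laplace_mechanism(z, l1_sensitivity=sensitivity, epsilon=epsilon, rng=rng)


def compare(n=8, m=20000, epsilon=1.0, seed=0):
    """Return (task-agnostic MSE, task-aware MSE) on synthetic data."""
    rng = np.random.default_rng(seed)
    x = rng.uniform(0.0, 1.0, size=(m, n))  # m users, n attributes
    w = np.zeros(n)
    w[:2] = 0.5                             # task uses only 2 attributes
    y = x @ w                               # noise-free task output
    mse_agnostic = np.mean((task_agnostic_estimate(x, w, epsilon, rng) - y) ** 2)
    mse_aware = np.mean((task_aware_estimate(x, w, epsilon, rng) - y) ** 2)
    return mse_agnostic, mse_aware


if __name__ == "__main__":
    agnostic, aware = compare()
    print(f"task-agnostic MSE: {agnostic:.2f}")
    print(f"task-aware MSE:    {aware:.2f}")
```

Both releases use the same privacy budget `epsilon` per user. Under these assumptions the expected error is 2(n/ε)²‖w‖₂² = 64 for the task-agnostic release and 2(‖w‖₁/ε)² = 2 for the task-aware one, so the measured values should land near those figures. The gap arises because the task-agnostic release spends budget on attributes the task never reads.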
