Task Allocation with Load Management in Multi-Agent Teams


Jul 17, 2022

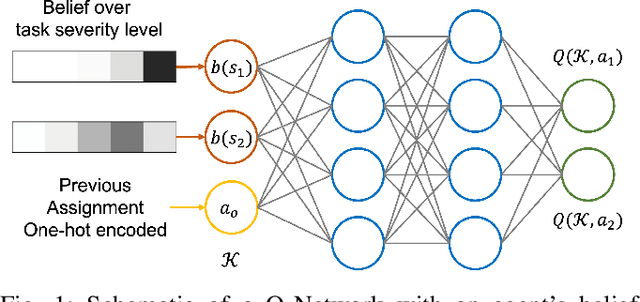

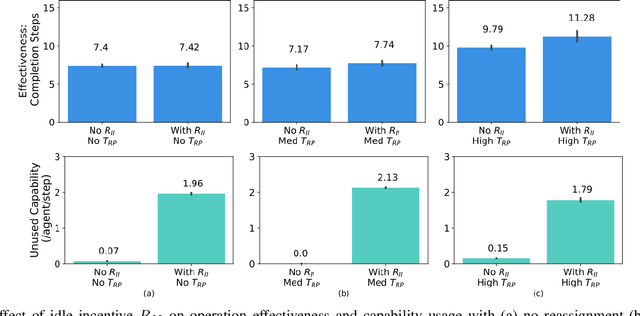

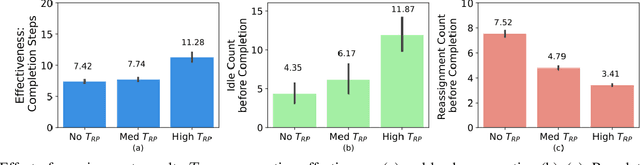

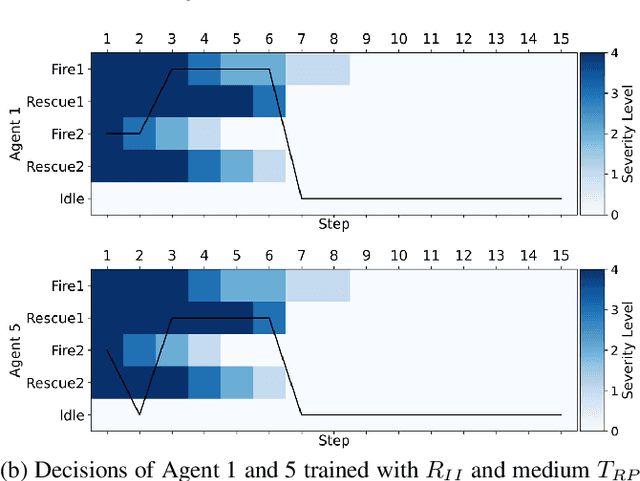

In operations of multi-agent teams ranging from homogeneous robot swarms to heterogeneous human-autonomy teams, unexpected events might occur. While operational efficiency is the primary objective in multi-agent task allocation problems, it is essential that the decision-making framework be intelligent enough to manage unexpected task load with limited resources. Otherwise, operational effectiveness would plummet as overloaded agents face unforeseen risks. In this work, we present a decision-making framework for multi-agent teams to learn task allocation with the consideration of load management through decentralized reinforcement learning, where idling is encouraged and unnecessary resource usage is avoided. We illustrate the effect of load management on team performance and explore agent behaviors in example scenarios. Furthermore, a measure of agent importance in collaboration is developed to infer team resilience when handling potential overload situations.
