Target Network and Truncation Overcome The Deadly Triad in $Q$-Learning


Mar 05, 2022

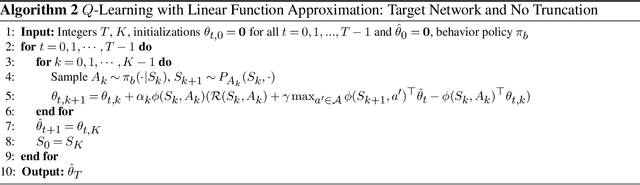

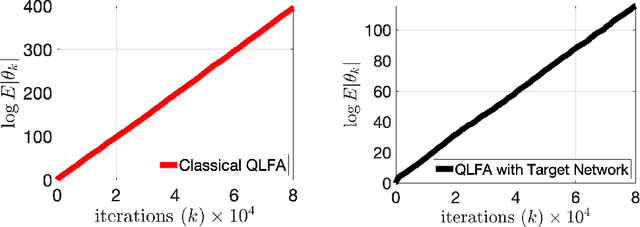

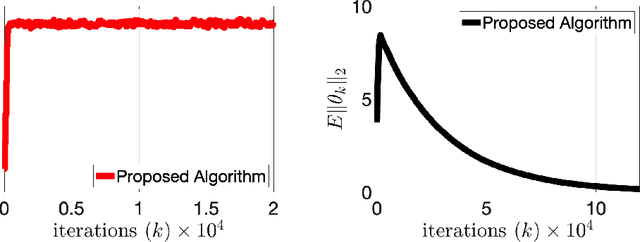

$Q$-learning with function approximation is one of the most empirically successful, yet theoretically poorly understood, reinforcement learning (RL) algorithms, and Sutton (1999) identified it as one of the most important open theoretical problems in the RL community. Even in the basic setting of linear function approximation, there are well-known examples in which it diverges. In this work, we propose a stable design for $Q$-learning with linear function approximation that uses a target network and truncation, and we establish finite-sample guarantees for it. Our result implies an $\mathcal{O}(\epsilon^{-2})$ sample complexity, up to a function approximation error. This is the first variant of $Q$-learning with linear function approximation that is provably stable without requiring strong assumptions or modifying the problem parameters, and it achieves the optimal sample complexity.
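To make the two ingredients concrete, here is a minimal sketch of linear $Q$-learning with a target network and truncation. It assumes a finite MDP with deterministic rewards that we can sample from, a fixed behavior policy, and a constant step size. It also assumes that "truncation" means clipping the target network's $Q$-estimates to $[-r_{\max}/(1-\gamma),\, r_{\max}/(1-\gamma)]$, the range that every true $Q$-value lies in. The function and parameter names (`q_learning_target_truncation`, `n_outer`, `n_inner`, `behavior`) are hypothetical, and the step-size schedule, sampling scheme and warm start are illustrative choices, not the authors' exact algorithm.

```python
import random
from typing import Callable, List, Sequence

Vector = List[float]


def truncate(x: float, bound: float) -> float:
    """Clip a scalar Q-value estimate to the interval [-bound, bound]."""
    return max(-bound, min(bound, x))


def dot(u: Sequence[float], v: Sequence[float]) -> float:
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def q_learning_target_truncation(
    phi: List[List[Vector]],    # phi[s][a] -> feature vector of (s, a)
    P: List[List[Vector]],      # P[s][a] -> next-state probabilities
    R: List[List[float]],       # R[s][a] -> deterministic reward (assumption)
    gamma: float,
    behavior: Callable[[int, random.Random], int],  # hypothetical behavior policy
    n_outer: int = 30,          # number of target-network syncs
    n_inner: int = 200,         # TD steps between syncs
    alpha: float = 0.1,         # constant step size (illustrative)
    seed: int = 0,
) -> Vector:
    assert 0.0 <= gamma < 1.0
    rng = random.Random(seed)
    n_states, n_actions = len(P), len(P[0])
    d = len(phi[0][0])
    r_max = max(abs(r) for row in R for r in row)
    bound = r_max / (1.0 - gamma)   # true Q-values lie in [-bound, bound]

    theta_target = [0.0] * d        # frozen "target network" weights
    s = rng.randrange(n_states)
    for _ in range(n_outer):
        theta = list(theta_target)  # inner iterate; warm start is an assumption
        for _ in range(n_inner):
            a = behavior(s, rng)
            s_next = rng.choices(range(n_states), weights=P[s][a])[0]
            # Bootstrap target uses the FROZEN weights, truncated to [-bound, bound].
            q_next = max(
                truncate(dot(phi[s_next][b], theta_target), bound)
                for b in range(n_actions)
            )
            td_error = R[s][a] + gamma * q_next - dot(phi[s][a], theta)
            theta = [w + alpha * td_error * f for w, f in zip(theta, phi[s][a])]
            s = s_next
        theta_target = theta        # sync the target network once per outer loop
    return theta_target


if __name__ == "__main__":
    # One state, two actions, one-hot features; for gamma = 0.5, Q* = [2.0, 1.0].
    phi = [[[1.0, 0.0], [0.0, 1.0]]]
    P = [[[1.0], [1.0]]]
    R = [[1.0, 0.0]]
    theta = q_learning_target_truncation(
        phi, P, R, gamma=0.5, behavior=lambda s, rng: rng.randrange(2)
    )
    print([round(w, 3) for w in theta])  # prints [2.0, 1.0]
```

In this sketch, freezing the bootstrap weights during each inner loop means the TD updates chase a fixed target instead of one that moves with every step. Truncation keeps that target bounded even when the linear estimate of $Q$ at the next state is far off. With one-hot features the method reduces to a sampled form of value iteration, which is why the toy example recovers $Q^*$.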
