TArC: Tunisian Arabish Corpus, First Complete Release

Paper and Code

Jul 11, 2022

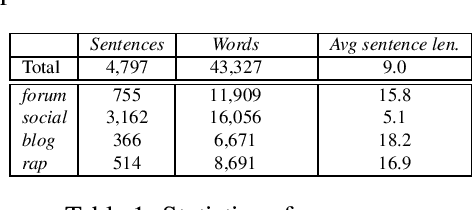

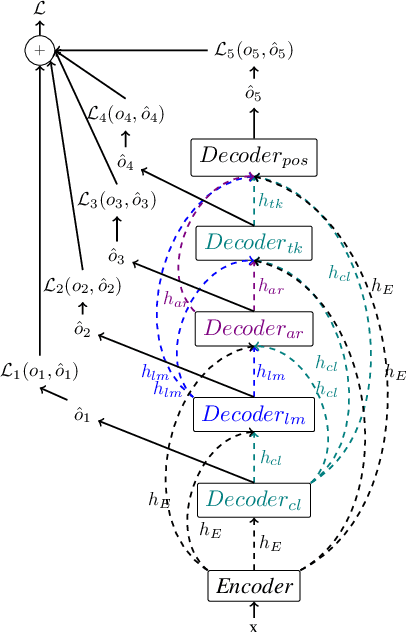

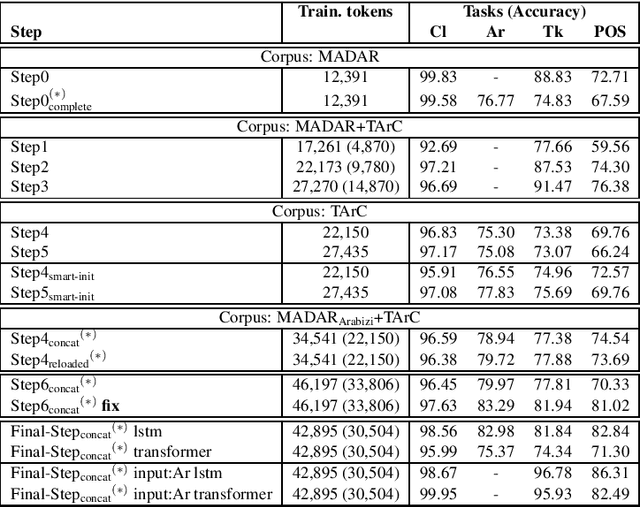

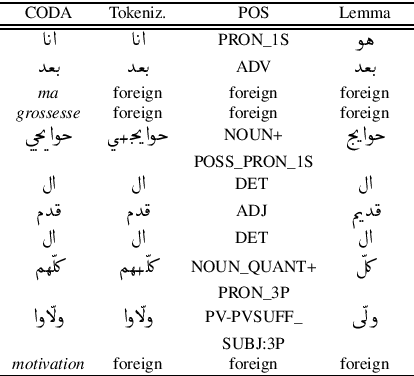

In this paper, we present the final result of a project on Tunisian Arabic encoded in Arabizi, the Latin-based writing system used in digital conversations. The project led to the creation of two integrated and independent resources: a corpus, and an NLP tool created to annotate it with various levels of linguistic information: word classification, transliteration, tokenization, POS-tagging and lemmatization. We discuss our choices in terms of computational and linguistic methodology and the strategies adopted to improve our results. We report on the experiments performed in order to outline our research path. Finally, we explain why we believe in the potential of these resources for both computational and linguistic research.

Keywords: Tunisian Arabizi, Annotated Corpus, Neural Network Architecture
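The abstract lists five annotation levels attached to each token. As a minimal sketch, assuming a one-token-per-line, tab-separated layout (an assumption: the abstract does not describe TArC's actual file format), a single annotated token could be modeled as below. The class name `AnnotatedToken`, the column order, the word-class labels, the `+` segmentation convention and the POS tags are all hypothetical and chosen only for illustration.

```python
from dataclasses import astuple, dataclass


# Hypothetical record: one Arabizi token carrying the annotation levels named
# in the abstract. Field names, column order and tag values are illustrative
# assumptions, not the real TArC format or tag set.
@dataclass
class AnnotatedToken:
    form: str             # token as written in Arabizi (Latin script)
    word_class: str       # word classification, e.g. "arabizi" vs "foreign" (assumed labels)
    transliteration: str  # Arabic-script transliteration of the form
    tokenization: str     # clitic segmentation, "+" as separator (assumed convention)
    pos: str              # POS tag(s), one per segment (hypothetical tag set)
    lemma: str            # lemma in Arabic script

    def to_tsv(self) -> str:
        """Serialize the token as one tab-separated line, one column per level."""
        return "\t".join(astuple(self))


def to_tsv_block(tokens: list[AnnotatedToken]) -> str:
    """Serialize a sentence as one annotated token per line."""
    return "\n".join(t.to_tsv() for t in tokens)


if __name__ == "__main__":
    # Illustrative only, not taken from the corpus: Tunisian "3andi"
    # ("I have"), where the digit 3 stands for the letter ع.
    tok = AnnotatedToken(
        form="3andi",
        word_class="arabizi",
        transliteration="عندي",
        tokenization="عند+ي",
        pos="PREP+PRON",
        lemma="عند",
    )
    print(to_tsv_block([tok]))
```

Keeping every level as a separate column makes it easy to train or evaluate one annotation layer at a time while still storing all layers together; whether TArC itself is organized this way is not stated in the abstract.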
