Tailoring Personality Traits in Large Language Models via Unsupervisedly-Built Personalized Lexicons

Oct 25, 2023

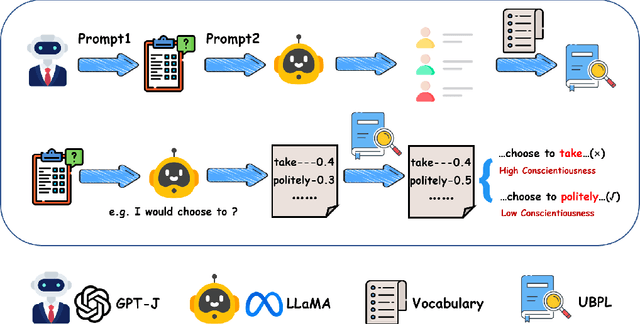

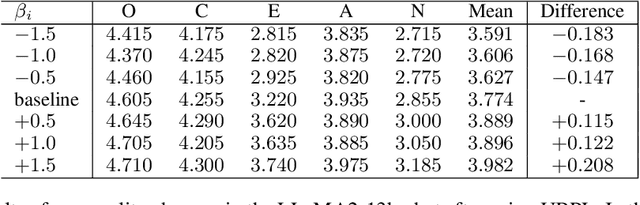

Personality plays a pivotal role in shaping human expression patterns, and equipping large language models (LLMs) with controllable personality traits holds significant promise for enhancing their user experience. However, prior approaches either fine-tune LLMs on a corpus enriched with personalized expressions or require manually crafted prompts to induce personalized responses. The former demands substantial time and resources to collect sufficient training examples, while the latter may fail to manipulate personality traits precisely at a fine-grained level (e.g., achieving high agreeableness while reducing openness). In this study, we introduce a novel approach for tailoring personality traits within LLMs that allows any combination of the Big Five factors (i.e., openness, conscientiousness, extraversion, agreeableness, and neuroticism) to be incorporated in a pluggable manner. This is achieved by employing a set of Unsupervisedly-Built Personalized Lexicons (UBPL) to adjust the probability of the next token predicted by the original LLM during the decoding phase. The adjustment encourages the model to generate words present in the personalized lexicons while preserving the naturalness of the generated text. Extensive experiments demonstrate the effectiveness of our approach in finely manipulating LLMs' personality traits. Furthermore, because it operates only at decoding time, our method can be seamlessly integrated into other LLMs without updating their parameters.
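To make the decoding-time idea concrete, the following is a minimal sketch of lexicon-based next-token adjustment. It is not the paper's actual formulation: the additive logit bonus, the function name `adjust_logits`, the toy vocabulary, the lexicon contents, and the per-trait weights are all assumptions chosen for illustration. A positive weight raises a trait and a negative weight lowers it, which mirrors the "high agreeableness, reduced openness" example above.

```python
import math


def softmax(logits):
    """Convert raw logits into a probability distribution."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def adjust_logits(logits, vocab, lexicons, weights):
    """Add a per-trait bonus to the logit of each token found in that trait's lexicon.

    This additive rule is an assumed stand-in for the paper's adjustment,
    which the abstract does not specify.
    """
    adjusted = list(logits)
    for i, token in enumerate(vocab):
        for trait, weight in weights.items():
            if token in lexicons.get(trait, set()):
                adjusted[i] += weight
    return adjusted


# Hypothetical toy data: a four-token vocabulary and two tiny lexicons.
vocab = ["fine", "wonderful", "curious", "whatever"]
logits = [2.0, 1.0, 0.5, 0.0]
lexicons = {"agreeableness": {"wonderful"}, "openness": {"curious"}}
weights = {"agreeableness": 1.5, "openness": -1.0}  # raise one trait, lower another

base = softmax(logits)
steered = softmax(adjust_logits(logits, vocab, lexicons, weights))

for token, p_before, p_after in zip(vocab, base, steered):
    print(f"{token:10s} {p_before:.3f} -> {p_after:.3f}")
```

Because the adjustment touches only the next-token scores, the underlying model's parameters stay fixed, and tokens outside every lexicon keep their original relative ranking. This is one way to read the abstract's claim that the method is pluggable and preserves naturalness.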
