TabM: Advancing Tabular Deep Learning with Parameter-Efficient Ensembling


Oct 31, 2024

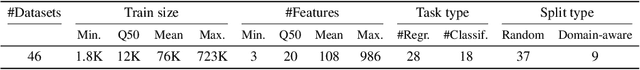

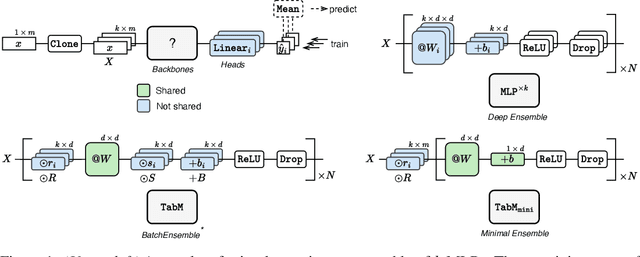

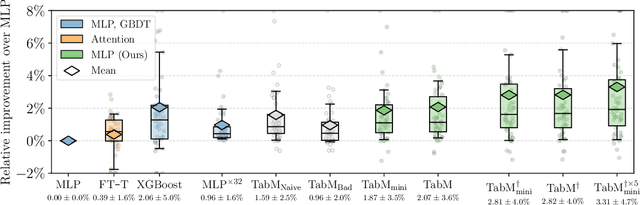

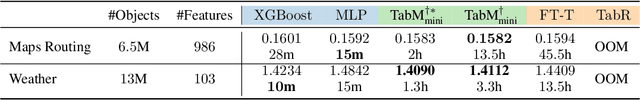

Deep learning architectures for supervised learning on tabular data range from simple multilayer perceptrons (MLPs) to sophisticated Transformers and retrieval-augmented methods. This study highlights a major yet so far overlooked opportunity for substantially improving tabular MLPs: parameter-efficient ensembling -- a paradigm for implementing an ensemble of models as one model producing multiple predictions. We start by developing TabM -- a simple model based on MLP and our variations of BatchEnsemble (an existing technique). Then, we perform a large-scale evaluation of tabular DL architectures on public benchmarks in terms of both task performance and efficiency, which renders the landscape of tabular DL in a new light. Generally, we show that MLPs, including TabM, form a line of stronger and more practical models compared to attention- and retrieval-based architectures. In particular, we find that TabM demonstrates the best performance among tabular DL models. Lastly, we conduct an empirical analysis of the ensemble-like nature of TabM. For example, we observe that the multiple predictions of TabM are weak individually, but powerful collectively. Overall, our work brings an impactful technique to tabular DL, analyses its behaviour, and advances the performance-efficiency trade-off with TabM -- a simple and powerful baseline for researchers and practitioners.
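To make "an ensemble of models as one model producing multiple predictions" concrete, here is a minimal NumPy sketch of a single BatchEnsemble-style linear layer: one weight matrix is shared by all k members, and each member has only its own rank-1 scaling vectors and bias. This illustrates the general BatchEnsemble idea only. It is not TabM's implementation, whose variations are not described in the abstract, and the names `batch_ensemble_linear`, `param_counts`, `R`, `S`, and `B` are assumptions made for this example.

```python
import numpy as np


def batch_ensemble_linear(x, W, R, S, B):
    """BatchEnsemble-style linear layer (illustrative sketch, not TabM's code).

    x: (batch, k, d_in)  one copy of the input per ensemble member
    W: (d_in, d_out)     weight matrix shared by all k members
    R: (k, d_in)         per-member input scaling vectors
    S: (k, d_out)        per-member output scaling vectors
    B: (k, d_out)        per-member biases
    Returns an array of shape (batch, k, d_out): one prediction per member.
    """
    return ((x * R) @ W) * S + B


def param_counts(d_in, d_out, k):
    """Parameters of the shared layer vs. k fully independent linear layers."""
    shared = d_in * d_out + k * (d_in + 2 * d_out)
    independent = k * (d_in * d_out + d_out)
    return shared, independent


rng = np.random.default_rng(0)
batch, k, d_in, d_out = 4, 3, 5, 2
x = rng.normal(size=(batch, d_in))
W = rng.normal(size=(d_in, d_out))
R = rng.normal(size=(k, d_in))
S = rng.normal(size=(k, d_out))
B = rng.normal(size=(k, d_out))

# Feed the same input to every member, then average the k predictions.
x_k = np.repeat(x[:, None, :], k, axis=1)       # (batch, k, d_in)
preds = batch_ensemble_linear(x_k, W, R, S, B)  # (batch, k, d_out)
ensemble_pred = preds.mean(axis=1)              # (batch, d_out)

# Each member behaves like an ordinary linear layer whose weight is the
# shared W multiplied elementwise by the rank-1 matrix outer(R[i], S[i]).
for i in range(k):
    W_i = W * np.outer(R[i], S[i])
    assert np.allclose(preds[:, i], x @ W_i + B[i])
```

Under these assumptions, the k members cost roughly one weight matrix plus a few vectors each, rather than k full matrices; `param_counts(512, 512, 32)` shows the gap for a layer of moderate width. Averaging the member outputs, as in `ensemble_pred`, is one simple way to combine the multiple predictions.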
