Synthesized Speech Detection Using Convolutional Transformer-Based Spectrogram Analysis


May 03, 2022

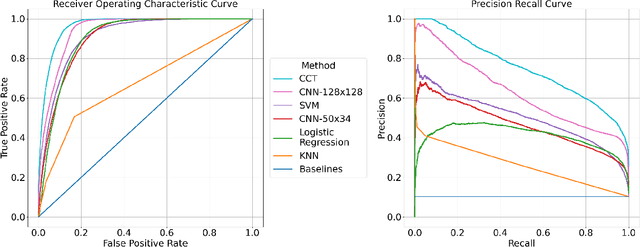

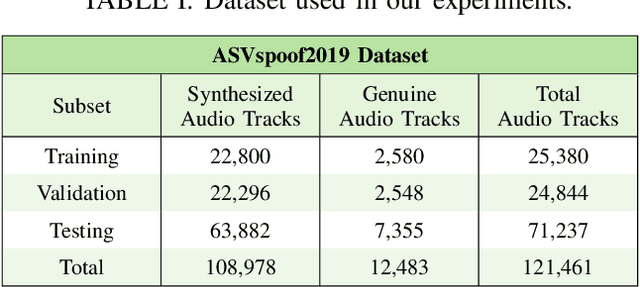

Synthesized speech is common today due to the prevalence of virtual assistants, easy-to-use tools for generating and modifying speech signals, and remote work practices. Synthesized speech can also be used for nefarious purposes, such as fabricating a speech signal and attributing it to someone who never spoke its content. We therefore need methods to detect whether a speech signal is synthesized. In this paper, we analyze speech signals in the form of spectrograms with a Compact Convolutional Transformer (CCT) for synthesized speech detection. A CCT uses a convolutional layer that introduces inductive biases and shared weights into the network, allowing a transformer architecture to perform well with fewer training samples. The CCT also uses an attention mechanism to incorporate information from all parts of the signal under analysis. We demonstrate that our CCT approach, trained on both genuine and synthesized human voice signals, successfully differentiates between genuine and synthesized speech.
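The pipeline described in the abstract (spectrogram, convolutional tokenization, attention over all tokens, classification) can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the frame length, hop size, kernel size, embedding width, single attention layer, and all function names are hypothetical choices made for illustration, and layer normalization, the feed-forward sublayers, and multi-head attention are omitted. The weights are random and untrained, so the output probabilities carry no meaning, and which output index stands for "genuine" or "synthesized" is likewise an assumption.

```python
import numpy as np

def spectrogram(signal, frame_len=256, hop=128):
    """Log-magnitude spectrogram from a Hann-windowed short-time Fourier transform."""
    window = np.hanning(frame_len)
    n_frames = 1 + (len(signal) - frame_len) // hop
    frames = np.stack([signal[i * hop:i * hop + frame_len] * window
                       for i in range(n_frames)])
    mag = np.abs(np.fft.rfft(frames, axis=1))   # (n_frames, frame_len // 2 + 1)
    return np.log1p(mag).T                      # (freq_bins, n_frames)

def conv_tokenizer(image, kernels, stride=4):
    """Slide the same kernels over the image; each position yields one token.
    Reusing the kernels everywhere is the weight sharing of a convolution."""
    _, kh, kw = kernels.shape
    height, width = image.shape
    tokens = []
    for r in range(0, height - kh + 1, stride):
        for c in range(0, width - kw + 1, stride):
            patch = image[r:r + kh, c:c + kw]
            response = np.tensordot(kernels, patch, axes=([1, 2], [0, 1]))
            tokens.append(np.maximum(0.0, response))  # ReLU
    return np.array(tokens)                     # (n_tokens, n_kernels)

def softmax(x, axis=-1):
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(x, wq, wk, wv):
    """Single-head scaled dot-product attention with a residual connection.
    Every token attends to every other token, i.e. to all parts of the signal."""
    q, k, v = x @ wq, x @ wk, x @ wv
    weights = softmax(q @ k.T / np.sqrt(q.shape[-1]))  # (n_tokens, n_tokens)
    return x + weights @ v

def sequence_pool(x, w_pool):
    """Attention-weighted average of the tokens, producing one vector."""
    alpha = softmax(x @ w_pool, axis=0)         # (n_tokens, 1)
    return (alpha * x).sum(axis=0)              # (d,)

def init_params(d=8, kernel=8, seed=0):
    """Random, untrained weights (illustration only)."""
    rng = np.random.default_rng(seed)
    return {
        "kernels": rng.normal(0.0, 0.1, (d, kernel, kernel)),
        "wq": rng.normal(0.0, 0.1, (d, d)),
        "wk": rng.normal(0.0, 0.1, (d, d)),
        "wv": rng.normal(0.0, 0.1, (d, d)),
        "w_pool": rng.normal(0.0, 0.1, (d, 1)),
        "w_out": rng.normal(0.0, 0.1, (d, 2)),
    }

def cct_forward(signal, params):
    """Return two class probabilities for one waveform."""
    spec = spectrogram(signal)
    tokens = conv_tokenizer(spec, params["kernels"])
    tokens = self_attention(tokens, params["wq"], params["wk"], params["wv"])
    pooled = sequence_pool(tokens, params["w_pool"])
    return softmax(pooled @ params["w_out"])

# Stand-in input: random noise in place of a real speech waveform.
signal = np.random.default_rng(1).normal(size=4000)
params = init_params()
probs = cct_forward(signal, params)
```

In this sketch the convolutional tokenizer replaces the fixed, non-overlapping patch embedding of a plain vision transformer, which is the source of the inductive bias mentioned above. Training such a model would additionally require a loss function, labeled genuine and synthesized recordings, and gradient-based optimization, none of which are shown here.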
