Syntax-driven Iterative Expansion Language Models for Controllable Text Generation


Apr 05, 2020

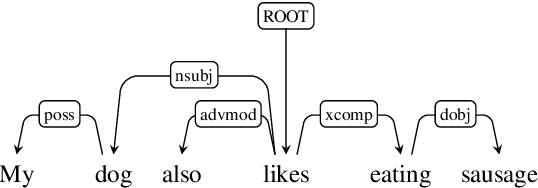

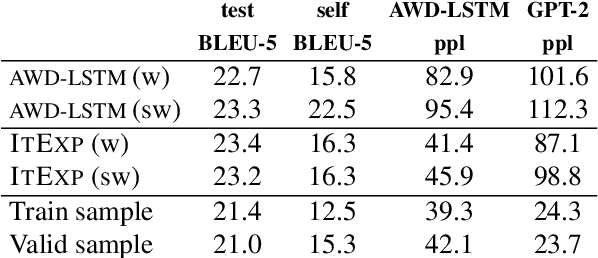

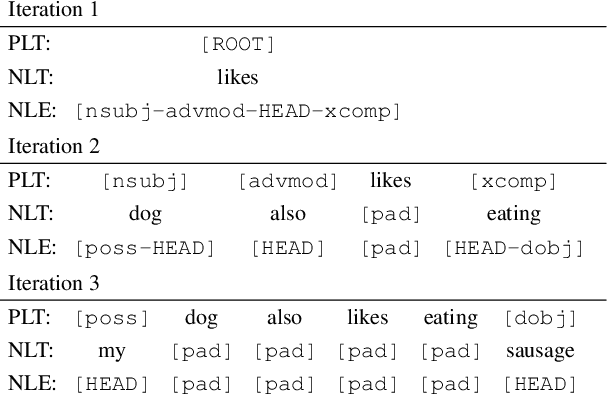

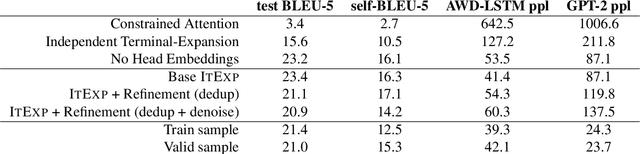

The dominant language modeling paradigms handle text as a sequence of discrete tokens. While these approaches can capture the latent structure of the text, they are inherently constrained to sequential dynamics for text generation. We propose a new paradigm for introducing a syntactic inductive bias into neural language modeling and text generation, in which the dependency parse tree drives a Transformer model to generate sentences iteratively: starting from a root placeholder, the model generates the tokens of the different dependency tree branches in parallel, using either word or subword vocabularies. Our experiments show that this paradigm is effective for text generation, with quality and diversity comparable or superior to those of sequential baselines, and that its inherently controllable generation process enables control over the output syntactic constructions, allowing the induction of stylistic variations.
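The iterative, root-first expansion described above can be illustrated with a minimal sketch under a toy assumption: the Transformer that predicts each expansion is replaced by a hypothetical hand-written rule table keyed on dependency labels. The names `Placeholder`, `expand_step`, `generate`, `table_expander`, `RULES`, and `CONTROLLED` are illustrative only and do not come from the authors' code. Each placeholder carries a dependency label and is expanded into its head token plus placeholders for its dependents, in surface order; every placeholder present at a given step is expanded in that same step, mirroring how the different tree branches grow in parallel.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Union


@dataclass(frozen=True)
class Placeholder:
    """An unexpanded dependency-tree node, tagged with its (hypothetical) relation label."""
    label: str


Item = Union[str, Placeholder]
# Stand-in for the learned model: maps a placeholder to its expansion,
# i.e. the head token plus placeholders for its dependents, in surface order.
Expander = Callable[[Placeholder], List[Item]]


def table_expander(rules: Dict[str, List[Item]]) -> Expander:
    """Hypothetical expander that looks the expansion up by dependency label."""
    return lambda p: rules[p.label]


def expand_step(seq: List[Item], expander: Expander) -> List[Item]:
    """Expand every placeholder in one step; expansions are independent of each other."""
    out: List[Item] = []
    for item in seq:
        if isinstance(item, Placeholder):
            out.extend(expander(item))
        else:
            out.append(item)
    return out


def generate(expander: Expander, max_iters: int = 10) -> List[List[Item]]:
    """Start from a root placeholder and iterate until only tokens remain."""
    seq: List[Item] = [Placeholder("root")]
    history = [seq]
    for _ in range(max_iters):
        if not any(isinstance(x, Placeholder) for x in seq):
            break
        seq = expand_step(seq, expander)
        history.append(seq)
    return history


def render(seq: List[Item]) -> str:
    """Show tokens as-is and placeholders as <label>."""
    return " ".join(x if isinstance(x, str) else f"<{x.label}>" for x in seq)


# Toy rule table standing in for the model's predictions (assumption).
RULES: Dict[str, List[Item]] = {
    "root": [Placeholder("nsubj"), "ate", Placeholder("obj")],
    "nsubj": [Placeholder("det"), "cat"],
    "obj": [Placeholder("det"), "fish"],
    "det": ["the"],
}

# Same table, but the object is forced to take an adjectival modifier.
CONTROLLED: Dict[str, List[Item]] = {
    **RULES,
    "obj": [Placeholder("det"), Placeholder("amod"), "fish"],
    "amod": ["fresh"],
}

if __name__ == "__main__":
    for step in generate(table_expander(RULES)):
        print(render(step))
    # <root>
    # <nsubj> ate <obj>
    # <det> cat ate <det> fish
    # the cat ate the fish
    print(render(generate(table_expander(CONTROLLED))[-1]))
    # the cat ate the fresh fish
```

Swapping `RULES` for `CONTROLLED`, which requires an `amod` dependent on the object, changes the output from "the cat ate the fish" to "the cat ate the fresh fish". This hints at how constraining the predicted dependency structure can steer the syntactic construction of the output, although the approach described above relies on a learned Transformer rather than a fixed table.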
