Surrogate-assisted Bayesian inversion for landscape and basin evolution models

Paper and Code

Dec 12, 2018

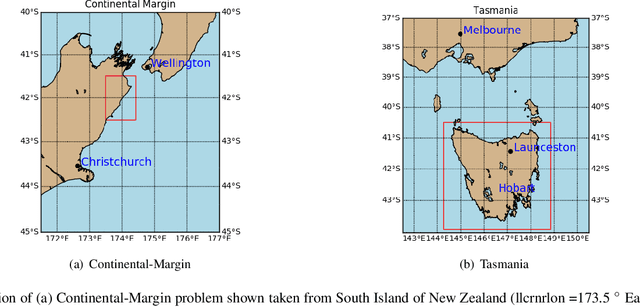

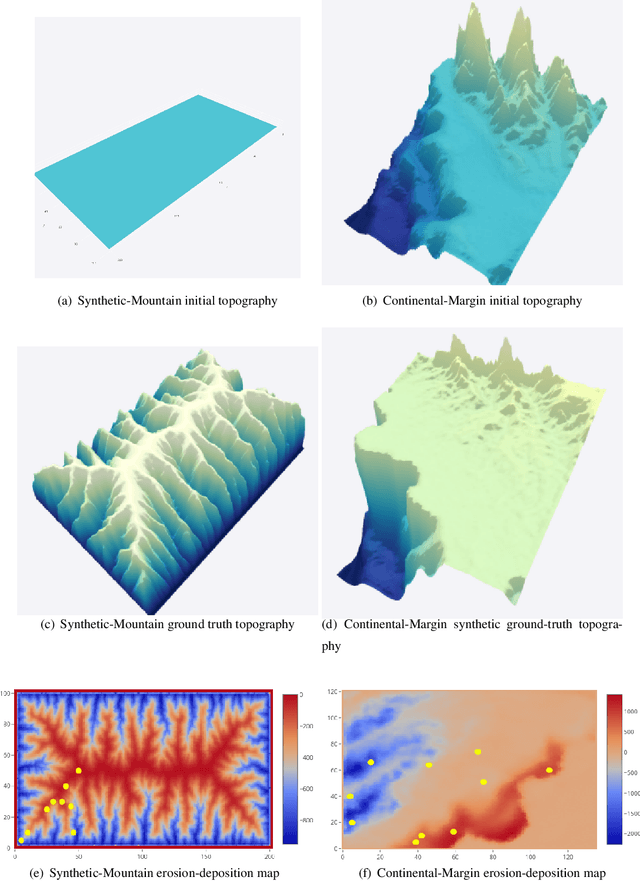

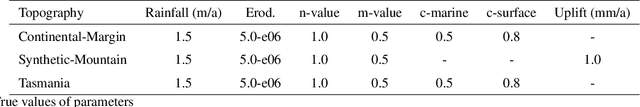

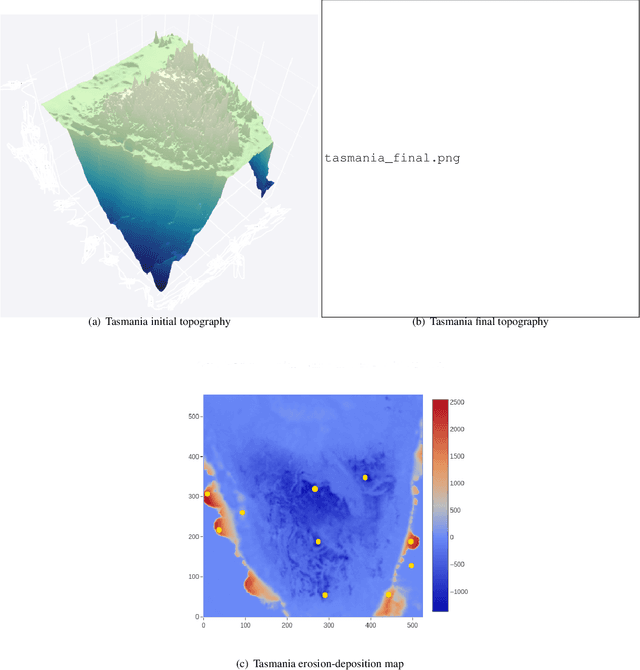

The complex and computationally expensive features of forward landscape and sedimentary basin evolution models pose a major challenge in the development of efficient inference and optimization methods. Bayesian inference provides a methodology for estimation and uncertainty quantification of free model parameters. In our previous work, parallel tempering Bayeslands was developed as a framework for parameter estimation and uncertainty quantification for the landscape and basin evolution modelling software Badlands. Parallel tempering Bayeslands features high-performance computing, with dozens of processing cores running in parallel to enhance computational efficiency. Even with parallel computing, the procedure remains computationally challenging since thousands of samples need to be drawn and evaluated. In large-scale landscape and basin evolution problems, a single model evaluation can take from several minutes to hours and, in certain cases, even days. Surrogate-assisted optimization has been successfully applied to a number of engineering problems. This motivates its use in optimization and inference methods suited for complex models in geology and geophysics. Computationally inexpensive surrogates that mimic the expensive model can speed up parallel tempering Bayeslands. In this paper, we present an application of surrogate-assisted parallel tempering in which the surrogate mimics a landscape evolution model, including erosion, sediment transport and deposition, by estimating the likelihood function that is given by the model. We employ a machine learning model as a surrogate that learns from the samples generated by the parallel tempering algorithm. The results show that the methodology is effective in lowering the overall computational cost significantly while retaining the quality of solutions.
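The idea of replacing some likelihood evaluations with surrogate predictions can be illustrated with a minimal sketch. It is not the paper's implementation: it uses a single Metropolis chain rather than parallel tempering, a toy one-parameter Gaussian likelihood in place of Badlands, and a nearest-neighbour average in place of the machine learning surrogate. All names (`expensive_log_likelihood`, `NearestNeighbourSurrogate`, `surrogate_prob`, `warmup`) are hypothetical and chosen only for illustration.

```python
import math
import random


def expensive_log_likelihood(theta, observed=2.0, noise=0.5):
    """Toy stand-in for a costly forward model (assumption: not Badlands)."""
    simulated = theta  # identity "forward model" keeps the sketch cheap
    return -0.5 * ((simulated - observed) / noise) ** 2


class NearestNeighbourSurrogate:
    """Predicts a log-likelihood as the mean of the k closest stored samples."""

    def __init__(self, k=3):
        self.k = k
        self.samples = []  # (theta, log_likelihood) pairs from true evaluations

    def add(self, theta, log_lik):
        self.samples.append((theta, log_lik))

    def predict(self, theta):
        nearest = sorted(self.samples, key=lambda s: abs(s[0] - theta))[: self.k]
        return sum(ll for _, ll in nearest) / len(nearest)


def surrogate_assisted_metropolis(n_samples=2000, warmup=200,
                                  surrogate_prob=0.5, step=0.5, seed=0):
    rng = random.Random(seed)
    surrogate = NearestNeighbourSurrogate()
    theta = 0.0
    log_lik = expensive_log_likelihood(theta)
    surrogate.add(theta, log_lik)
    chain, true_evals = [], 1

    for i in range(n_samples):
        proposal = theta + rng.gauss(0.0, step)
        # After a warm-up of true evaluations, use the surrogate at random.
        use_surrogate = i >= warmup and rng.random() < surrogate_prob
        if use_surrogate:
            proposal_log_lik = surrogate.predict(proposal)
        else:
            proposal_log_lik = expensive_log_likelihood(proposal)
            surrogate.add(proposal, proposal_log_lik)  # grow the training set
            true_evals += 1
        # Metropolis acceptance (flat prior, symmetric random-walk proposal).
        accept_prob = math.exp(min(0.0, proposal_log_lik - log_lik))
        if rng.random() < accept_prob:
            theta, log_lik = proposal, proposal_log_lik
        chain.append(theta)
    return chain, true_evals


chain, true_evals = surrogate_assisted_metropolis()
print(f"true model evaluations: {true_evals} of {len(chain) + 1}")
```

In this sketch the saving comes from `true_evals` being smaller than the number of proposals; the price is that surrogate error enters the acceptance step, which is why the surrogate is only trusted after a warm-up phase and keeps being updated with every true evaluation.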
