Surpassing Cosine Similarity for Multidimensional Comparisons: Dimension Insensitive Euclidean Metric (DIEM)


Jul 11, 2024

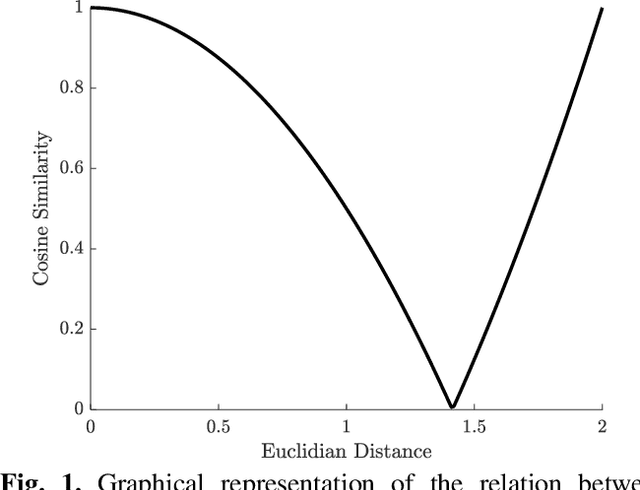

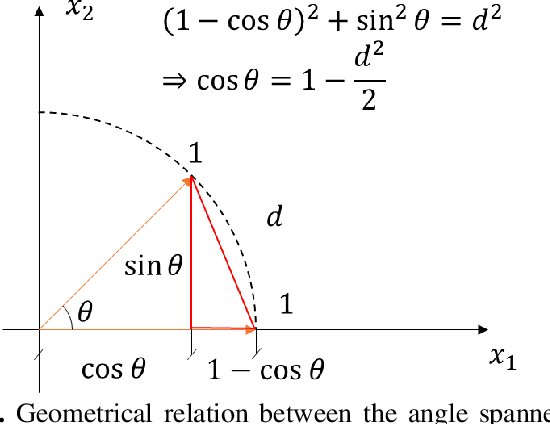

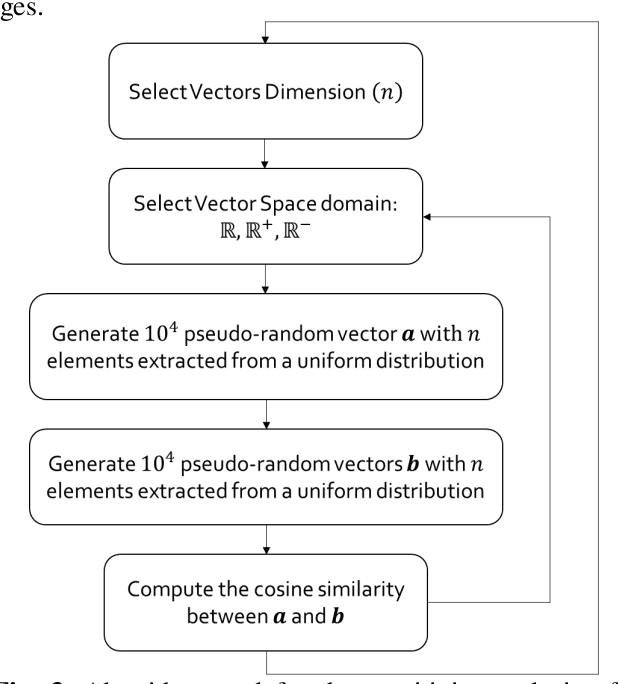

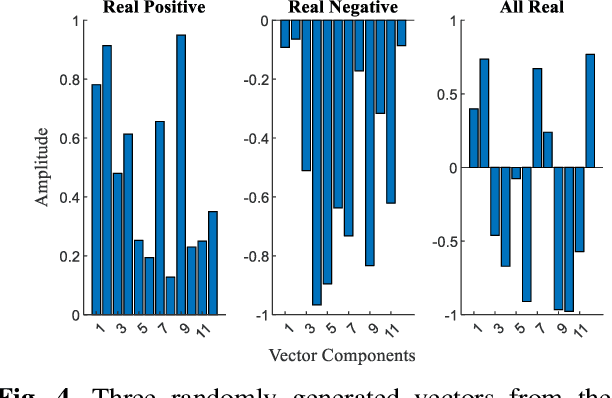

Advances in computational power and hardware efficiency have made it possible to tackle increasingly complex and high-dimensional problems. While artificial intelligence (AI) has achieved remarkable results in various scientific and technological fields, the interpretability of these high-dimensional solutions remains challenging. A critical issue in this context is the comparison of multidimensional quantities, which is essential in techniques like Principal Component Analysis (PCA), Singular Value Decomposition (SVD), and k-means clustering. Common metrics such as cosine similarity, Euclidean distance, and Manhattan distance are often used for such comparisons, for example when comparing muscular synergies of the human motor control system. However, their applicability and interpretability diminish as dimensionality increases. This paper provides a comprehensive analysis of the effects of dimensionality on these three widely used metrics. Our results reveal significant limitations of cosine similarity, particularly its dependency on the dimensionality of the vectors, which leads to biased and less interpretable outcomes. To address this, we introduce the Dimension Insensitive Euclidean Metric (DIEM), derived from the Euclidean distance, which demonstrates superior robustness and generalizability across varying dimensions. DIEM maintains consistent variability and eliminates the biases observed in traditional metrics, making it a more reliable tool for high-dimensional comparisons. This novel metric has the potential to replace cosine similarity, providing a more accurate and insightful method to analyze multidimensional data in fields ranging from neuromotor control to machine learning and deep learning.
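The dimensionality effect described in the abstract can be probed empirically. The following is a minimal sketch, not the paper's experiment and not an implementation of DIEM: it assumes vector entries drawn independently and uniformly from [0, 1], and the function name `metric_stats`, the sample size, and the seed are all hypothetical choices made for illustration. It reports the mean and standard deviation of cosine similarity, Euclidean distance, and Manhattan distance between random vector pairs at several dimensions.

```python
import numpy as np


def metric_stats(dim, n_pairs=2000, seed=0):
    """Mean and std of three metrics over random vector pairs of size `dim`.

    Assumption (not from the paper): entries are i.i.d. uniform on [0, 1].
    """
    rng = np.random.default_rng(seed)
    a = rng.uniform(0.0, 1.0, size=(n_pairs, dim))
    b = rng.uniform(0.0, 1.0, size=(n_pairs, dim))

    # Cosine similarity: dot product divided by the product of the norms.
    cosine = np.sum(a * b, axis=1) / (
        np.linalg.norm(a, axis=1) * np.linalg.norm(b, axis=1)
    )
    # Euclidean (L2) and Manhattan (L1) distances between each pair.
    euclidean = np.linalg.norm(a - b, axis=1)
    manhattan = np.sum(np.abs(a - b), axis=1)

    values = {"cosine": cosine, "euclidean": euclidean, "manhattan": manhattan}
    return {name: (float(v.mean()), float(v.std())) for name, v in values.items()}


if __name__ == "__main__":
    for dim in (2, 10, 100, 1000):
        stats = metric_stats(dim)
        summary = ", ".join(
            f"{name}: mean={mean:.3f} std={std:.3f}"
            for name, (mean, std) in stats.items()
        )
        print(f"dim={dim:5d}  {summary}")
```

Under these assumptions, the spread of cosine similarity between random vectors shrinks as the dimension grows, while the mean Euclidean and Manhattan distances increase with dimension, so a given value of any of the three metrics means something different at different dimensions. The exact definition of DIEM is given in the paper and is not reproduced here.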
