Support Relation Analysis for Objects in Multiple View RGB-D Images

May 10, 2019

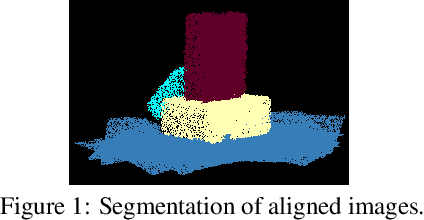

Understanding physical relations between objects, especially their support relations, is crucial for robotic manipulation. Prior work has reasoned about support relations and the structural stability of simple configurations in RGB-D images. In this paper, we propose a method that uses qualitative reasoning and intuitive physical models to extract more detailed physical knowledge from a set of RGB-D images of the same scene taken from different views. Rather than providing a simple contact relation graph and approximating stability over convex shapes, our method provides a detailed support relation analysis based on a volumetric representation. Specifically, it identifies true support relations between objects, for example whether an object supports another object by touching it on the side, or whether an object above contributes to the stability of the object below. We apply our method to real-world structures captured in warehouse scenarios and show that it works as intended.
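As a rough illustration of what a volumetric representation makes easy to compute, the toy sketch below takes objects given as sets of voxels and labels each touching pair as a vertical contact (one object resting directly on another) or a lateral contact (touching on the side). This is a hypothetical sketch, not the authors' algorithm: the integer voxel grid, the z-up axis convention, and the function name `contact_relations` are all assumptions. It only produces the kind of contact graph the abstract describes as a starting point. It does not decide whether a contact actually contributes to stability, which is what the paper's support analysis adds.

```python
from typing import Dict, Set, Tuple

# Assumed convention: integer (x, y, z) grid indices with z pointing up.
Voxel = Tuple[int, int, int]


def contact_relations(
    objects: Dict[str, Set[Voxel]],
) -> Tuple[Set[Tuple[str, str]], Set[Tuple[str, str]]]:
    """Hypothetical helper: find face-adjacent contacts between voxelized objects.

    Returns (vertical, lateral):
      vertical: (lower, upper) pairs where a voxel of `upper` sits directly
                on top of a voxel of `lower`.
      lateral:  alphabetically sorted (a, b) pairs whose voxels touch side by side.
    """
    # Map every occupied voxel to the object that owns it.
    owner: Dict[Voxel, str] = {}
    for name, voxels in objects.items():
        for v in voxels:
            if v in owner:
                raise ValueError(f"voxel {v} assigned to both {owner[v]} and {name}")
            owner[v] = name

    vertical: Set[Tuple[str, str]] = set()
    lateral: Set[Tuple[str, str]] = set()
    for (x, y, z), a in owner.items():
        # Vertical contact: the voxel directly above belongs to another object.
        above = owner.get((x, y, z + 1))
        if above is not None and above != a:
            vertical.add((a, above))
        # Lateral contact: checking only the +x and +y neighbours visits each
        # touching face pair exactly once.
        for dx, dy in ((1, 0), (0, 1)):
            other = owner.get((x + dx, y + dy, z))
            if other is not None and other != a:
                first, second = sorted((a, other))
                lateral.add((first, second))
    return vertical, lateral


if __name__ == "__main__":
    # Toy scene: box B rests on box A, and box C stands beside A.
    scene = {
        "A": {(0, 0, 0), (1, 0, 0)},
        "B": {(0, 0, 1)},
        "C": {(2, 0, 0)},
    }
    vertical, lateral = contact_relations(scene)
    print(sorted(vertical))  # [('A', 'B')]
    print(sorted(lateral))   # [('A', 'C')]
```

Contact is necessary but not sufficient for support. In this toy scene, A is a candidate supporter of B from below, and the A-C side contact may or may not carry load. Resolving such cases, including side support and an upper object stabilizing a lower one, requires the kind of physical reasoning the abstract describes rather than adjacency alone.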