SuPLE: Robot Learning with Lyapunov Rewards


Nov 20, 2024

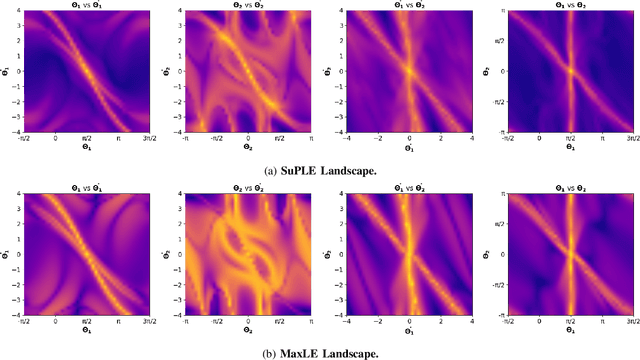

The reward function is an essential component in robot learning. The reward directly affects the sample and computational complexity of learning, as well as the quality of the solution. Designing informative rewards requires domain knowledge, which is not always available. We use the properties of the dynamics to produce a system-appropriate reward without adding external assumptions. Specifically, we explore an approach that uses the Lyapunov exponents of the system dynamics to generate a system-immanent reward. We demonstrate that the 'Sum of the Positive Lyapunov Exponents' (SuPLE) is a strong candidate for the design of such a reward. We develop a computational framework for the derivation of this reward, and demonstrate its effectiveness on classical benchmarks for sample-based stabilization of various dynamical systems. The SuPLE reward eliminates the need to start training trajectories at arbitrary states, a practice also known as auxiliary exploration. While auxiliary exploration is common in simulated robot learning, it is impractical on real robotic systems, which typically start from natural rest states (a pendulum at the bottom, a robot on the ground, etc.) and cannot easily be initialized at arbitrary states. Comparing the performance of SuPLE to commonly used reward functions, we observe that the latter fail to find a solution without auxiliary exploration, even for the task of swinging up the double pendulum and keeping it stable at the upright position, a prototypical scenario for multi-linked robots. SuPLE-induced rewards offer a novel route to effective robot learning in typical, as opposed to highly specialized or fine-tuned, scenarios. Our code is publicly available for reproducibility and further research.
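To make the idea of a sum of positive Lyapunov exponents concrete, the following is a minimal sketch on a single pendulum. It is not the paper's computational framework. It assumes a frictionless pendulum with dynamics θ'' = -(g/l) sin θ (θ = 0 hanging down), and it approximates the local exponents at a frozen state by the real parts of the Jacobian eigenvalues, which is exact only at equilibria. The function names `pendulum_jacobian`, `eigenvalues_2x2`, and `local_suple`, and the parameter values, are hypothetical and chosen for illustration.

```python
import cmath
import math


def pendulum_jacobian(theta, g=9.81, length=1.0):
    """Jacobian of (theta, omega) -> (omega, -(g/l) sin(theta)) at angle theta.

    Assumption: frictionless single pendulum, theta = 0 is the bottom rest state.
    """
    return ((0.0, 1.0),
            (-(g / length) * math.cos(theta), 0.0))


def eigenvalues_2x2(m):
    """Eigenvalues of a 2x2 matrix from its trace and determinant."""
    (a, b), (c, d) = m
    trace = a + d
    det = a * d - b * c
    disc = cmath.sqrt(trace * trace - 4.0 * det)
    return ((trace + disc) / 2.0, (trace - disc) / 2.0)


def local_suple(theta, g=9.81, length=1.0):
    """Sum of the positive real parts of the Jacobian eigenvalues.

    Illustrative proxy for the sum of positive Lyapunov exponents at a
    frozen state; it coincides with the true exponents only at equilibria.
    """
    eigs = eigenvalues_2x2(pendulum_jacobian(theta, g, length))
    return sum(max(ev.real, 0.0) for ev in eigs)


if __name__ == "__main__":
    # Compare the bottom rest state with the upright position.
    for name, theta in (("bottom", 0.0), ("upright", math.pi)):
        print(name, local_suple(theta))
```

Under these assumptions the proxy is zero at the bottom rest state, where the eigenvalues are purely imaginary, and equals sqrt(g/l) at the upright position, which is the unstable equilibrium that the swing-up task targets.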
